NLP Papers

Titans by Google: The Era of AI After Transformers?

Dive into Titans, a new AI architecture by Google that shows promising results compared to Transformers. Is it paving the way for a new era in AI?…

rStar-Math by Microsoft: Can SLMs Beat OpenAI o1 in Math?

Discover how System 2 thinking through Monte Carlo Tree Search enables rStar-Math to rival OpenAI’s o1 in math, using Small Language Models!…

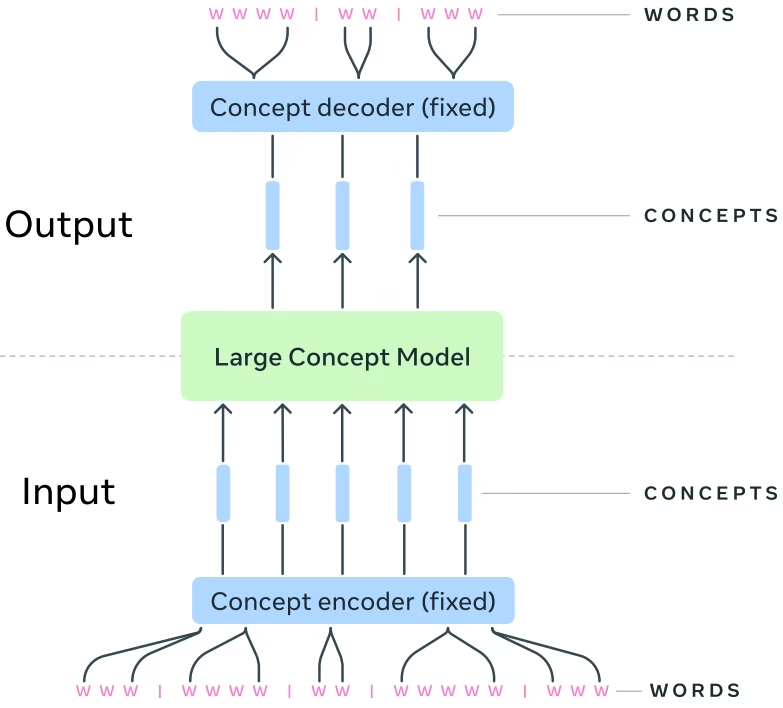

Large Concept Models (LCMs) by Meta: The Era of AI After LLMs?

Explore Meta’s Large Concept Models (LCMs), an AI architecture that processes concepts instead of tokens. Can it become the next LLM architecture?…

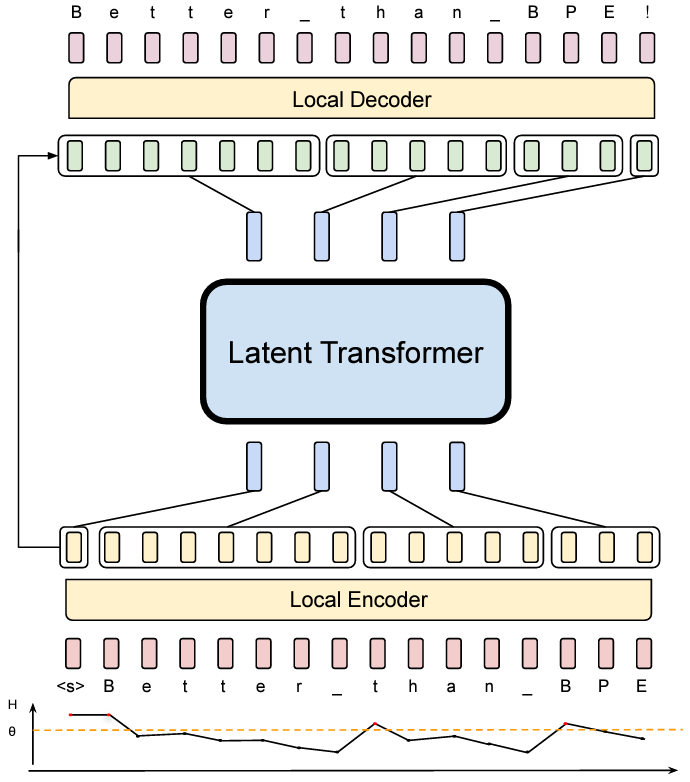

Byte Latent Transformer (BLT) by Meta AI: A Tokenizer-free LLM Revolution

Explore Byte Latent Transformer (BLT) by Meta AI: A tokenizer-free LLM that scales better than tokenization-based LLMs…

Coconut by Meta AI – Better LLM Reasoning With Chain of CONTINUOUS Thought?

Discover how Meta AI’s Chain of Continuous Thought (Coconut) empowers large language models (LLMs) to reason in their own language…

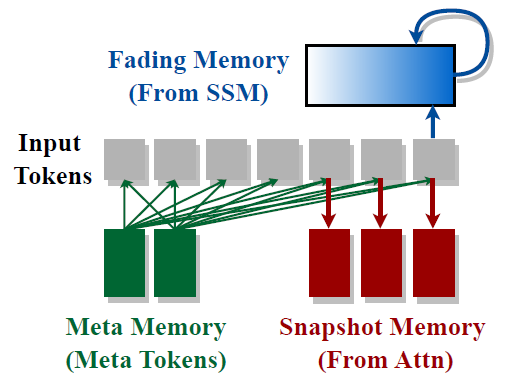

Hymba by NVIDIA: A Hybrid Mamba-Transformer Language Model

Discover NVIDIA’s Hymba model that combines Transformers and State Space Models for state-of-the-art performance in small language models…

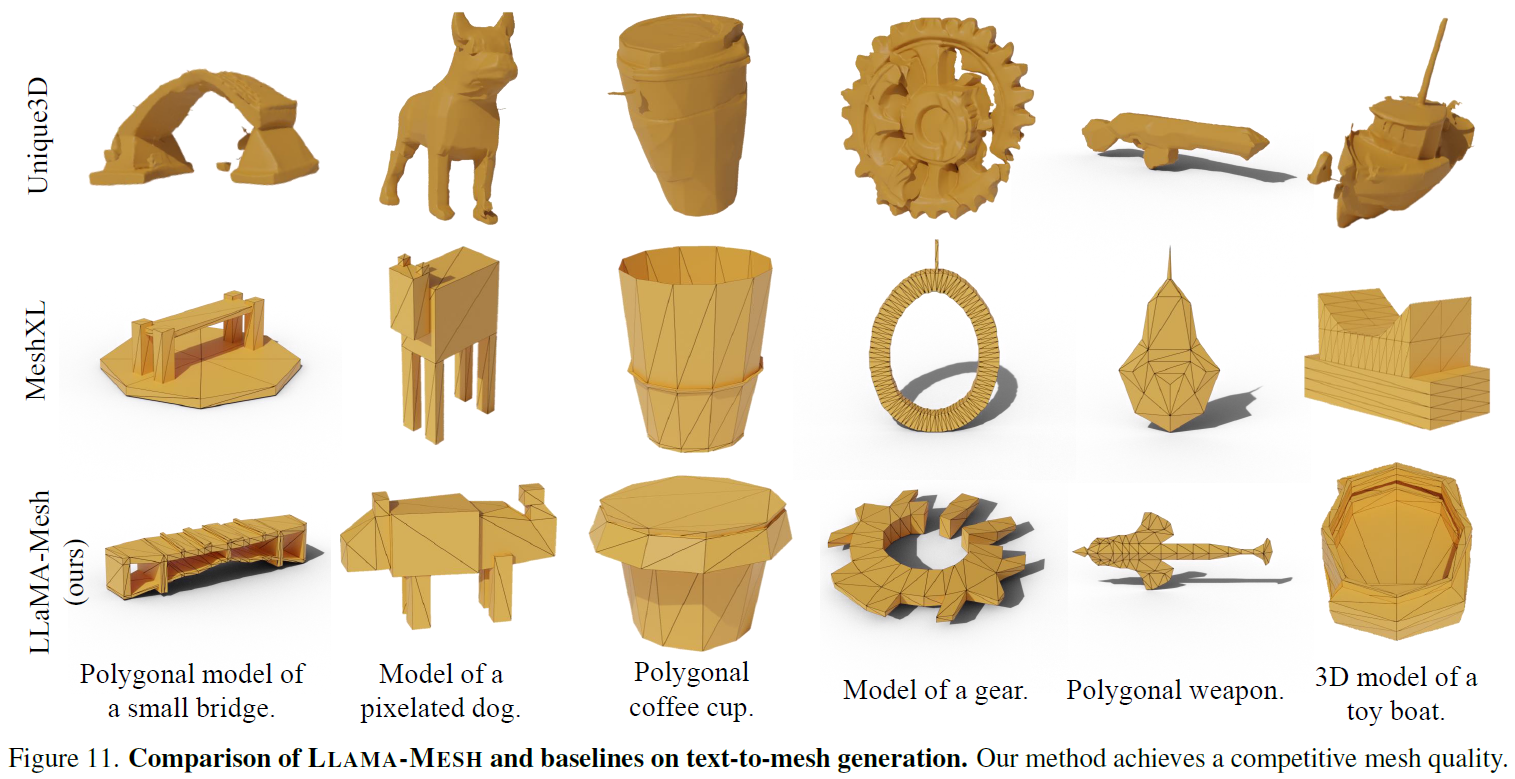

LLaMA-Mesh by Nvidia: LLM for 3D Mesh Generation

Dive into NVIDIA’s LLaMA-Mesh: Unifying 3D Mesh Generation with Language Models, an LLM adapted to understand 3D objects…

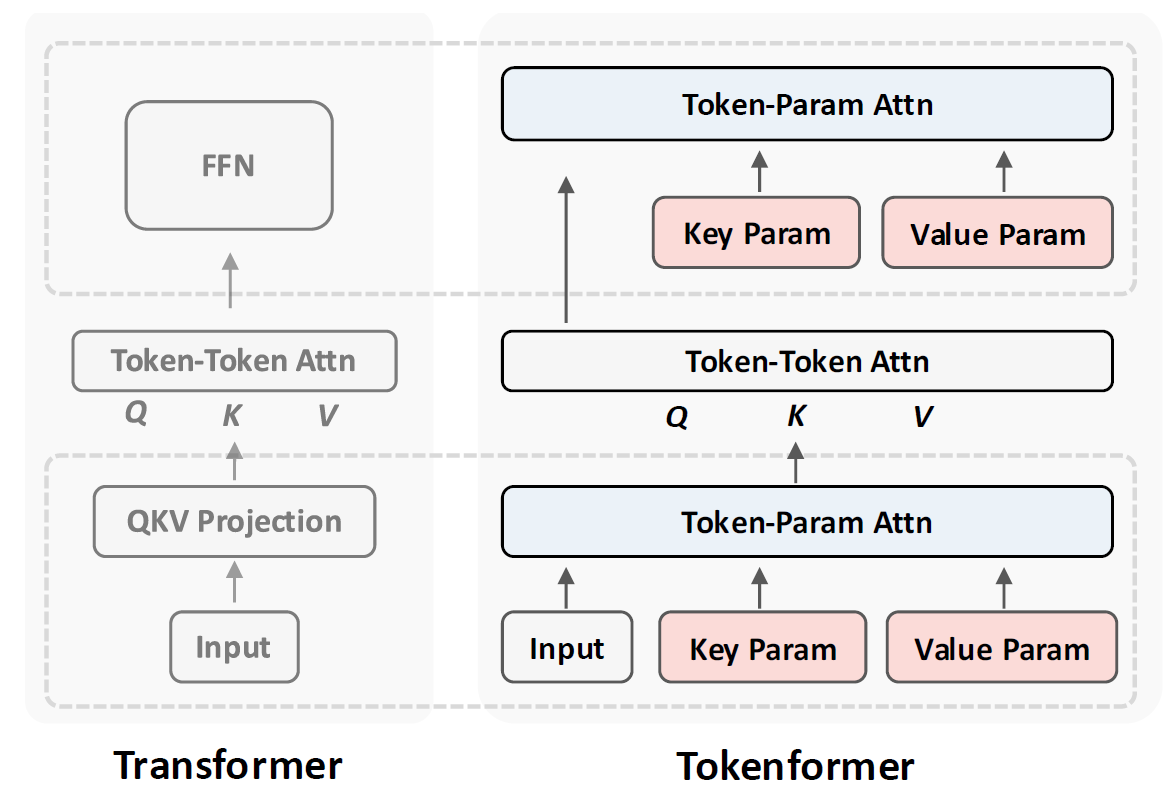

Tokenformer: Rethinking Transformer Scaling with Tokenized Model Parameters

Dive into Tokenformer, a novel architecture that improves Transformers to support incremental model growth without training from scratch…

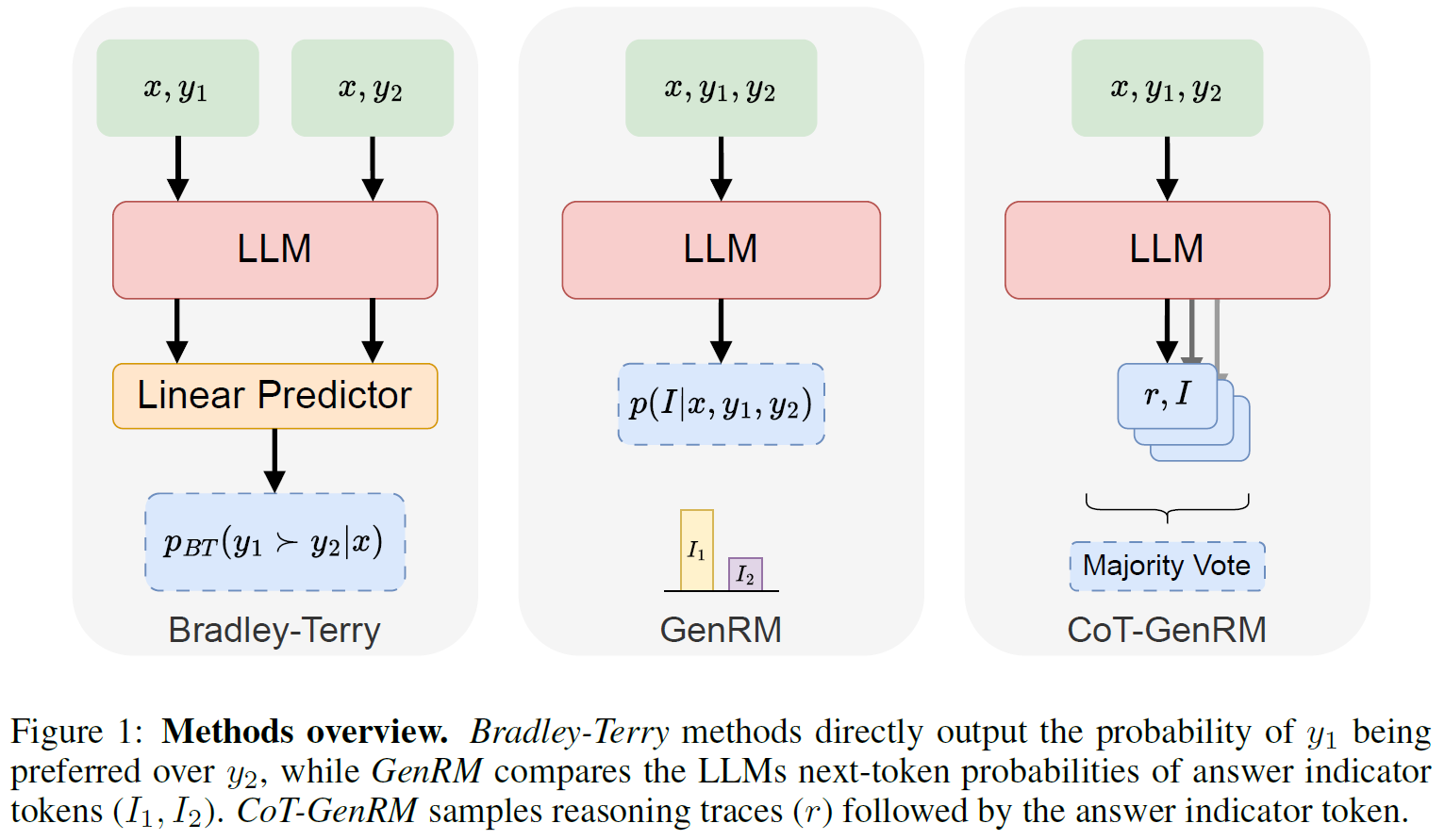

Generative Reward Models: Merging the Power of RLHF and RLAIF for Smarter AI

In this post we dive into Stanford research presenting Generative Reward Models, a hybrid human and AI RL approach to improve LLMs…

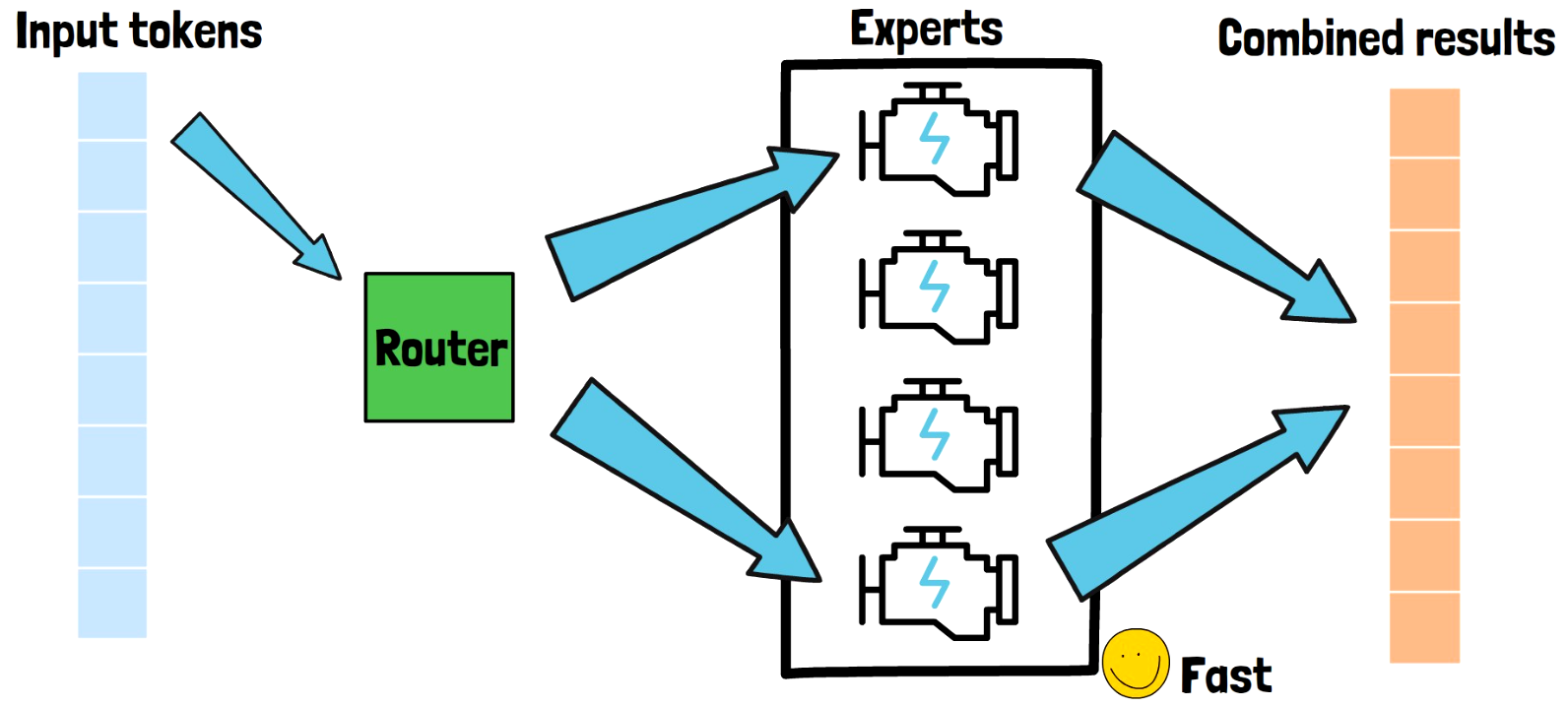

Introduction to Mixture-of-Experts | Original MoE Paper Explained

Diving into the original Google paper that introduced the Mixture-of-Experts (MoE) method, which has been critical to AI progress…

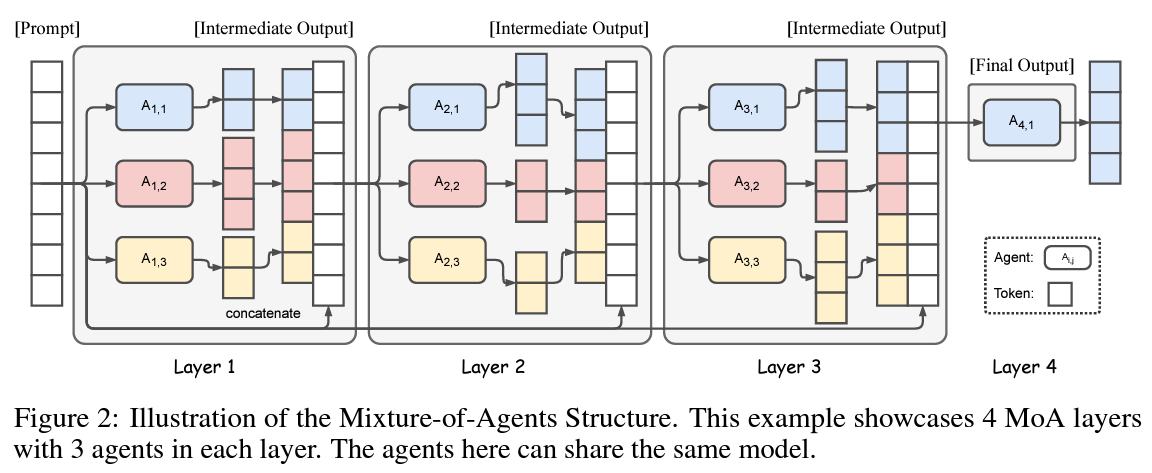

Mixture-of-Agents Enhances Large Language Model Capabilities

In this post we explain the Mixture-of-Agents method, which shows a way to combine open-source LLMs to beat GPT-4o on AlpacaEval 2.0…

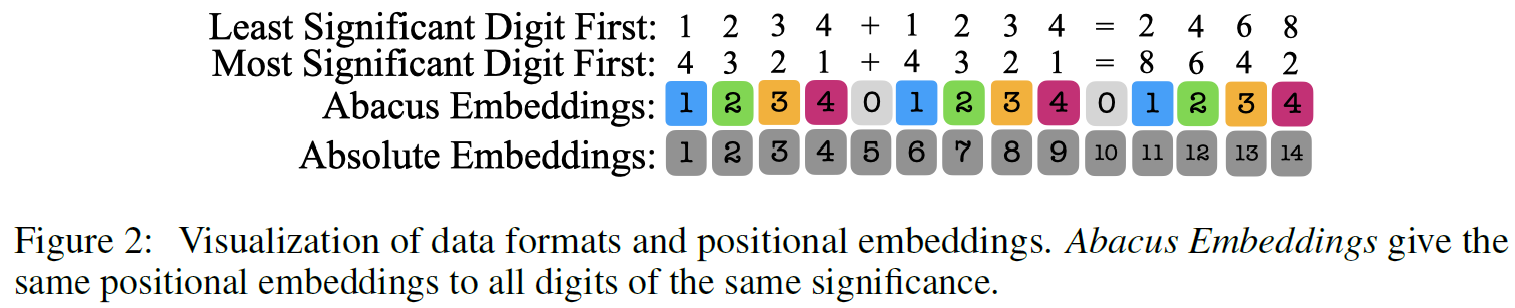

Arithmetic Transformers with Abacus Positional Embeddings

In this post we dive into Abacus Embeddings, which dramatically enhance Transformers' arithmetic capabilities with strong logical extrapolation…

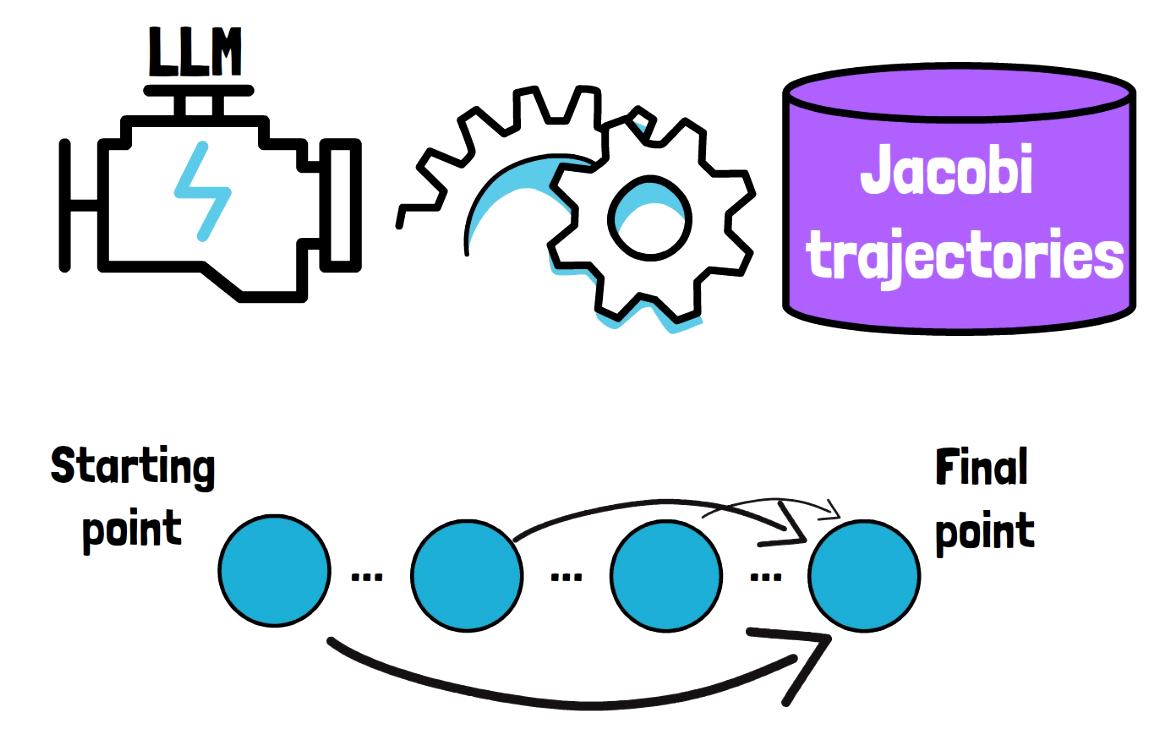

CLLMs: Consistency Large Language Models

In this post we dive into Consistency Large Language Models (CLLMs), a new family of models that can dramatically speed up LLM inference!…

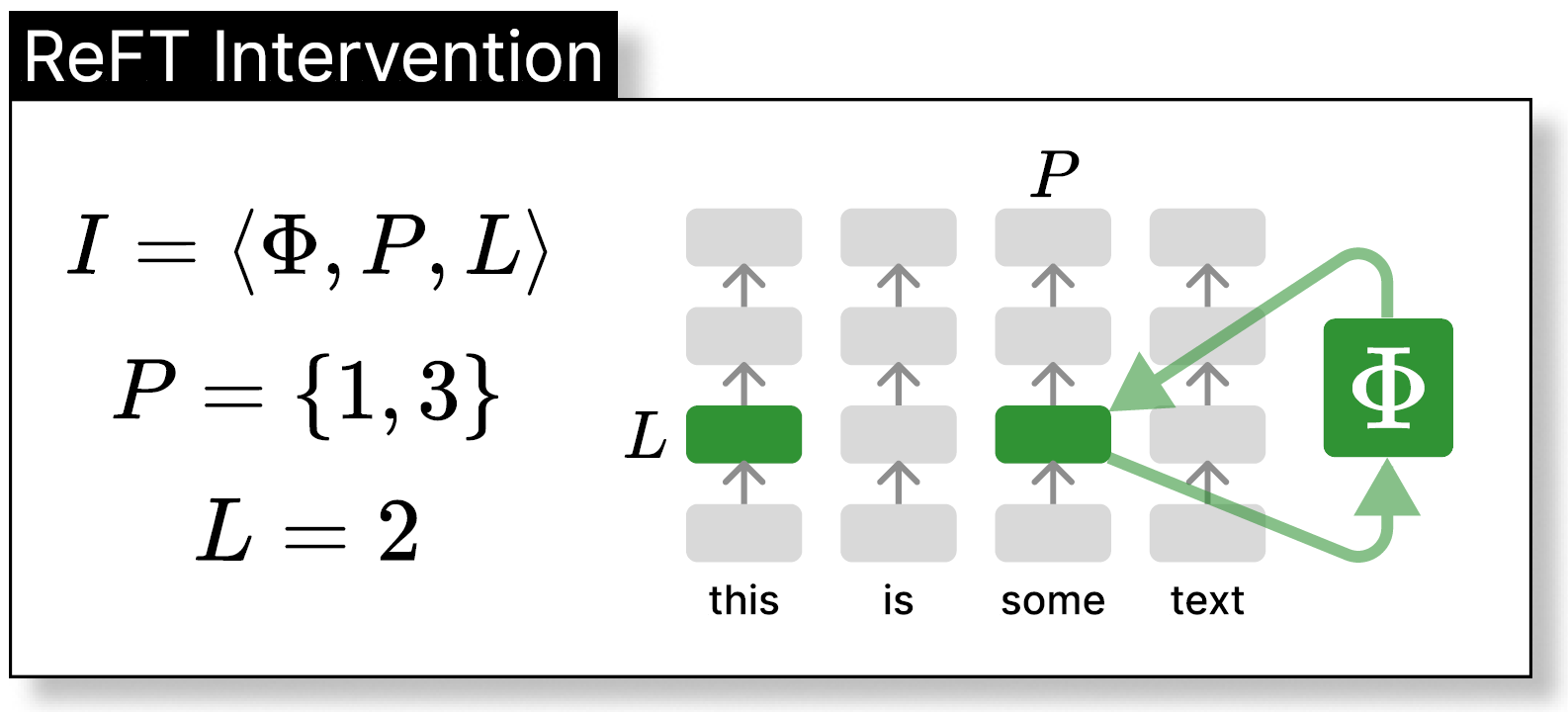

ReFT: Representation Finetuning for Language Models

Learn about Representation Finetuning (ReFT) by Stanford University, a method to fine-tune large language models (LLMs) efficiently…

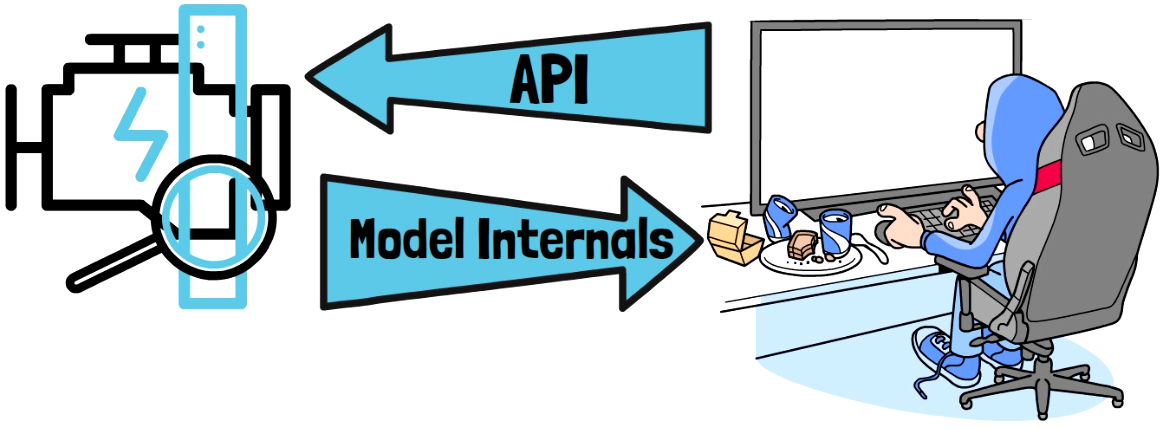

Stealing Part of a Production Language Model

What if we could discover the internal weights of OpenAI models? In this post we dive into a paper that presents an attack that steals LLM data…

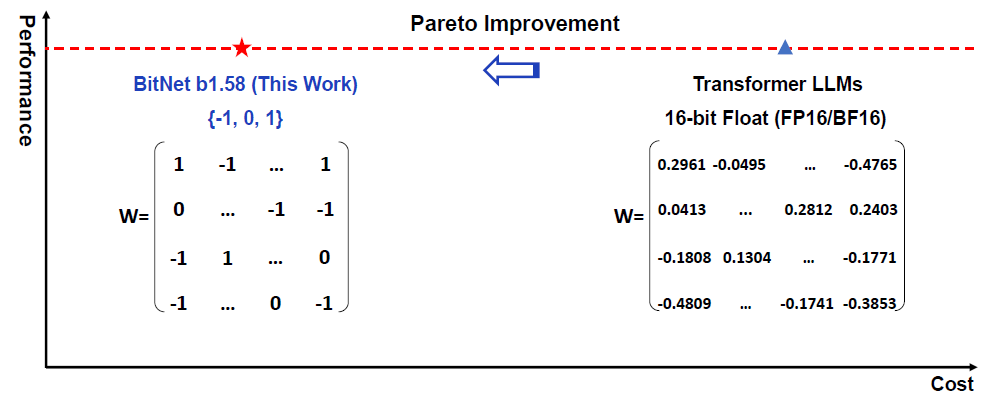

The Era of 1-bit LLMs: All Large Language Models are in 1.58 Bits

In this post we dive into the Era of 1-bit LLMs paper by Microsoft, which shows a promising direction for low-cost large language models…

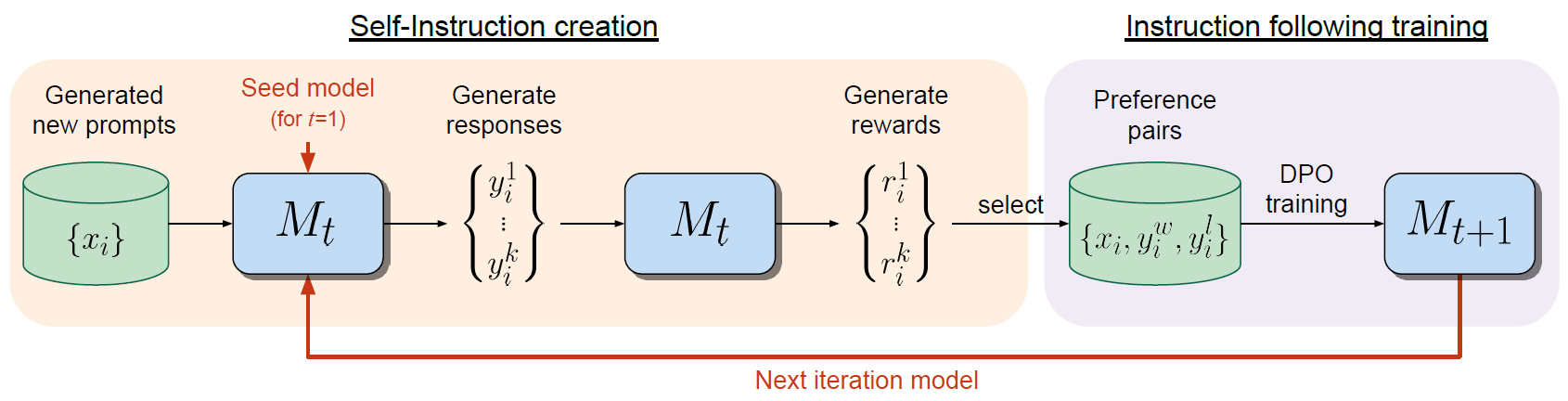

Self-Rewarding Language Models by Meta AI

In this post we dive into the Self-Rewarding Language Models paper by Meta AI, which could be a step towards open-source AGI…

Fast Inference of Mixture-of-Experts Language Models with Offloading

Diving into a research paper introducing an innovative method to enhance LLM inference efficiency using memory offloading…