Computer Vision Papers


-

How Do Vision Transformers Work?

Until vision transformers were invented, the dominant model architecture in computer vision was the convolutional neural network (CNN), which was invented in 1989 by famous researchers including Yann LeCun and Yoshua Bengio. In 2017, transformers were invented by Google and took the natural language processing domain by storm, but were not adapted successfully to computer…

-

From Diffusion Models to LCM-LoRA

Recently, a new research paper was released, titled “LCM-LoRA: A Universal Stable-Diffusion Acceleration Module”, which presents a method to generate high-quality images with large text-to-image generation models, specifically SDXL, dramatically faster. Not only can it run SDXL much faster, it can also do so for a fine-tuned SDXL, say for…

-

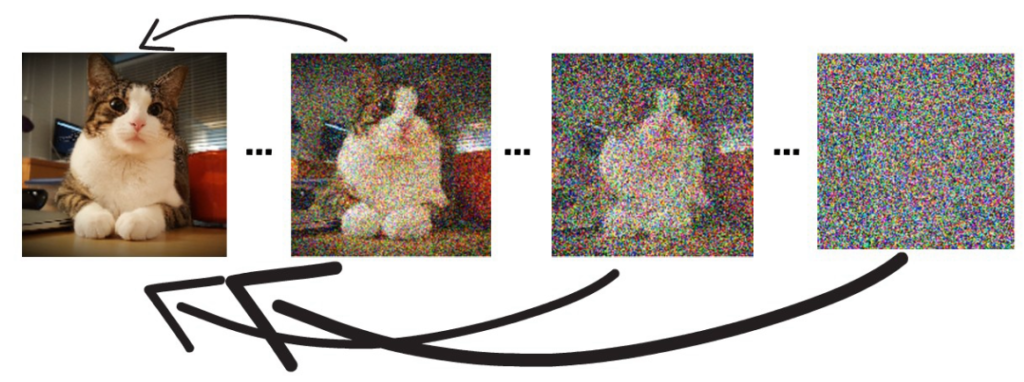

Consistency Models – Optimizing Diffusion Models Inference

Consistency models are a new type of generative model, introduced by OpenAI in a paper titled Consistency Models. In this post we will discuss why consistency models are interesting, what they are, and how they are created. Let’s start by asking: why should we care about consistency models? If you prefer a video…

-

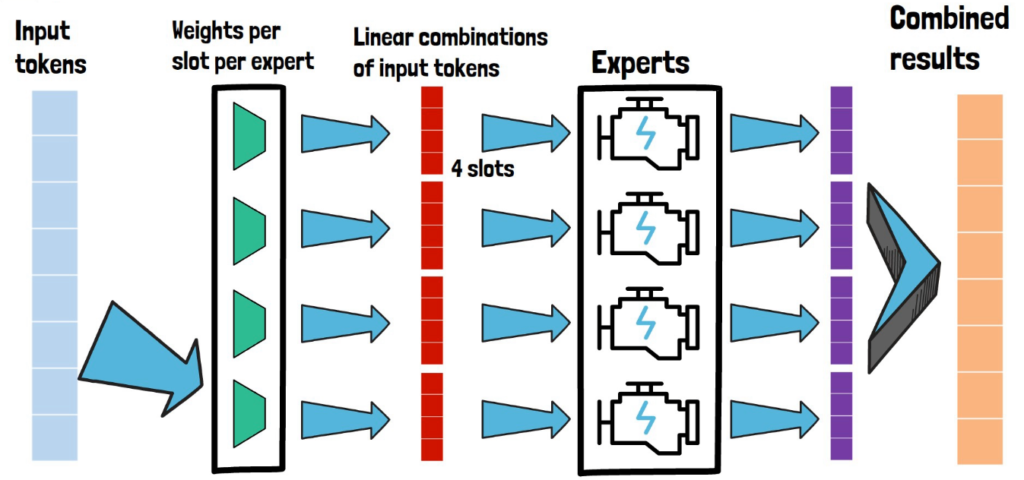

From Sparse to Soft Mixture of Experts

In this post we will dive into a research paper by Google DeepMind titled “From Sparse to Soft Mixtures of Experts”. In recent years, transformer-based models have been getting larger and larger in order to improve their performance. An undesirable consequence is that the computational cost is growing as well. And here comes…