NLP Papers

-

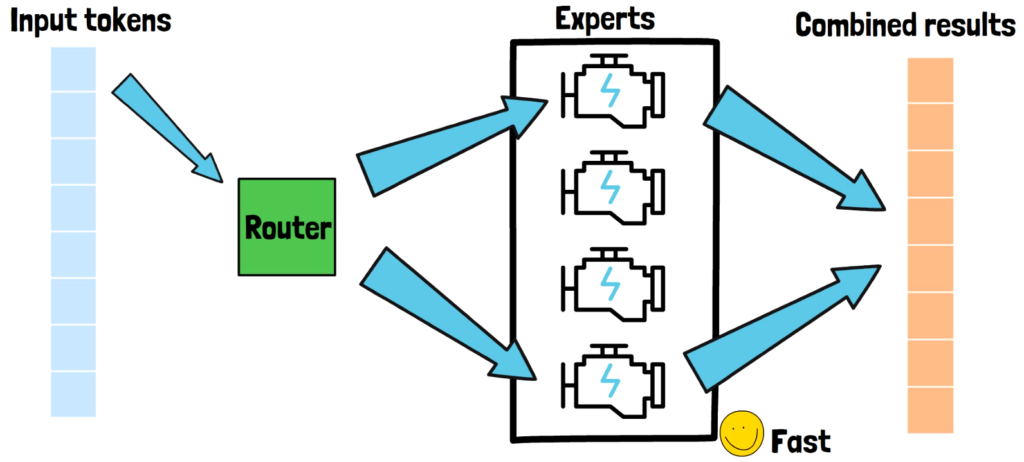

Introduction to Mixture-of-Experts (MoE)

In recent years, large language models have driven remarkable advances in AI, with closed-source models such as GPT-3 and GPT-4 and open-source models such as LLaMA 2 and 3, and many more. However, as we moved forward, these models got larger and larger and it became important to find…

-

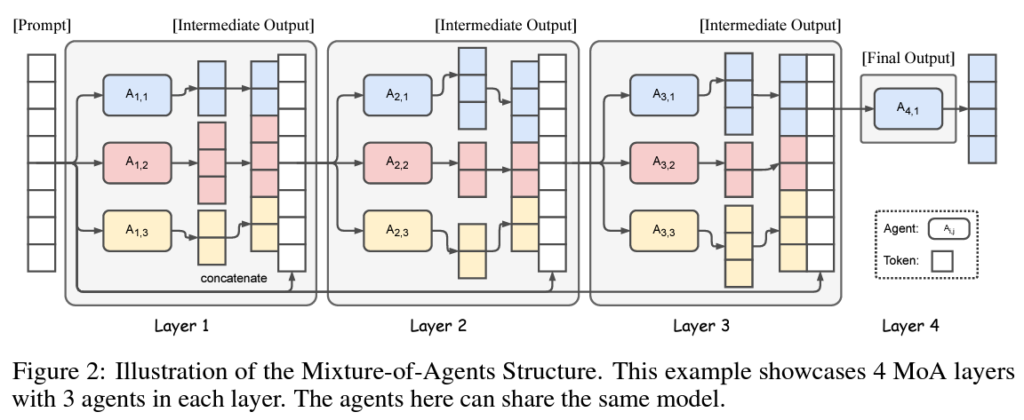

Mixture-of-Agents Enhances Large Language Model Capabilities

Motivation: In recent years we have witnessed remarkable advancements in AI, and specifically in natural language understanding, driven by large language models. Today, there are many LLMs out there, such as GPT-4, Llama 3, Qwen, Mixtral and many more. In this post we review a recent paper, titled: “Mixture-of-Agents Enhances Large Language Model…

-

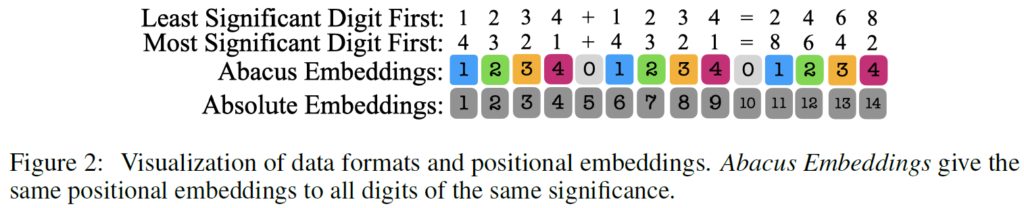

Arithmetic Transformers with Abacus Positional Embeddings

Introduction: In recent years, we have witnessed remarkable success driven by large language models (LLMs). While LLMs perform well in various domains, such as natural language problems and code generation, there is still a lot of room for improvement in complex multi-step and algorithmic reasoning. To study algorithmic reasoning capabilities without pouring significant…

-

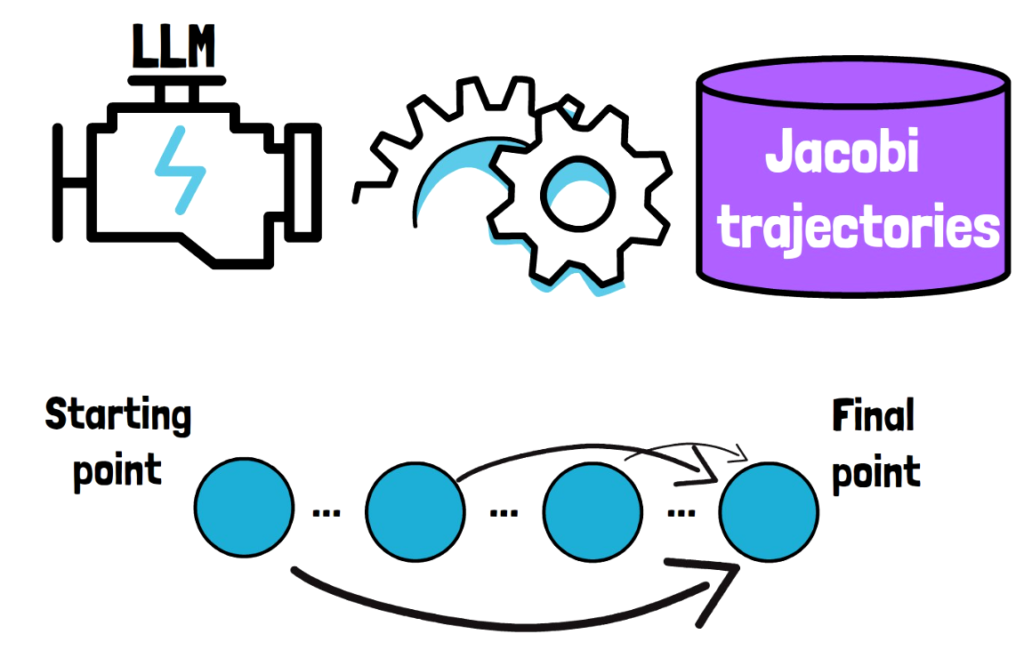

CLLMs: Consistency Large Language Models

In this post we dive into Consistency Large Language Models, or CLLMs for short, which were introduced in a recent research paper of the same name. Before diving in, if you prefer a video format then check out the following video. Motivation: Top LLMs such as GPT-4, LLaMA 3 and more are pushing the…

-

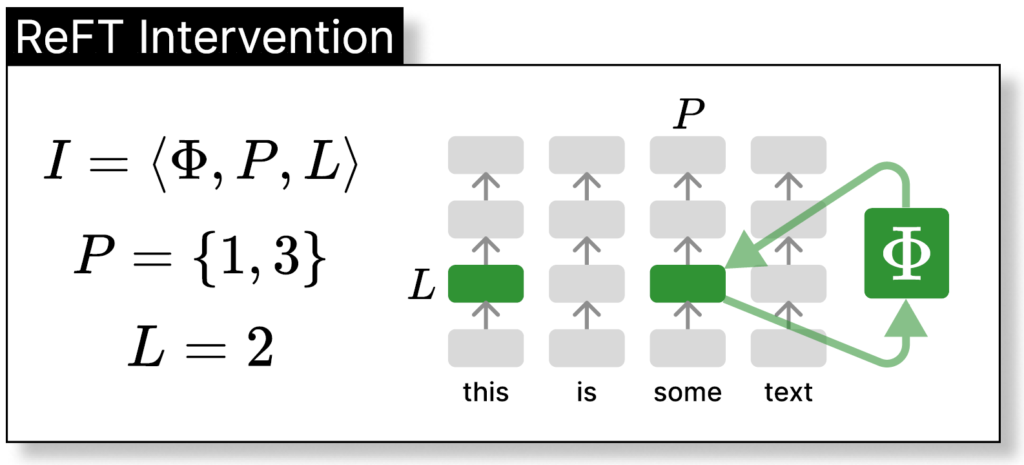

ReFT: Representation Finetuning for Language Models

In this post we dive into a recent research paper which presents a promising novel direction for fine-tuning LLMs, achieving remarkable results when considering both parameter count and performance. Before diving in, if you prefer a video format then check out the following video. Motivation – Finetuning a Pre-trained Transformer is Expensive: A common method…

-

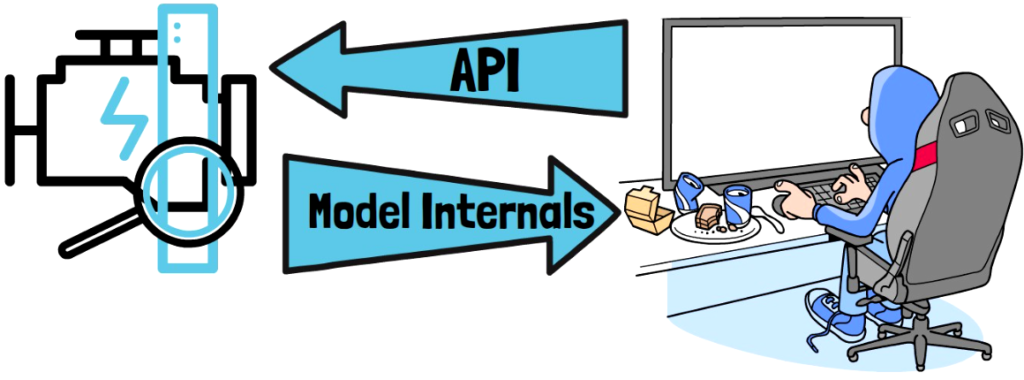

Stealing Part of a Production Language Model

Many of the top large language models today, such as GPT-4, Claude 3 and Gemini, are closed source, so a lot about the inner workings of these models is not known to the public. A common justification for this is the competitive landscape, since companies are investing a lot of money and effort to create…

-

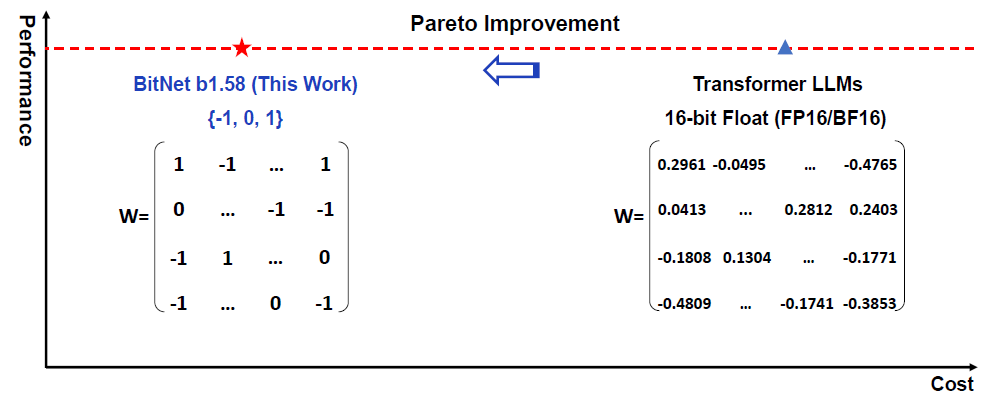

The Era of 1-bit LLMs: All Large Language Models are in 1.58 Bits

In this post, we dive into a new and exciting research paper by Microsoft, titled: “The Era of 1-bit LLMs: All Large Language Models are in 1.58 Bits”. In recent years, we’ve seen tremendous success of large language models with models such as GPT, LLaMA and more. As we move forward, we see that the…

-

Fast Inference of Mixture-of-Experts Language Models with Offloading

In this post, we dive into a new research paper, titled: “Fast Inference of Mixture-of-Experts Language Models with Offloading”. Motivation – LLMs Are Getting Larger: In recent years, large language models have driven remarkable advances in AI, with closed-source models such as GPT-3 and GPT-4 and open-source models such…

-

LLM in a flash: Efficient Large Language Model Inference with Limited Memory

In this post we dive into a new research paper from Apple titled: “LLM in a flash: Efficient Large Language Model Inference with Limited Memory”. Before diving in, if you prefer a video format then check out our video review for this paper. Motivation: In recent years, we’ve seen tremendous success of large language…