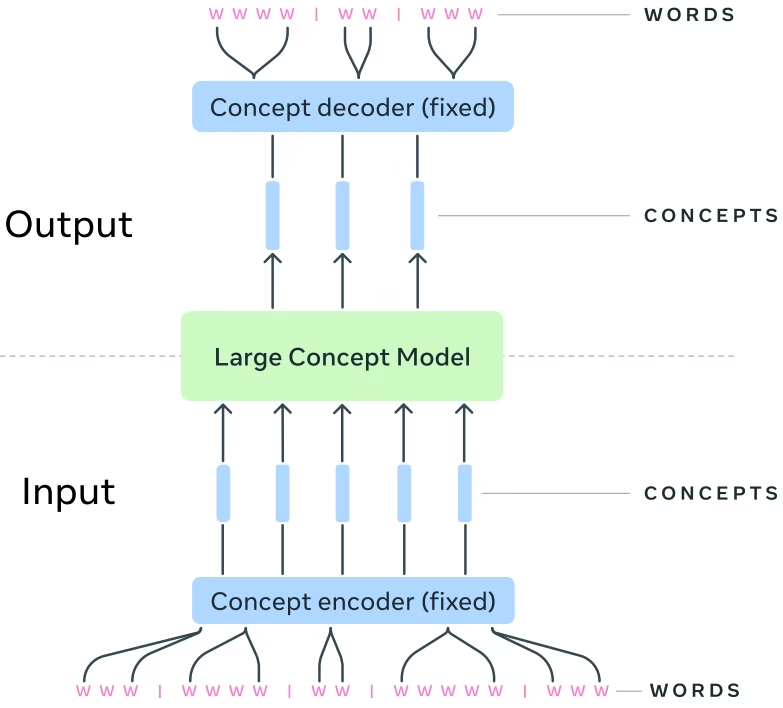

Large Concept Models (LCMs) by Meta: The Era of AI After LLMs?

Explore Meta’s Large Concept Models (LCMs) - an AI model that processes concepts instead of tokens. Can it become the next LLM architecture?

Large Concept Models (LCMs) by Meta: The Era of AI After LLMs? Read More »