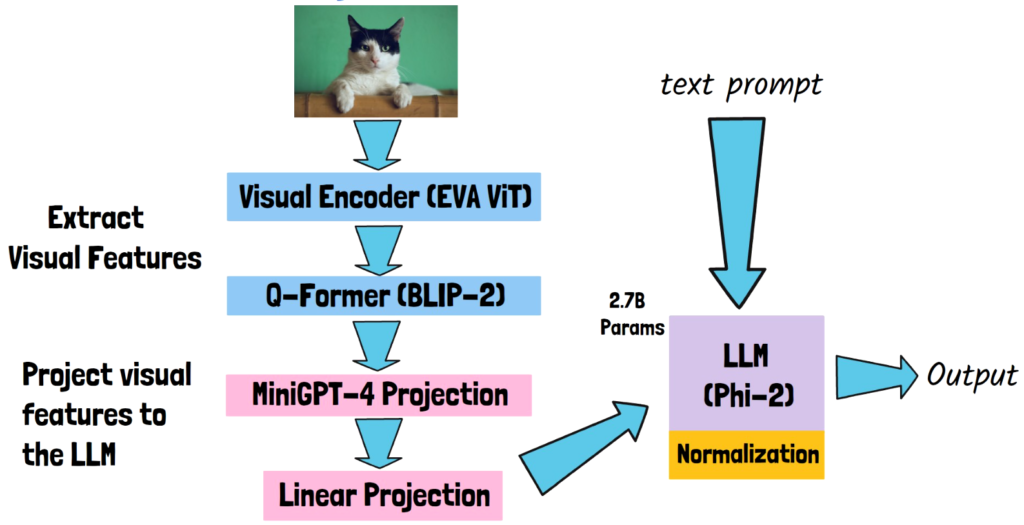

TinyGPT-V: Efficient Multimodal Large Language Model via Small Backbones

In this post we dive into TinyGPT-V, a new multimodal large language model introduced in the research paper “TinyGPT-V: Efficient Multimodal Large Language Model via Small Backbones”. Before diving in, if you prefer a video format, check out our video review of this paper: Motivation In recent years we’ve seen a […]
