Introduction to Mixture-of-Experts (MoE)

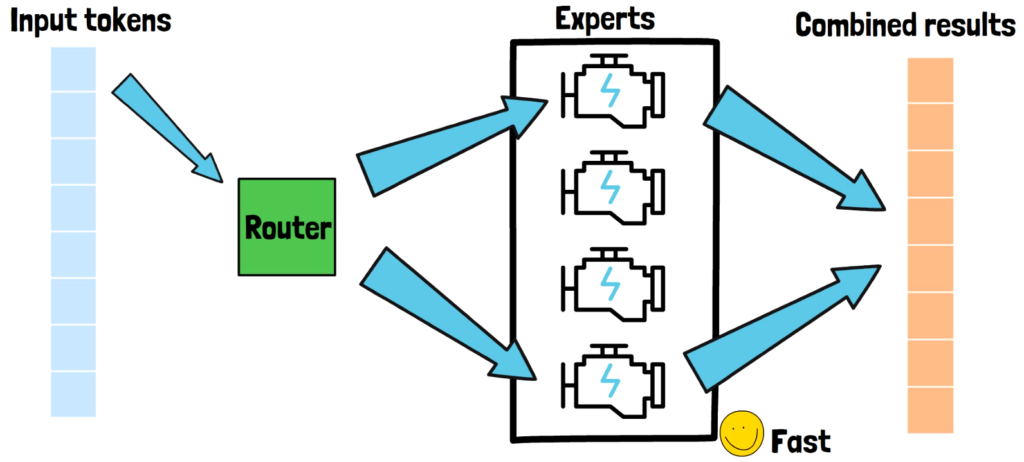

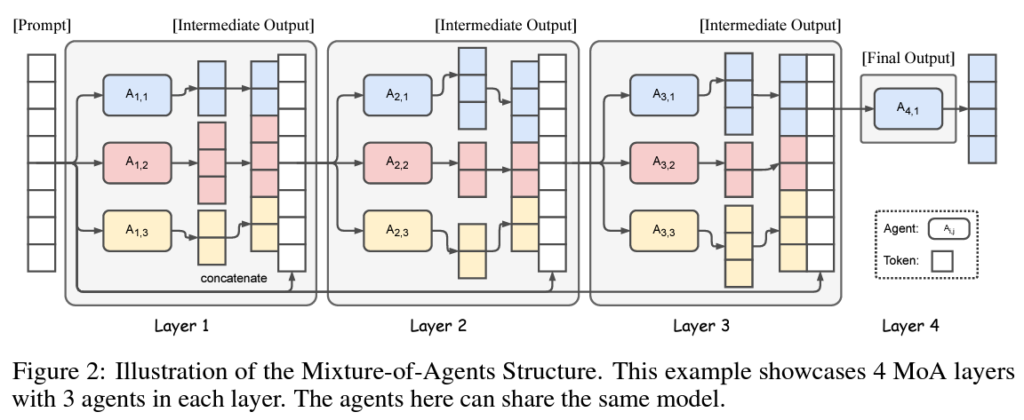

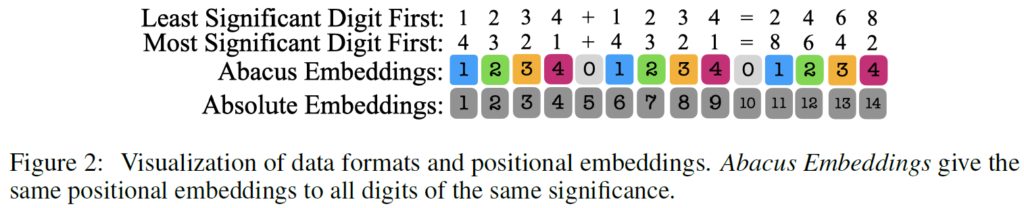

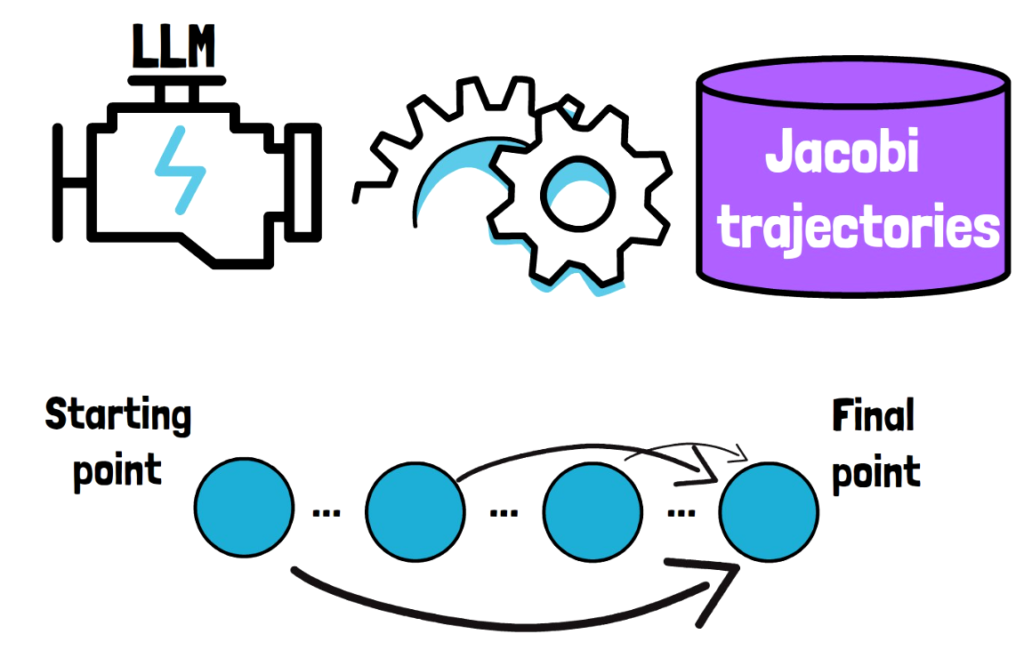

In recent years, large language models have driven remarkable advances in AI. These include closed-source models such as GPT-3 and GPT-4 and open-source models such as LLaMA 2 and LLaMA 3, among many others. However, as these models have grown ever larger, it has become important to find […]
