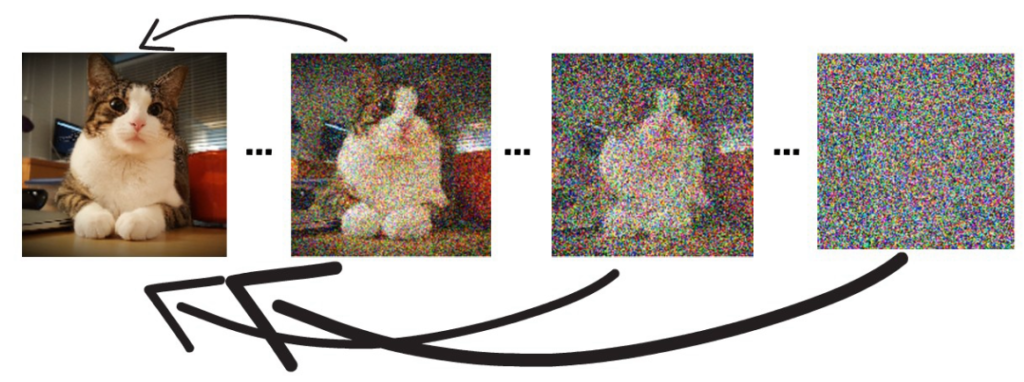

Consistency Models – Optimizing Diffusion Models Inference

Consistency models are a new type of generative model introduced by OpenAI. In this post, we will dive into how they work.
