Consistency models are a new type of generative model introduced by OpenAI in a paper titled Consistency Models.

In this post we will discuss why consistency models are interesting, what they are and how they are created.

If you prefer a video format, most of what we cover here is also covered in the video linked in the references at the end of this post.

Let’s start by asking: why should we care about consistency models?

Why Should We Care About Consistency Models?

Dominant image generation models such as DALL-E 2, Imagen and Stable Diffusion are based on a type of generative model called diffusion models. These models are able to generate amazing results, such as the avocado chair and the cute dog, but they have a drawback which consistency models can help to solve.

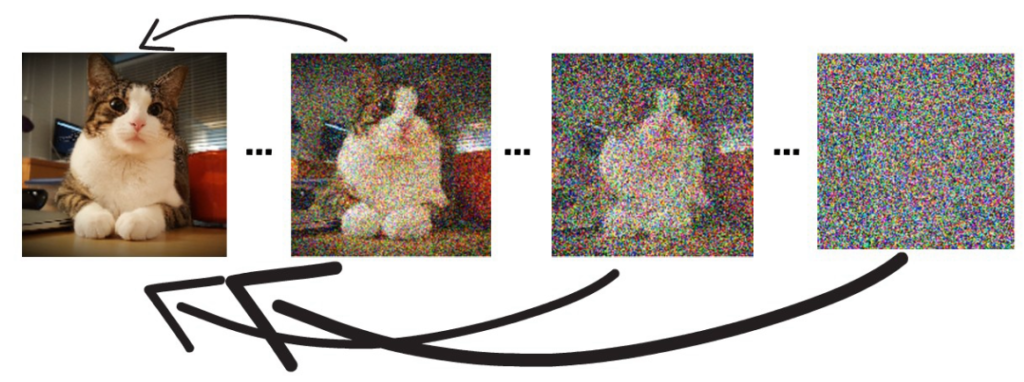

Diffusion models learn to gradually remove noise from an image in order to get a clear sample. The model starts with a random noise image, like the one on the right in the picture above, and at each step it removes some of the noise. The three dots indicate that we skip steps in this example. Finally, we get a nice clear image of a cat.

The drawback is that in order to generate a clear image, we need to go through this whole process of gradually removing the noise, which can take tens to thousands of steps and can get quite slow. For example, this makes diffusion models a poor fit for real-time applications. So let’s move on to see how consistency models can help.
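To make the cost concrete, here is a minimal sketch of an iterative denoising loop. It is not the sampler of any particular model: `toy_denoiser`, `TARGET`, the noise schedule and the simple update rule are all assumptions made for illustration, with a toy "dataset" that contains a single point so that the ideal denoiser is trivial.

```python
import numpy as np

TARGET = np.array([1.0, -2.0, 0.5])  # toy "clear image" (hypothetical data)

def toy_denoiser(x, t):
    """Hypothetical stand-in for a trained denoising network.

    The toy dataset is the single point TARGET, so the best guess for the
    clean image is always TARGET, whatever the noisy input is.
    """
    return TARGET

def iterative_denoise(x, noise_levels, denoiser):
    """Walk from the highest noise level to the lowest, one model call per step."""
    calls = 0
    for t, t_next in zip(noise_levels[:-1], noise_levels[1:]):
        direction = (x - denoiser(x, t)) / t  # points from the clean guess toward x
        x = x + (t_next - t) * direction      # small move toward the clean guess
        calls += 1
    return x, calls

rng = np.random.default_rng(0)
noise_levels = np.linspace(80.0, 0.002, num=51)  # 50 steps (assumed schedule)
x_start = TARGET + noise_levels[0] * rng.normal(size=TARGET.shape)
sample, calls = iterative_denoise(x_start, noise_levels, toy_denoiser)
print(calls)  # 50
```

Every step needs its own call to the network, so the generation time grows with the number of steps.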

What Are Consistency Models?

Consistency models are similar to diffusion models in the sense that they also learn to remove noise from an image. However, consistency models add a new idea: they also learn to map any image on a denoising path directly to the clear image at the end of that path.

The name comes from this property: the model learns to be consistent, producing the same clear image for any point on the same path.
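In symbols, this property can be written roughly as follows. The notation is an assumption for illustration, borrowed from the paper rather than from this post: $f$ is the consistency model, $x_t$ is the image at noise level $t$ on a given path, $\epsilon$ is the smallest noise level and $T$ the largest.

$$
f(x_t, t) = f(x_{t'}, t') \quad \text{for all } t, t' \in [\epsilon, T] \text{ on the same path}, \qquad f(x_\epsilon, \epsilon) = x_\epsilon
$$

The second condition says that an image with almost no noise is mapped to itself.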

With that capability, consistency models can jump directly from a completely noisy image to a clear image in a single step, skipping the slow iterative process of diffusion models.

Another powerful attribute of consistency models is that it is possible to use a few steps instead of one if a higher-quality image is needed, at the cost of more compute.

This is not done exactly as with diffusion models, where we get better quality by applying the model again to the current result. Rather, we start with a noisy image and run the trained consistency model on it to get a clear image. Then we add a bit of random noise to that clear image to get a noisy image again, and run the model on it once more. We can repeat this process for as many iterations as we want, and according to the consistency models paper this yields better results.
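The denoise, re-noise, denoise loop described above can be sketched as follows. This is a simplified illustration loosely modeled on the multistep sampling procedure in the paper, not the official implementation. `toy_consistency_fn`, `TARGET`, the values of `EPS` and `T_MAX`, and the chosen intermediate noise levels are all assumptions, and the toy function stands in for a trained network.

```python
import numpy as np

EPS = 0.002    # smallest noise level (assumed value)
T_MAX = 80.0   # largest noise level (assumed value)
TARGET = np.array([1.0, -2.0, 0.5])  # toy "clear image" (hypothetical data)

def toy_consistency_fn(x, t):
    """Hypothetical stand-in for a trained consistency model f(x_t, t).

    For a toy dataset containing only TARGET, this maps a noisy point at
    noise level t to the start of its path in one call.
    """
    return TARGET + (EPS / t) * (x - TARGET)

def multistep_sample(f, shape, timesteps, rng):
    """One call to f on pure noise, then one extra call per entry in timesteps."""
    # Start from pure noise at the largest noise level and jump to a clear sample.
    x = f(rng.normal(size=shape) * T_MAX, T_MAX)
    for t in timesteps:
        # Add noise back up to an intermediate level, then denoise again.
        z = rng.normal(size=shape)
        x_noisy = x + np.sqrt(t**2 - EPS**2) * z
        x = f(x_noisy, t)
    return x

rng = np.random.default_rng(0)
one_step = multistep_sample(toy_consistency_fn, TARGET.shape, [], rng)
three_step = multistep_sample(toy_consistency_fn, TARGET.shape, [20.0, 5.0], rng)
```

With an empty list of intermediate noise levels this reduces to single-step generation. Each additional level costs one more model call. In this toy setting the extra steps cannot improve anything because the stand-in function is already exact. The quality gain reported in the paper applies to real, imperfect models.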

Methods of Creating Consistency Models

There are two possible ways to train a consistency model. One way is distillation of a pre-trained diffusion model.

What this means is that we take a large pre-trained diffusion model, such as Stable Diffusion, and transfer knowledge from it to a new smaller consistency model.

In general, distillation means transferring knowledge from a large model to a new lighter model.

Using this approach, the researchers got better results than other distillation techniques that were applied to diffusion models, such as progressive distillation.

The second method is to train a consistency model from scratch, with which the researchers were also able to produce good results.

Consistency Models Training Process

During the training process, we follow the destruction path from a clear image to complete noise. The training process looks at pairs of points on this path.

The consistency model is invoked on each of the two images in a pair to try to restore the original cat image, and each invocation produces a result that is not identical to the other. The goal of training is to minimize that difference, so that when the model is applied to points on the same destruction path, it produces the same clear cat image. Training stops once this difference is small enough.
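The quantity being minimized can be sketched as below. This is a simplified illustration under stated assumptions, not the paper's full objective, which has further details (for example, a slowly updated target copy of the model) that are omitted here. `consistency_loss`, `toy_consistency_fn`, `identity_fn`, `TARGET` and the noise levels are hypothetical names and values, and the squared difference is just one possible distance.

```python
import numpy as np

EPS = 0.002  # smallest noise level (assumed value)
TARGET = np.array([1.0, -2.0, 0.5])  # toy "clear image" (hypothetical data)

def toy_consistency_fn(x, t):
    """Hypothetical stand-in for a well-trained consistency model on the toy data."""
    return TARGET + (EPS / t) * (x - TARGET)

def identity_fn(x, t):
    """Stand-in for an untrained model that returns its noisy input unchanged."""
    return x

def consistency_loss(f, clean, t_a, t_b, z):
    """Mean squared difference between model outputs at two points on one path."""
    # Both points use the same clean image and the same noise z, so they lie
    # on the same destruction path at two different noise levels.
    x_a = clean + t_a * z
    x_b = clean + t_b * z
    return float(np.mean((f(x_a, t_a) - f(x_b, t_b)) ** 2))

rng = np.random.default_rng(0)
z = rng.normal(size=TARGET.shape)
loss_consistent = consistency_loss(toy_consistency_fn, TARGET, 10.0, 8.0, z)
loss_inconsistent = consistency_loss(identity_fn, TARGET, 10.0, 8.0, z)
```

The consistent stand-in gives a loss of essentially zero, while the identity function gives a clearly larger loss. Training pushes a real model from the second situation toward the first.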

References

- Paper – https://arxiv.org/abs/2303.01469

- Code – https://github.com/openai/consistency_models

- Video – https://youtu.be/Bm63OwAl2Io

If you’re interested in more advancements in Computer Vision then here is another recommended read – https://aipapersacademy.com/dinov2-from-meta-ai-finally-a-foundational-model-in-computer-vision/