ImageBind is a model by Meta AI that can make sense of six different types of data. This is exciting because it brings AI a step closer to how humans observe their environment using multiple senses. In this post, we explain what this model is and why we should care about it by exploring what it lets us do, and then we explain how the researchers created it.

If you prefer a video format, most of what we cover here is also covered in the video linked in the references at the end of this post.

What is ImageBind?

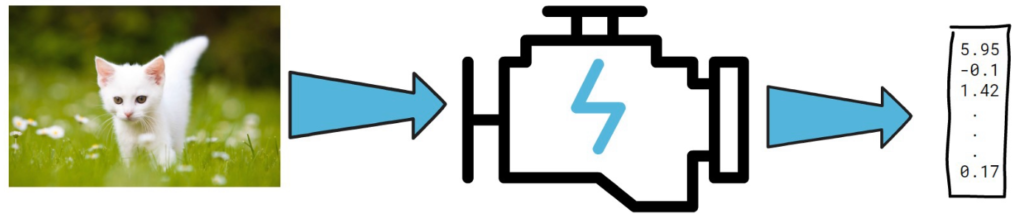

At the simplest level, ImageBind is a model that outputs vectors of numbers called embeddings. These embeddings capture the meaning of the model’s inputs.

In the picture below, for example, a cat image is provided to the model, which yields an embedding.

The cool thing about ImageBind is that we can provide it with various input types. In addition to the cat image, we can provide the sound of a cat and the model will also yield an embedding vector. Similarly, we can provide a text describing a white cat standing on the grass and get an embedding for it too.

With ImageBind, the embeddings we get here are not identical, but they share a common embedding space, and each one captures a similar meaning related to its cat input.

In addition to image, audio and text, the model can also understand video, depth sensor data, thermal data, and IMU data, where an IMU (inertial measurement unit) is the sensor that can tell when we tilt or shake our phone.
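To make the idea of a shared embedding space a bit more concrete, here is a minimal sketch that compares embeddings using cosine similarity, a common way to measure how close two vectors are. The vectors below are made up for illustration and are not real ImageBind outputs; real embeddings have far more dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; values near 1.0 mean similar direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical 4-dimensional embeddings, invented for this example.
cat_image = [0.9, 0.1, 0.3, 0.0]
cat_sound = [0.8, 0.2, 0.4, 0.1]
cat_text = [0.85, 0.05, 0.35, 0.05]
car_image = [0.0, 0.9, 0.1, 0.8]

# The three cat inputs come from different modalities, yet land close together.
sim_image_sound = cosine_similarity(cat_image, cat_sound)
sim_image_text = cosine_similarity(cat_image, cat_text)
# An unrelated input lands far away, even though it is also an image.
sim_image_car = cosine_similarity(cat_image, car_image)
```

In this toy setup, the cat image is much closer to the cat sound and the cat text than to the car image, which is the behavior we would hope for from a shared embedding space.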

That’s cool, but why is it so interesting? Let’s take a look at the power it gives us, starting with cross-modal retrieval.

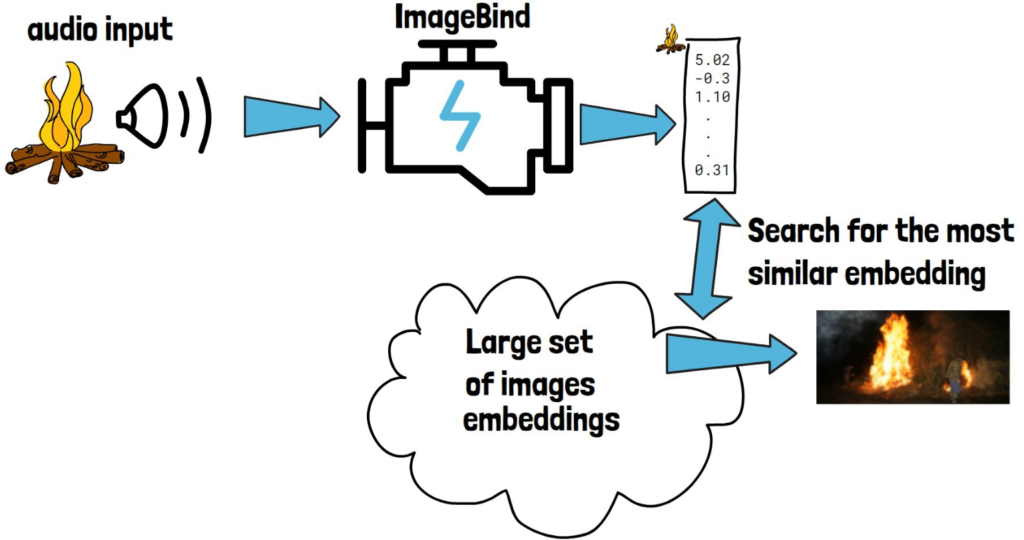

Cross-Modal Retrieval with ImageBind

A modality is a type of data, so image is one modality and text is another, for example. Cross-modal retrieval means providing a query from one modality, say the crackling sound of a fire, and retrieving from a usually large set of data in another modality, for example images, an item that fits the query well, like the image of fire in the example below.

ImageBind helps with that by first running the model on the query, audio in this case although it could be any other supported modality, which yields an embedding. The large set of images can be stored together with their precomputed embeddings, so we can then search for the image whose embedding is most similar to the query embedding.

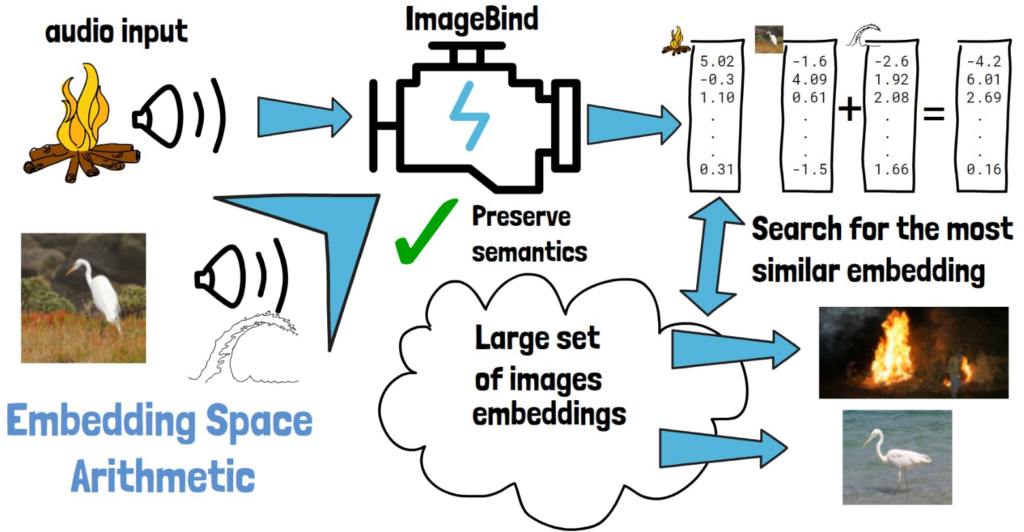
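The retrieval step described above can be sketched as a nearest-neighbor search over stored embeddings. This is a minimal illustration, assuming cosine similarity as the similarity measure; the file names, the `retrieve` helper and all the vectors are hypothetical, and in practice the embeddings would come from ImageBind and the search would likely use a dedicated vector index.

```python
import math

def normalize(v):
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def retrieve(query, index, top_k=1):
    """Return the names of the top_k stored items most similar to the query."""
    q = normalize(query)
    scored = []
    for name, embedding in index.items():
        e = normalize(embedding)
        score = sum(a * b for a, b in zip(q, e))
        scored.append((score, name))
    scored.sort(reverse=True)  # highest similarity first
    return [name for _, name in scored[:top_k]]

# Hypothetical precomputed image embeddings (made-up 3-dimensional vectors).
image_index = {
    "fire.jpg": [0.9, 0.1, 0.0],
    "ocean.jpg": [0.1, 0.9, 0.1],
    "dog.jpg": [0.0, 0.2, 0.9],
}

# Hypothetical embedding of the audio query (the crackle of a fire).
fire_audio_embedding = [0.8, 0.2, 0.1]

best_match = retrieve(fire_audio_embedding, image_index)
```

Because the query and the stored items live in the same space, the search itself does not need to know which modality each embedding came from.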

This is a super cool capability already, but what’s even crazier is that with ImageBind we can also take an image of a bird and the sound of waves (see the bottom left of the picture below), get their embeddings from ImageBind, sum these embeddings together, and retrieve an image that is similar to the sum, which gives us an image of the same bird in the sea. This shows that arithmetic in the embedding space naturally composes the semantics of the inputs, which is simply mind-blowing.

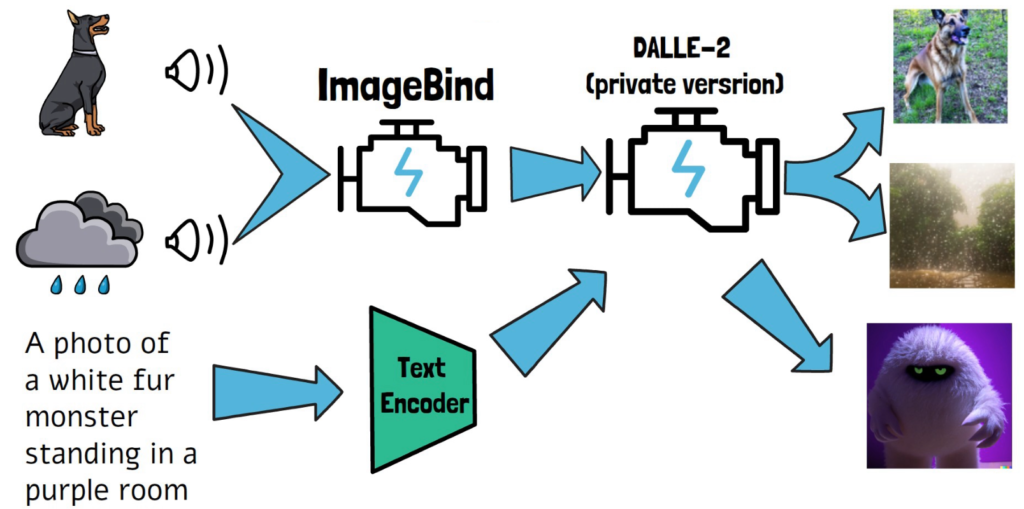
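Here is a small sketch of the embedding arithmetic idea: add two embeddings and search with the result. All names and vectors are hypothetical and chosen by hand so that the first dimension roughly means "bird" and the second "sea". Normalizing each embedding before summing is an assumption of this sketch, made so that neither input dominates the sum.

```python
import math

def normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def most_similar(query, index):
    """Return the name of the stored item with the highest cosine similarity."""
    q = normalize(query)
    def score(name):
        return sum(a * b for a, b in zip(q, normalize(index[name])))
    return max(index, key=score)

# Hypothetical image embeddings, invented for this example.
image_index = {
    "bird_on_branch.jpg": [0.9, 0.0, 0.3],
    "empty_sea.jpg": [0.0, 0.9, 0.2],
    "bird_at_sea.jpg": [0.7, 0.7, 0.1],
    "dog.jpg": [0.1, 0.1, 0.9],
}

bird_image_embedding = [0.9, 0.1, 0.2]   # hypothetical embedding of a bird image
waves_audio_embedding = [0.1, 0.9, 0.1]  # hypothetical embedding of wave sounds

# Sum the two normalized embeddings element by element.
combined = [
    a + b
    for a, b in zip(normalize(bird_image_embedding), normalize(waves_audio_embedding))
]

match_for_bird_only = most_similar(bird_image_embedding, image_index)
match_for_combined = most_similar(combined, image_index)
```

With these toy vectors, the bird embedding alone retrieves the bird on a branch, while the sum retrieves the bird at sea, which mirrors the behavior shown in the picture.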

Audio to Image Generation with ImageBind

Another capability that ImageBind enables is audio-to-image generation. As an example, the researchers show that they were able to provide the sound of a dog barking as input and generate an image of a dog, and also to use the sound of rain to generate a rainy image.

They did that by taking a pre-trained version of the image generation model DALLE-2, actually a private Meta AI implementation of DALLE-2. Usually, DALLE-2 gets a text prompt, creates an embedding for the text and uses that embedding to generate an image. With ImageBind, the researchers instead ran the audio through ImageBind to get an embedding and used it in place of the text prompt embedding in DALLE-2. This way the model can generate images from audio, which is very impressive. This opens the door to doing similar things with other models that operate on text embeddings, so we may hear about such progress in the future.

How ImageBind Was Created

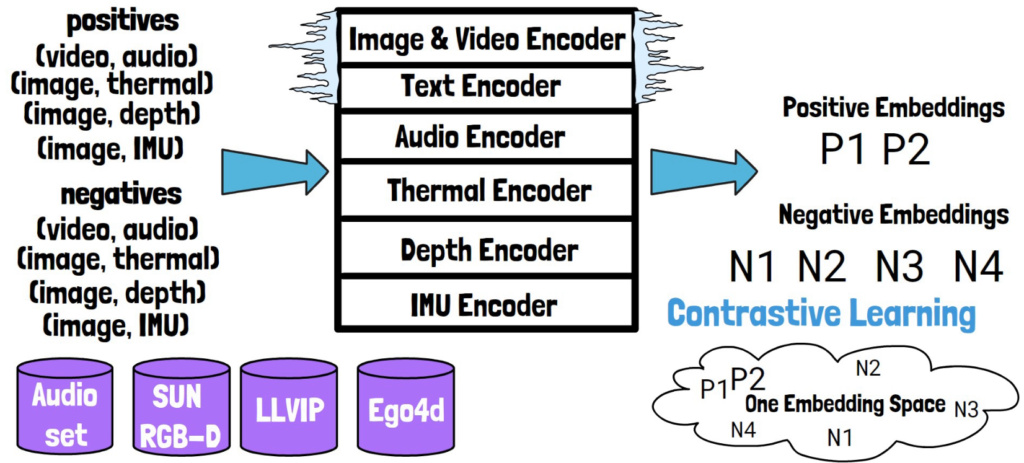

The ImageBind model can be thought of as a model with six channels, where each channel is an encoder for a different type of data. The researchers use the same encoder for image and video, and a different encoder for each of the other types of data. The image and text encoders are taken as is from CLIP, a model by OpenAI that connects text and images, and these encoders are kept frozen during training so they do not change at all. So, ImageBind relies heavily on CLIP. This decision is likely what made it possible to replace the text encoder in DALLE-2 and generate images from audio.

To train the other encoders, they take pairs of naturally matching samples, like audio and video from the AudioSet dataset. They were also able to extract images with matching thermal, depth and IMU data from existing datasets such as SUN RGB-D, LLVIP and Ego4D.
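As a rough mental model of this layout, the sketch below maps each input type to an encoder and marks which encoders are frozen. It only mirrors the description in this post; the `Encoder` class and all the names in it are hypothetical placeholders and not identifiers from the actual ImageBind code.

```python
from dataclasses import dataclass

@dataclass
class Encoder:
    name: str        # hypothetical label, not a real checkpoint name
    trainable: bool  # False means the weights stay frozen during training

# Image and text encoders come from CLIP and are kept frozen.
image_encoder = Encoder("clip_image_encoder", trainable=False)
text_encoder = Encoder("clip_text_encoder", trainable=False)

# Map each input type to its encoder; image and video share one encoder.
encoders = {
    "image": image_encoder,
    "video": image_encoder,
    "text": text_encoder,
    "audio": Encoder("audio_encoder", trainable=True),
    "depth": Encoder("depth_encoder", trainable=True),
    "thermal": Encoder("thermal_encoder", trainable=True),
    "imu": Encoder("imu_encoder", trainable=True),
}

# Input types whose encoders are actually updated during training.
trained_input_types = sorted(m for m, e in encoders.items() if e.trainable)

# Number of distinct encoders, counting the shared image/video encoder once.
distinct_encoder_count = len({id(e) for e in encoders.values()})
```

Counting the shared image and video encoder once, this gives the six channels described above, of which only the audio, depth, thermal and IMU encoders are trained.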

Additionally, they created non-matching, or negative, pairs.

Then, they take a batch that includes matching and non-matching samples and run the model on it to get an embedding for each input in each pair in the batch.

They then use a contrastive learning approach, which means that the loss function minimized during training pulls the embeddings of the positive pairs closer to each other and pushes the embeddings of the negative pairs further apart.

This way, the model gradually brings all modalities into the same embedding space.
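To illustrate the contrastive objective, here is a minimal sketch of an InfoNCE-style loss in plain Python. It assumes that item `i` in the image list matches item `i` in the other modality's list, and that every other item in the batch serves as a negative. The temperature value of 0.07 and the toy vectors are assumptions for illustration, and a real training loop would use a deep learning framework with automatic differentiation rather than plain lists.

```python
import math

def normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def info_nce(queries, keys, temperature=0.07):
    """Average InfoNCE loss where queries[i] should match keys[i]."""
    qs = [normalize(v) for v in queries]
    ks = [normalize(v) for v in keys]
    total = 0.0
    for i, q in enumerate(qs):
        # Similarity of this query to every key in the batch, scaled by temperature.
        logits = [sum(a * b for a, b in zip(q, k)) / temperature for k in ks]
        # Numerically stable log of the softmax denominator.
        m = max(logits)
        log_denominator = m + math.log(sum(math.exp(l - m) for l in logits))
        # Negative log-probability assigned to the matching key.
        total += log_denominator - logits[i]
    return total / len(qs)

def symmetric_loss(image_embeddings, other_embeddings, temperature=0.07):
    """Sum of the image-to-other and other-to-image losses."""
    return (info_nce(image_embeddings, other_embeddings, temperature)
            + info_nce(other_embeddings, image_embeddings, temperature))

# Toy batch of two pairs with made-up 2-dimensional embeddings.
image_embeddings = [[1.0, 0.0], [0.0, 1.0]]
aligned_audio = [[0.9, 0.1], [0.1, 0.9]]     # each audio is close to its image
misaligned_audio = [[0.1, 0.9], [0.9, 0.1]]  # each audio is close to the wrong image

loss_aligned = symmetric_loss(image_embeddings, aligned_audio)
loss_misaligned = symmetric_loss(image_embeddings, misaligned_audio)
```

The loss is small when matching pairs are already close and large when they are not, so minimizing it pulls positives together and pushes negatives apart.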

An important note here is that it would not be feasible to find matching pairs across all combinations of modalities. So the researchers don’t do that and instead only pair each modality with images. This is the source of the name ImageBind, since the image modality binds all the other modalities together, and of the paper’s title, which speaks of one embedding space to bind them all.

References

- Paper – https://arxiv.org/abs/2305.05665

- Demo – https://imagebind.metademolab.com/

- Video – https://youtu.be/pVa-r8Heu-A

Another recommended read about a more recent advancement from Meta AI – https://aipapersacademy.com/i-jepa-a-human-like-computer-vision-model/