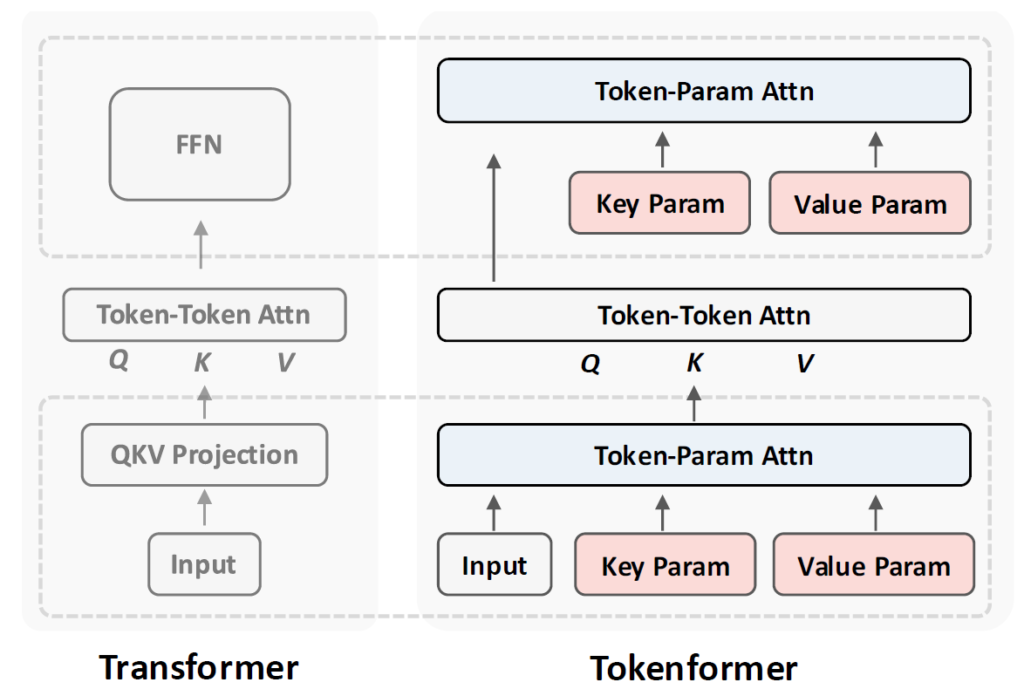

Titans by Google: The Era of AI After Transformers?

Dive into Titans, a new AI architecture by Google, showing promising results comparing to Transformers! Paving the way for a new era in AI?

Titans by Google: The Era of AI After Transformers? Read More »