Trending

DeepSeek’s mHC Explained: Manifold-Constrained Hyper-Connections

Manifold-Constrained Hyper-Connections (mHC) explained: How DeepSeek rewires residual connections in LLMs for next-gen AI…

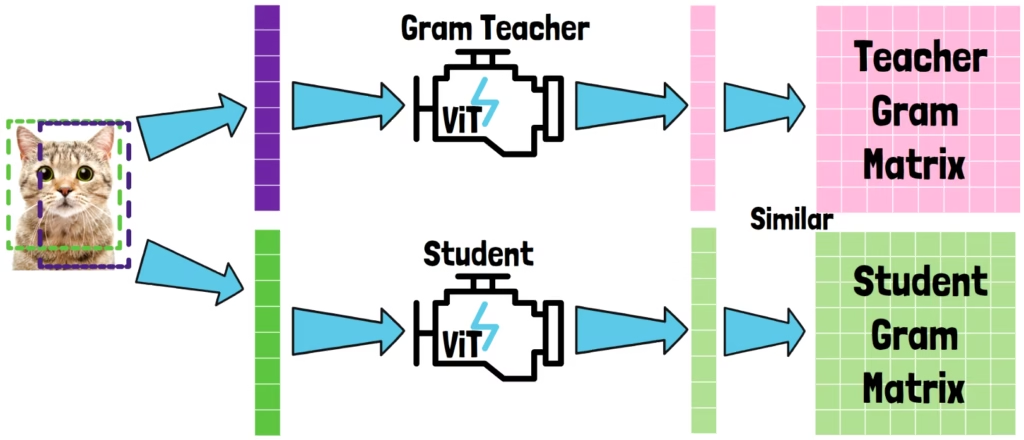

DINOv3 Paper Explained: The Computer Vision Foundation Model

In this post we break down Meta AI’s DINOv3 research paper, which introduces a state-of-the-art Computer Vision foundation models family…

Looking for a specific paper or subject?

Latest AI Papers Reviews

DeepSeek’s mHC Explained: Manifold-Constrained Hyper-Connections

Manifold-Constrained Hyper-Connections (mHC) explained: How DeepSeek rewires residual connections in LLMs for next-gen AI…

Emergent Hierarchical Reasoning in LLMs Through Reinforcement Learning

Discover how reinforcement learning enables hierarchical reasoning in LLMs and how HICRA improves on top of GRPO…

Less Is More: Tiny Recursive Model (TRM) Paper Explained

In this post, we break down the TRM paper, a simpler version of the HRM, that beats HRM and top reasoning LLMs with a tiny 7M params model…

DINOv3 Paper Explained: The Computer Vision Foundation Model

In this post we break down Meta AI’s DINOv3 research paper, which introduces a state-of-the-art Computer Vision foundation models family…

The Era of Hierarchical Reasoning Models?

In this post we break down the Hierarchical Reasoning Model (HRM), a new model that rivals top LLMs on reasoning benchmarks with only 27M…

Microsoft’s Reinforcement Pre-Training (RPT) – A New Direction in LLM Training?

In this post we break down Microsoft’s Reinforcement Pre-Training, which scales up reinforcement learninng with next-token reasoning…

Darwin Gödel Machine: Self-Improving AI Agents

In this post we explain the Darwin Gödel Machine, a novel method for self-improving AI agents by Sakana AI…

Continuous Thought Machines (CTMs) – The Era of AI Beyond Transformers?

Dive into Continuous Thought Machines, a novel architecture that strive to push AI closer to how the human brain works…

Perception Language Models (PLMs) by Meta – A Fully Open SOTA VLM

Dive into Perception Language Models by Meta, a family of fully open SOTA vision-language models with detailed visual understanding…

GRPO Reinforcement Learning Explained (DeepSeekMath Paper)

DeepSeekMath is the fundamental GRPO paper, the reinforcement learning method used in DeepSeek-R1. Dive in to understand how it works…

DAPO: Enhancing GRPO For LLM Reinforcement Learning

Explore DAPO, an innovative open-source Reinforcement Learning paradigm for LLMs that rivals DeepSeek-R1 GRPO method…

Cheating LLMs & How (Not) To Stop Them | OpenAI Paper Explained

Discover how OpenAI’s research reveals AI models cheating the system through reward hacking — and what happens when trying to stop them…

Most Read AI Papers Reviews

GRPO Reinforcement Learning Explained (DeepSeekMath Paper)

DeepSeekMath is the fundamental GRPO paper, the reinforcement learning method used in DeepSeek-R1. Dive in to understand how it works…

DeepSeek-R1 Paper Explained – A New RL LLMs Era in AI?

Dive into the groundbreaking DeepSeek-R1 research paper, introduces open-source reasoning models that rivals the performance OpenAI’s o1!…

Titans by Google: The Era of AI After Transformers?

Dive into Titans, a new AI architecture by Google, showing promising results comparing to Transformers! Paving the way for a new era in AI?…

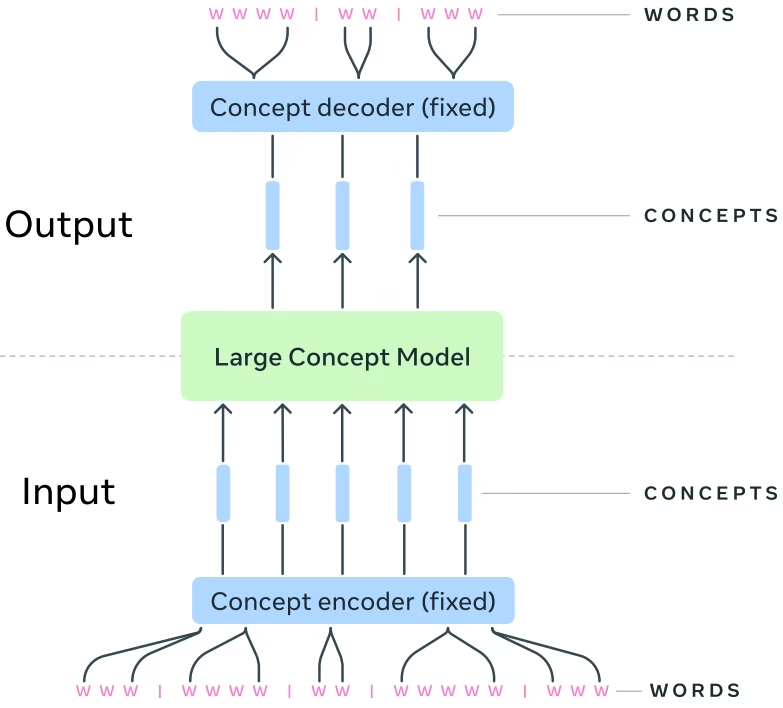

Large Concept Models (LCMs) by Meta: The Era of AI After LLMs?

Explore Meta’s Large Concept Models (LCMs) - an AI model that processes concepts instead of tokens. Can it become the next LLM architecture?…

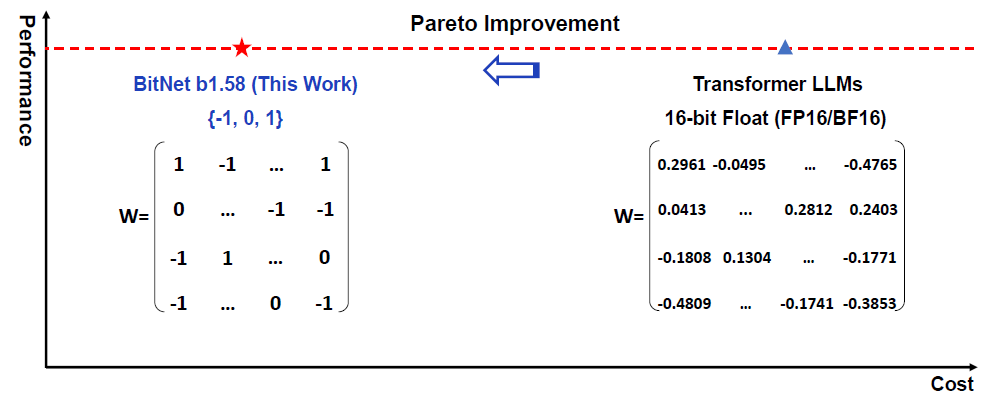

The Era of 1-bit LLMs: All Large Language Models are in 1.58 Bits

In this post we dive into the era of 1-bit LLMs paper by Microsoft, which shows a promising direction for low cost large language models…

DINOv2 from Meta AI – A Foundational Model in Computer Vision

DINOv2 by Meta AI finally gives us a foundational model for computer vision. We’ll explain what it means and why DINOv2 can count as such…