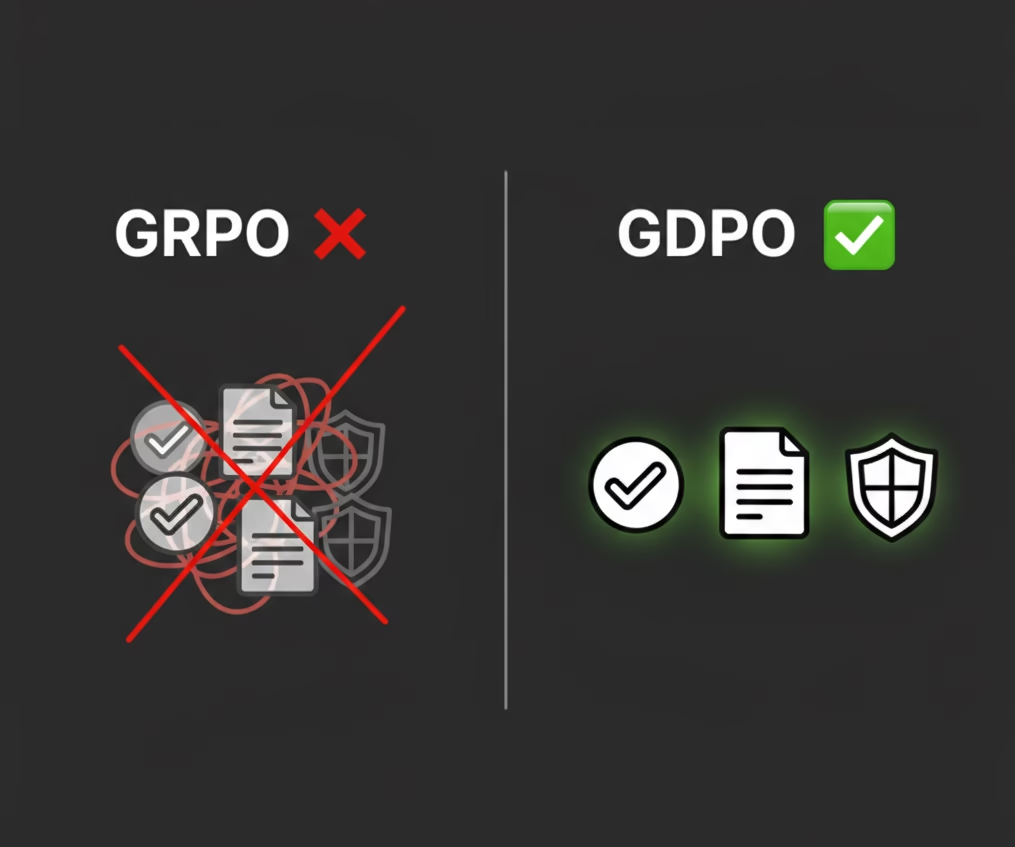

GDPO Explained: How NVIDIA Fixes GRPO for Multi-Reward LLM Reinforcement Learning

GDPO is NVIDIA’s proposed fix for the limitations of GRPO when a large language model is trained with reinforcement learning (RL) against multiple reward signals. In this post, we break down the paper.