From Sparse to Soft Mixture of Experts

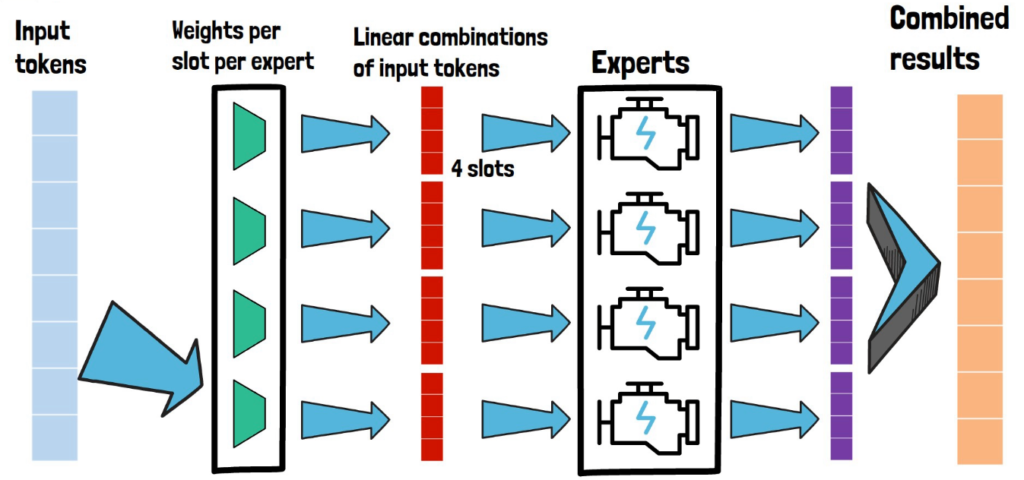

In this post we dive into a Google DeepMind research paper titled “From Sparse to Soft Mixtures of Experts”. In recent years, transformer-based models have kept growing in size to improve their performance. An undesirable consequence is that their computational cost grows as well. And here comes […]
