In this post, we break down a research paper from Stanford University titled “s1: Simple Test-Time Scaling”, introducing a model that rivals o1-preview with just 1k samples.

Introduction

In recent years, large language models (LLMs) have continuously improved, achieving remarkable results that impact many aspects of our lives. With s1, AI may have taken another intriguing step forward. Traditionally, enhancing LLM capabilities has relied on training-time scaling—training models for longer periods on more data. Think of this as developing the “brain” of the model, where it learns patterns and acquires knowledge from vast amounts of data, building a foundation of skills and understanding.

The Rise of Test-Time Scaling

A newer paradigm, test-time scaling, has emerged as a game-changer. This method involves increasing compute at test time to achieve better results. In essence, it’s about teaching the model to use its “brain” more effectively, optimizing performance during real-world usage.

This paradigm has proven extremely beneficial with the release of OpenAI’s o1, which dramatically enhanced LLM performance on complex reasoning tasks. However, since o1 remains closed-source, it has sparked massive research efforts in the AI community to explore different approaches for test time scaling.

One notable effort is s1 from Stanford University, which explores a straightforward yet highly effective method to achieve test-time scaling and strong reasoning performance. In this post we break down the key components of the paper, the results, and why it matters.

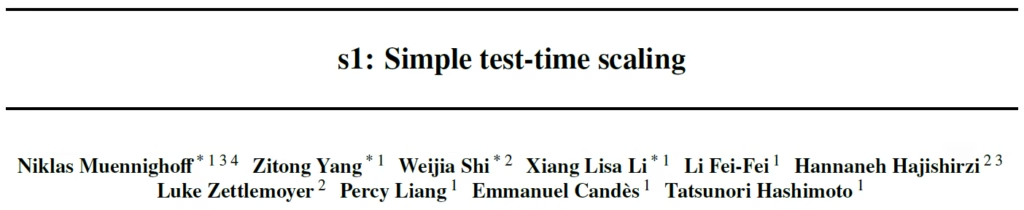

Overview of the s1 Approach

The s1 approach starts with a pretrained model—Qwen2.5-32B-Instruct—and uses two key elements:

- Curation of the s1K Dataset

- A novel test-time scaling technique

The model’s name, s1, is intriguing for two reasons. First, its similarity to o1, with “s” possibly standing for “simple” or “Stanford.” Second, for the history fans reading this, S-1 is also the name of a supercomputer developed at Stanford in the 1970s, which can still be viewed at the Computer History Museum in Mountain View.

s1 Ingredient 1: The s1K Dataset

The first key element of the s1 approach is the creation of the s1K dataset, a carefully curated dataset of 1,000 high-quality samples. Here’s how the researchers built it:

Initial Collection

They started with 59,000 samples from diverse sources, many focusing on math-related domains. The sources include:

- NuminaMATH: Mathematical problems from online platforms.

- AIME and OmniMath: Additional math problem repositories.

- AGIEval: Standardized test questions, such as SAT samples.

- OlympicArena: Science problems spanning biology, chemistry, physics, and more.

- Stanford Exams: Probability problems from exams in Stanford.

- Interview Questions: Quantitative trading interview questions.

These samples include questions, reasoning traces, and solutions, generated using Google Gemini Flash Thinking API.

Stage 1: Quality Filtering

Low-quality samples (e.g., those with formatting issues) were filtered out, reducing the dataset to 54,000 samples.

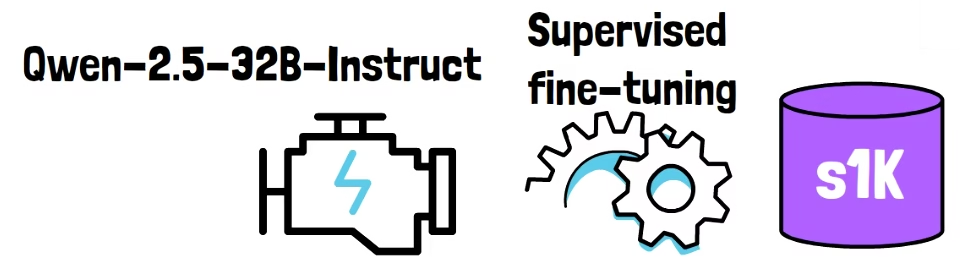

Stage 2: Difficulty Filtering

In this stage, the researchers filtered out questions that were too easy. Each sample was passed through two LLMs—Qwen2.5-7B-Instruct and Qwen2.5-32B-Instruct. A third model, Claude 3.5 Sonnet, assesses the correctness of the responses against the reference solution. If either of the two models solves the question correctly, the sample is filtered out. Only if both models got it wrong, the sample is kept. Using two models instead of one reduces the likelihood of an easy sample slipping through the filtering process due to a rare mistake by one of the models. This filtering stage keeps approximately 25,000 samples.

Stage 3: Diversity Filtering

This stage has two steps. In the first step, we cluster the samples into categories using a LLM. The categories include different topics in math as well as other sciences such as biology, physics, and economics. Given the clustered samples, we sample a similar number of samples from all domains.

An interesting note is that the sampling is done with a preference for samples with longer reasoning traces, as this indicates that the sample is more complex. Finally, after this stage, we end up with the s1K dataset.

Similarity with LIMA

This approach of using a small dataset to align the model reflects the Superficial Alignment Hypothesis, introduced in Meta’s paper, less is more for alignment. The hypothesis states that a model’s knowledge and capabilities are mostly acquired during pretraining. Therefore, the reasoning capability is already present in the pretrained model, and a small, high-quality dataset like s1K is sufficient to activate and refine it.

s1 Ingredient 2: Test-Time Scaling with Budget Forcing

The second key element of the s1 approach is budget forcing, a novel test-time scaling method. This technique allows researchers to control the model’s reasoning during the test phase by setting maximum and/or minimum thinking tokens.

Enforcing Maximum Tokens with s1

While the model is still in its reasoning phase, we force it to exit early by appending an end-of-thinking token delimiter and the phrase “Final Answer:”. This stops the model from continuing to reason, prompting it to provide its best answer at that point.

Enforcing Minimum Tokens with s1

If the model is about to generate the end-of-thinking token, we prevent it from doing so. Instead, we add a “Wait” token to the model’s reasoning trace. This intervention encourages the model to pause and reflect on its current reasoning before providing an answer, allowing it to generate better answers by thinking more deeply.

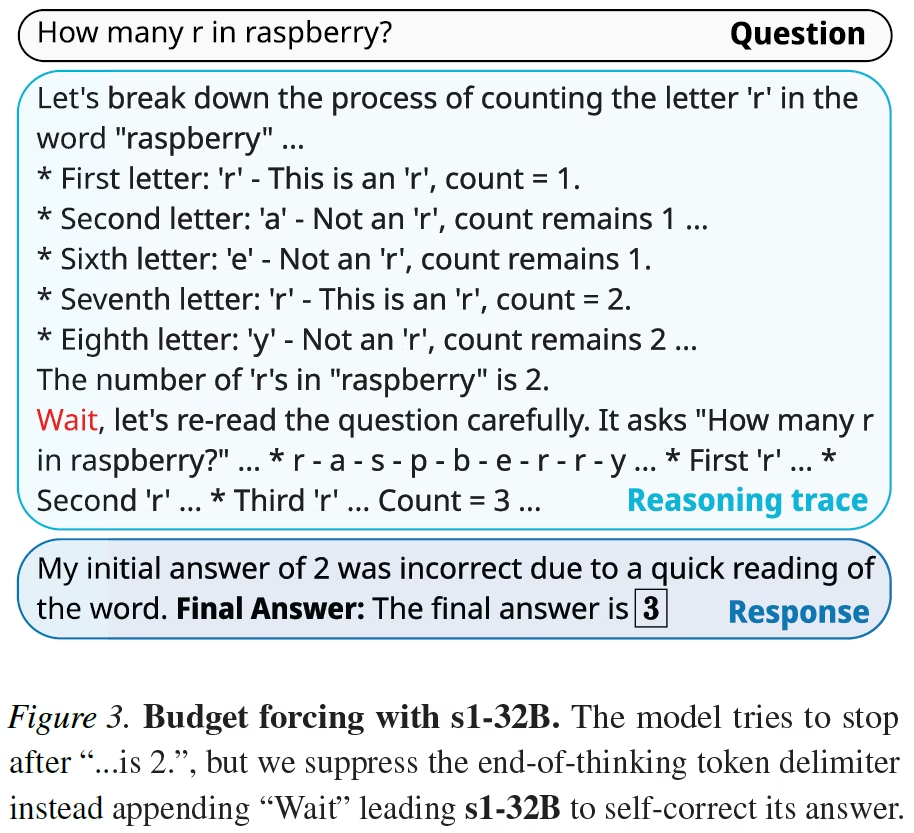

Example: “How Many R’s Are in Raspberry?”

The above example from the paper demonstrates s1’s test-time scaling approach. The model is asked the famous question, “How many r’s are in raspberry?”. At some point in the reasoning process, the model reaches the wrong conclusion that the answer is 2, and it was about to end its thinking process. At this point, a “Wait” token is appended, and the model continues its thinking. Ultimately, the model generates the correct answer, which is 3.

s1 Results

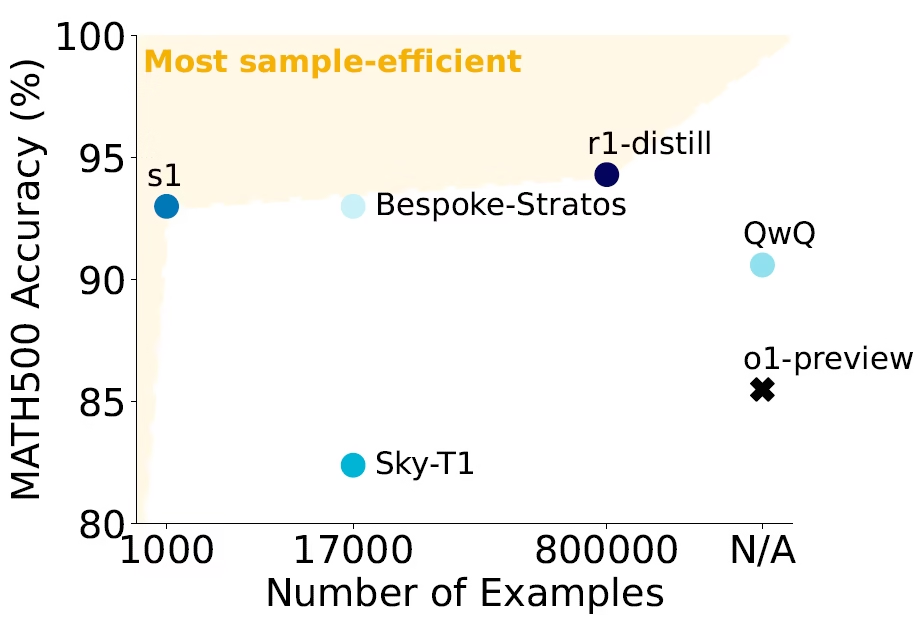

s1’s Sample Efficiency

The above figure from the paper compares the number of training samples used for fine-tuning (x-axis) and accuracy on the MATH500 dataset (y-axis). Models like o1-preview, where the number of samples is unknown, appear on the far right.

- s1 demonstrates impressive performance while being the most sample-efficient model.

- r1-distill, which also uses Qwen as the base model, achieves higher accuracy but requires 800 times more samples.

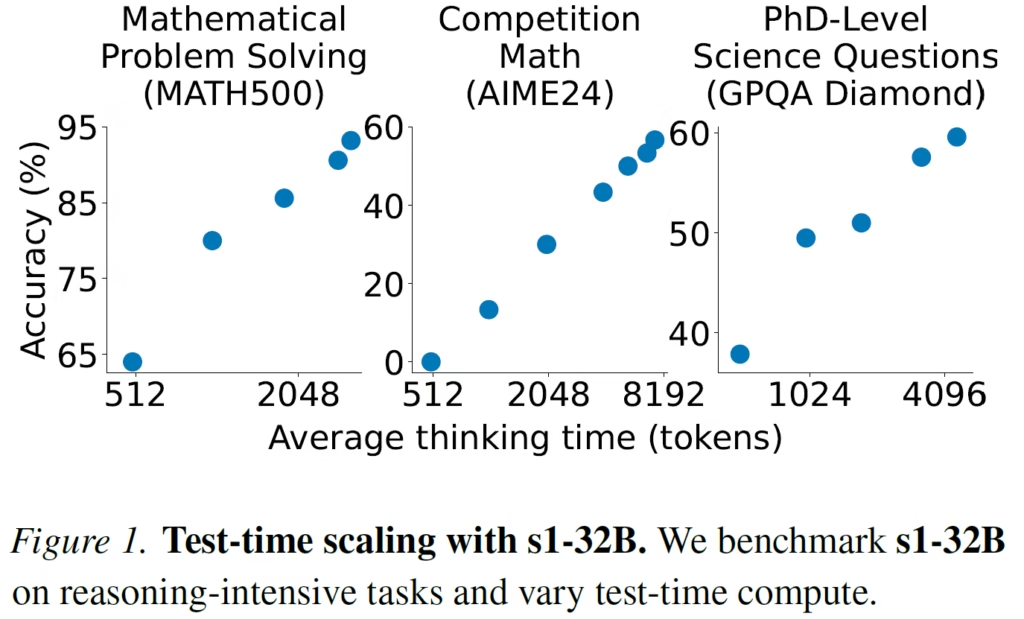

Budget Forcing Test-Time Scaling Trends

The above charts reveal scaling trends for budget forcing on three reasoning-intensive tasks.

- X-axis: Average thinking time, measured in tokens.

- Y-axis: Accuracy.

The trend is clear: as test-time compute increases, accuracy improves.

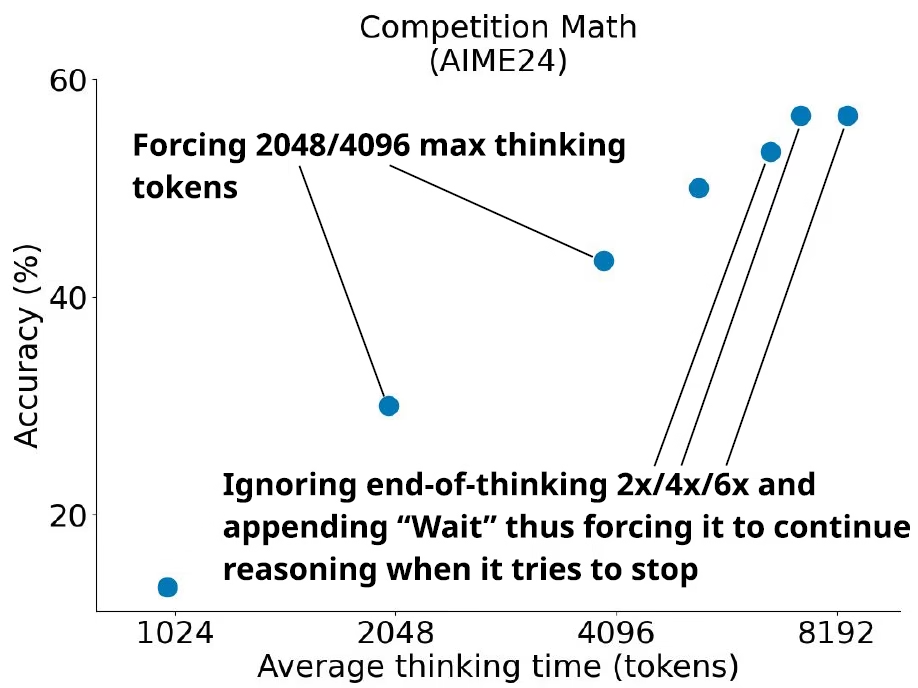

s1 Scaling Limits

The above figure is zooming into the scaling trend on one of the benchmarks. For the three points on the right, the researchers prevent the model from stopping its thinking by appending Wait. The difference between the three points is the number of times the model is forced to proceed thinking:

- For the left point, the model stops thinking after 2 Waits.

- For the middle point, after 4 Waits.

- For the right point, after 6 Waits.

Eventually, we see that the results are not improved anymore when extending from 4 to 6.

An interesting observation is that stopping the model from finishing too often can lead the model into repetitive loops instead of continued reasoning. Additionally, the context window of the underlying language model also constraints how much we can extend the thinking time.

References & Links

- Paper

- Code

- Join our newsletter to receive concise 1-minute read summaries for the papers we review – Newsletter

All credit for the research goes to the researchers who wrote the paper we covered in this post.