In this post, we break down the Mixture-of-Experts (MoE), one of the most fundamental AI concepts today, introduced in the paper Outrageously Large Neural Networks: The Sparsely-Gated Mixture-of-Experts Layer, co-authored by Geoffrey Hinton, famously known as the Godfather of AI.

Introduction

In recent years, large language models (LLMs) have driven remarkable advances in AI, with closed-source models like GPT-3 and 4, and open-source models like LLaMA 2 and 3, among many others. However, as we moved forward, these models got larger and larger and it became important to find ways to improve their efficiency. Mixture-of-Experts (MoE) to the rescue.

Introducing Mixture-of-Experts (MoE)

Researchers have successfully adapted a method called Mixture-of-Experts (MoE), which increases model capacity without a proportional increase in computational cost. The idea is that different parts of the model, which are the experts, learn to handle certain types of inputs, and the model learns when to use each expert. For a given input, the model uses only a portion of the experts, which makes it more compute-efficient.

A bit of “History”

If someone were to ask what was invented first, MoE or Transformers, how would you respond? Transformers were invented in June 2017, in the famous ‘Attention Is All You Need’ paper from Google. Meanwhile, Google also invented the Mixture-of-Experts (MoE) layer earlier that same year, in the paper titled ‘Outrageously Large Neural Networks: The Sparsely-Gated Mixture-of-Experts Layer’.

The Sparse Mixture-of-Experts Layer

As mentioned above, the idea with mixture of experts is that instead of having one large model that handles all of the input space, we divide the problem such that different inputs are handled by different segments of the model. These different model segments are called the experts. What does it mean?

Mixture-of-Experts High-Level Architecture

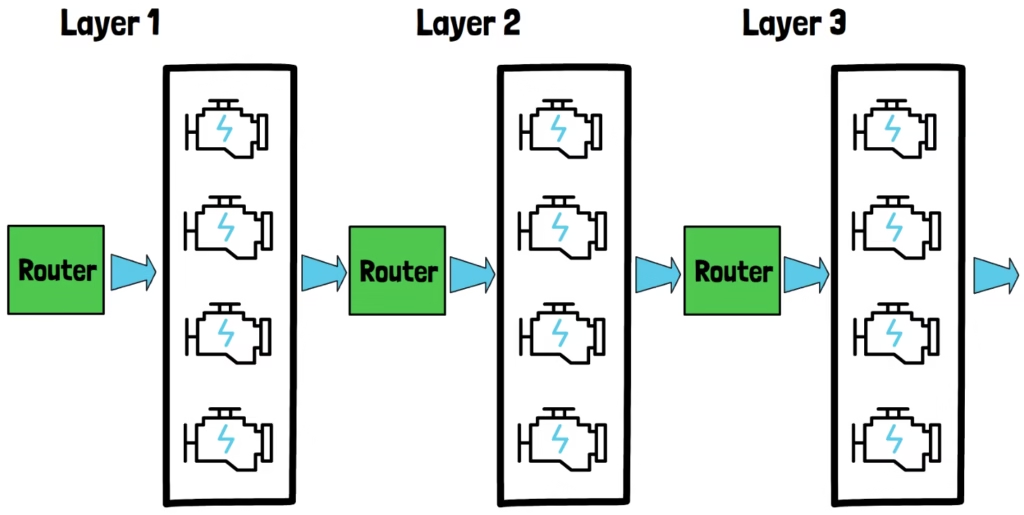

The MoE layer has a router component, also called a gating component, and an experts component, where the experts component is comprised of multiple distinct experts, each with its own weights.

The Routing Component

Given input tokens such as the 8 tokens on the left, each token passes through the router. The router decides which expert should handle each token and routes the token to be processed by that expert. More commonly, the router chooses more than one expert for each token, so in this example we choose 2 experts out of 4.

The Experts Component

The chosen experts yield outputs for the input, which we combine together. These experts can be smaller than if we would use one large model to process all tokens, and they can run in parallel and not all of them need to run for each input, so this is why the computational cost is reduced.

Repeating The Process For All Tokens

We repeat this flow for each input token, so the second token also passes through the router, which can choose different experts to activate. For the input prompt, the model handles the tokens together and we do not do this one after the other as shown in this example.

Multiple MoE Layers

We’ve discussed a single MoE layer, but in practice, there are multiple layers. Therefore, the outputs from one MoE layer propagate to the next layer in the model.

MoE Layer – Paper Diagram

Let’s now also review a figure from the paper which presents the MoE layer. Since this is from before the Transformers era, the model used here is a recurrent language model.

At the bottom we can see the input to the MoE layer which is provided from the previous layer. The input first passes via the gating network which decides which experts should process the input. We observe that there are n experts and we use two of them.

Weighted Sum Of The Experts Output

Another role of the gating network is not only to decide which experts to use, but also to determine the weight of each expert output. Finally, we combine the chosen experts’ outputs based on the gating network output. This combination creates a weighted sum of the experts’ outputs, which we then forward to the next model layer.

Final Note About Training

The different MoE layers, the gating components and the experts, are all trained together as part of the same model.

Conclusion

In summary, Mixture-of-Experts (MoE) offers a revolutionary approach to enhancing the efficiency and scalability of large language models. By leveraging specialized experts within the model, MoE reduces computational costs while maintaining high performance. This architecture not only optimizes resource usage but also paves the way for more sophisticated AI systems capable of handling diverse tasks and inputs.

As AI continues to evolve, Mixture-of-Experts stands out as a pivotal innovation, contributing significantly to the advancement of AI research and applications. The ongoing development and adoption of MoE underscore its importance in building the next generation of intelligent systems.

Recommended Reading

To dive deeper into further AI research to improve the Mixture-of-Experts (MoE) method, check out the following highly recommended papers that explore various aspects and advancements in this field:

- Mixture-of-Experts Offloading: Explore how offloading experts’ weights to manage limited memory can improve inference efficiency in Mixture-of-Experts models. Our Review.

- Soft Mixture-of-Experts: A method by Google that mixes results from all experts. Our Review.

- Mixture of Nested Experts: Explore the concept of nested experts, where different experts share weights, allowing the model to use a subset of its weights for some inputs and all of its weights for important inputs. Our Review.

- Mixture-of-Agents: Inspired by Mixture-of-Experts, this paper explores using full-blown LLMs as experts. Our Review.

Links & References

- Paper – https://arxiv.org/abs/1706.03762

- Video – https://youtu.be/kb6eH0zCnl8

- Join our newsletter to receive concise 1 minute read summaries of the papers we review – https://aipapersacademy.com/newsletter/

All credit for the research goes to the researchers who wrote the paper we covered in this post.