In this post, we break down Titans, a new model architecture by Google, which has sparked curiosity about its potential to become the new backbone for Large Language Models (LLMs).

Introduction

In 2017, Google published the groundbreaking paper titled “Attention is All You Need“, which sparked the AI revolution we experience today. This paper introduced Transformers, which have become the backbone of most, if not all, top large language models (LLMs) out there today.

The Strength And Cost Of Transformers

The strength of Transformers is largely attributed to their use of attention. Given a sequence of tokens, Transformers process the entire sequence at once, capturing dependencies across the entire sequence using the attention mechanism to provide high-quality output. However, this powerful capability comes with a cost, a quadratic dependency on the input sequence length. This cost poses limitations on the ability of Transformers to scale up to longer sequences.

Recurrent Models

On the other hand, recurrent models do not suffer from the same quadratic dependency. Instead of processing the entire sequence at once, they do it gradually, compressing the data from the sequence into a compressed memory, also called the hidden state. This linear dependency contributes to the enhanced scalability of recurrent models. However, recurrent models have not proven to be as performant as Transformers.

Introducing Titans

In this post, we dive into a new paper by Google Research titled “Titans: Learning to Memorize at Test Time“. The paper introduces a new model architecture called Titans, showing promising results while mitigating the quadratic cost issue of Transformers. The Titan models are designed with inspiration from how memory works in the human brain. An interesting quote from the paper mentions that memory is a fundamental mental process and is an inseparable component of human learning. Without a properly functioning memory system, humans and animals would be restricted to basic reflexes and stereotyped behaviors.

Deep Neural Long-Term Memory Module

A key contribution of the Titans paper is the deep neural long-term memory module is. Let’s start by understanding what it is, and afterwards, we’ll understand how it is incorporated into the Titans models.

Unlike in recurrent neural networks, where the memory is encoded into a fixed vector, the neural long-term memory module is a model, a neural network with multiple layers, that encodes the abstraction of past history into its parameters. To train such a model, one idea is to train the model to memorize its training data. However, memorization is known to limit models’ generalization and may result in poor performance.

Memorization Without Overfitting

The researchers designed a fascinating approach to create a model capable of memorization, but without overfitting the model to the training data. This approach is inspired by an analogy from human memory. When we encounter an event that surprises us, we are more likely to remember that event. The learning process of the neural long-term memory module is designed to reflect that.

Modeling Surprise

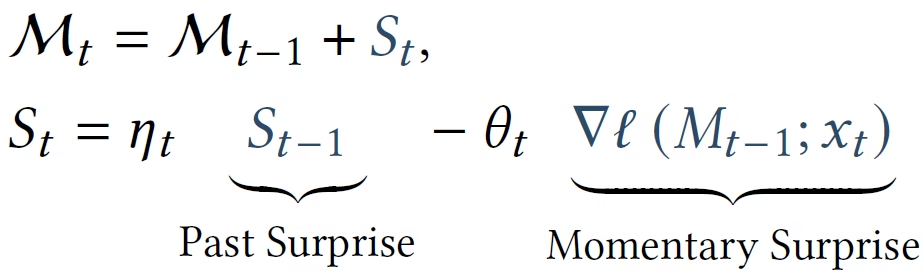

We can learn about how the researchers modeled surprised using the above definition from the paper. Mt represents the neural long-term memory module at time t. It is updated using its parameters from the previous timestep, and a surprise element modeled as a gradient. If the gradient is large, the model is more surprised by the input, resulting in a more significant update to the model weights.

However, this definition is still not ideal, since the model may miss important information happening right after the surprising moment happened.

Modeling Past Surprise

From a human perspective, a surprising event will not continue to surprise us through a long period of time, although it remains memorable. We usually adapt to the surprising event. Nevertheless, the event may have been surprising enough to get our attention through a long timeframe, leading to memorizing the entire timeframe.

We can learn about the improved modeling from the above definitions from the paper that include modeling of past surprise. Now, we update the weights of the neural long-term memory using the state of the previous weights, and a surprise component, noted as St. The surprise component is now measured over time, and is composed of the previous surprise, with a decay factor and the same momentary surprise we already discussed in the previous section.

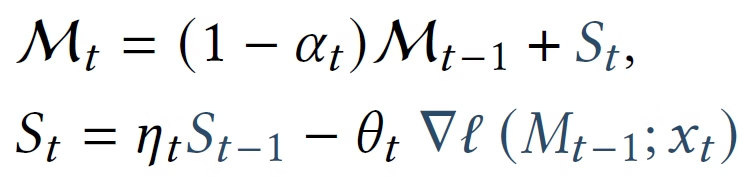

Another factor that is not modeled yet is forgetting.

Modeling Forgetting

When dealing with very large sequences, millions of tokens for example, it is crucial to manage which past information should be forgotten. We can see the final modeling in the above definitions from the paper. These definitions are identical to the ones in the previous section, except that we add an adaptive forgetting mechanism, noted with alpha and is also called a gating mechanism. This allows the memory to forget the information that is not needed anymore.

The Loss Function

The loss function is defined with the above equations. The loss aims to model associative memory, by storing the past data as pairs of keys and values, and teach the model to map between keys and values. Similarly to Transformers, linear layers project the input into keys and values. The loss then measures how well the memory module learns the associations between keys and values.

To clarify, the model does not process the entire sequence at once, but rather processes it gradually, accumulating memory information in its weights.

Titans Architecture #1 – Memory as a Context (MAC)

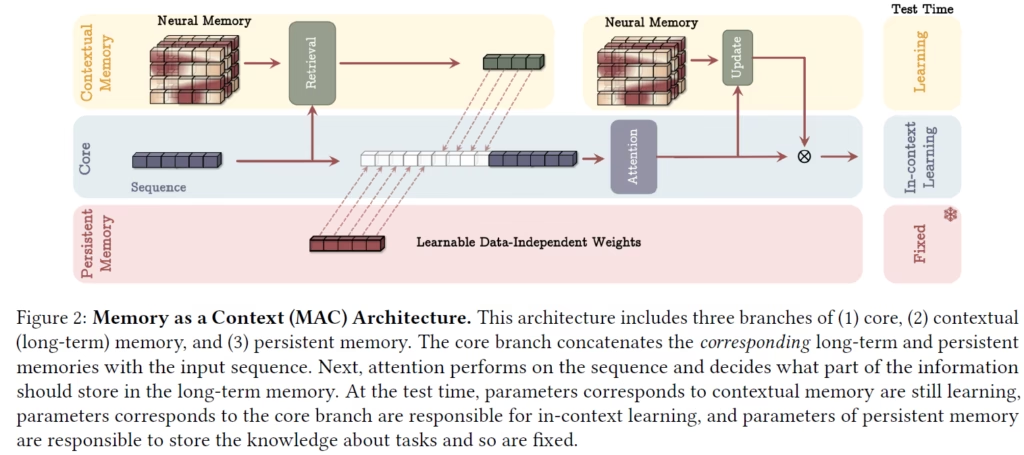

The paper suggests a few different architectures. The first is called Memory as a Context (MAC). We can learn about it using the following figure from the paper.

The model consists of 3 components: persistent memory, core, and contextual memory. Given a long input sequence, we chunk it into smaller sequences. The sequence shown at the Core component is actually a chunked sequence.

Persistent Memory

We first incorporate persistent memory by adding learnable data-independent weights to the beginning of the sequence. These are in practice learnable tokens that store global information which is not impacted by the input. The sequence is also passed via an attention block and adding the learnable tokens at the beginning of the sequence helps avoid attention drain, where due to bias, attention weights are highly active toward initial tokens. Adding learnable meta tokens also proved successful in Hymba by Nvidia, which we covered not long ago.

Contextual Memory

Contextual memory consists of the deep neural long-term memory module, since it depends on the context. We retrieve memory tokens from the long-term memory using a forward pass of the neural long-term memory module, with the input chunked sequence as input. Since the neural memory keeps being updated even in test time, when we process a chunk of a long sequence, the neural memory is already updated with information from previous chunks of the same sequence. The retrieved memory tokens are added to the input sequence, after the persistent memory tokens.

The Core Component

The core component brings it all together, using an attention block that is fed with the extended sequence, that contains information from the persistent memory, the contextual memory and the input itself. This way the model can leverage multiple memory types. The output from the attention block is used to update the neural memory. The attention mechanism helps to determine for a given input, whether the long-term memory should be used or not. Additionally, attention helps the long-term memory to store only useful information from the current context. The final output is determined based on the attention block output and the output from the neural memory.

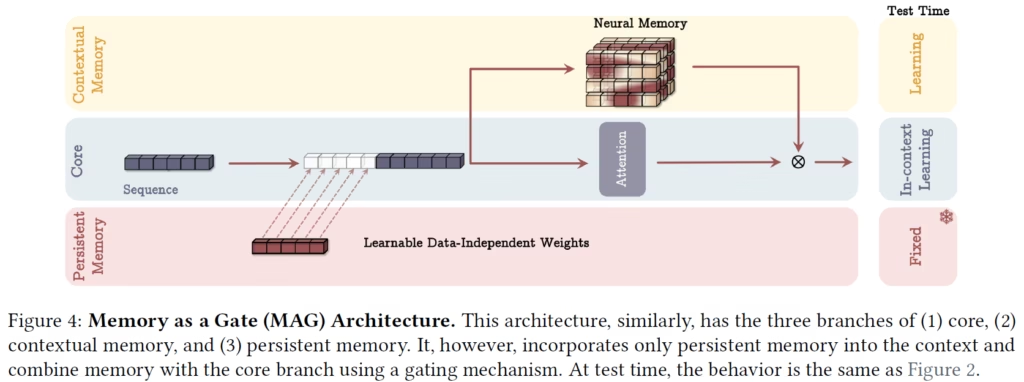

Titans Architecture #2 – Memory as a Gate (MAG)

The next Titan architecture version is called Memory as a Gate (MAG), and it also has a great illustration from the paper we can see below.

In this version, we also have 3 branches that represent persistent memory, core, and contextual memory. A difference from the previous version, is that the sequence is not chunked. The input sequence here is the full input sequence. This is made possible by utilizing sliding window attention in the attention block. The persistent memory learnable weights are again added to the beginning of the sequence. But unlike before, the neural memory does not contribute data into the context for the attention block. Instead, the neural memory is updated from the input sequence and its output is combined with the core branch using a gating mechanism.

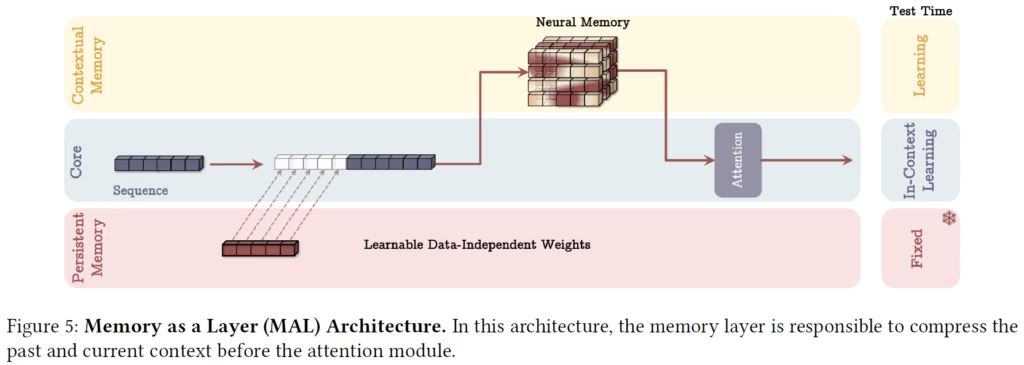

Titans Architecture #3 – Memory as a Layer (MAL)

The third variant of Titan architecture is called Memory as a Layer (MAL). We can learn about it using the following figure from the paper.

Similarly to the previous version, the sequence is not chunked, and we use sliding window attention.

In this version, we use the neural memory as a model layer, where the input sequence, together with the learnable weights, first pass via the neural memory, and afterwards via the attention block.

This design allows stacking layers of multiple neural memory modules and attention blocks, similar to how Transformer layers are usually stacked. However, the sequential design limits the power of the model by the power of each of the layers.

This is again a similar observation to the Hymba paper where different components placed in parallel, rather than sequential, for the same reason.

Titans Architecture #4 – LMM

The last Titans variant is called LMM, which is identical to the previous version, but without an attention block, solely relying on the memory module.

Results

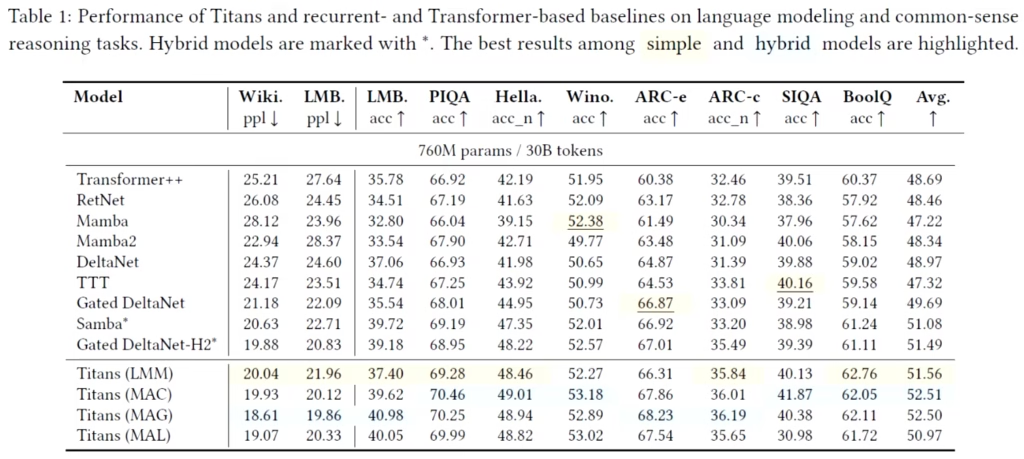

Language Tasks Comparison

In the above table, we can see comparison of Titan models with baselines on language modeling and commonsense reasoning tasks. Best results are marked in blue for hybrid models, that utilize both recurrent and attention mechanisms, and in yellow the best results for non-hybrid models.

Titan LMM achieves the best results comparing to other non-hybrid models, showcasing the power of the neural long-term memory module. Among the hybrid models, MAC Titan achieves the overall best results, where Memory as a Gate Titan is slightly behind it.

Needle in a Haystack

Another interesting comparison is for the needle in a haystack task. We can see the results in the above table from the paper. In this task, the models need to retrieve a piece of information from a very long text, thus measuring the actual effective context length of models.

The numbers in the title show the length of the evaluated sequences. We see a clear win for Titans comparing to baselines as the sequence length is increased on all three benchmarks.

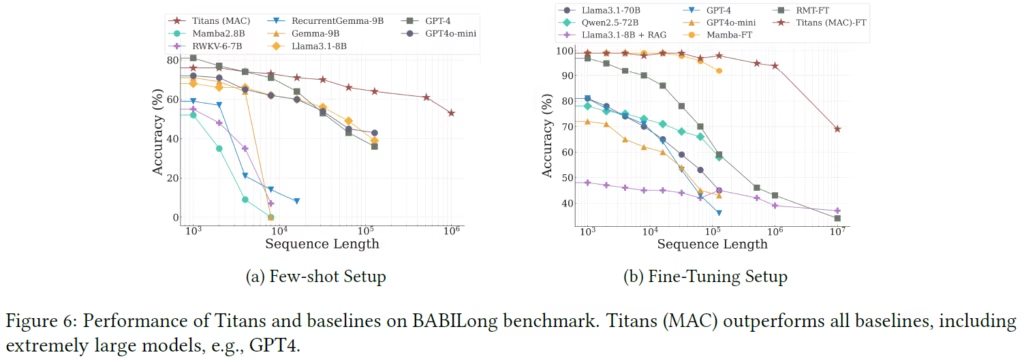

BABILong Benchmark

Another interesting result can be seen in the above figure, that shows comparison of Titans with top models on the BABILong benchmark. This is a harder benchmark for long sequences, in which the model needs to reason across facts distributed in extremely long documents.

On the x-axis we see the sequence length and on the y-axis we measure the accuracy of each model. The results of MAC Titan are shown in the red line, significantly outperforms other models on very long sequences!

Links & Resources

- Paper

- Join our newsletter to receive concise 1-minute read summaries for the papers we review – Newsletter

All credit for the research goes to the researchers who wrote the paper we covered in this post.