In this post we break down a recent Alibaba’s paper: START: Self-taught Reasoner with Tools. This paper shows how Large Language Models (LLMs) can teach themselves to debug their own thinking using Python.

Introduction

Top reasoning models, such as DeepSeek-R1, achieve remarkable results with long chain-of-thought (CoT) reasoning. These models are presented with complex problems as input, and through an extended reasoning process, also known as long CoT, the models can repeatedly reevaluate their solutions before providing a final answer. However, when it comes to very complex problems, even these models may struggle to arrive at the correct solution. One possible reason is that they rely solely on their internal reasoning processes.

But what if a model could leverage external tools, like Python, as part of its reasoning? For example, in a coding task, the model could validate its solution by running tests and then refine its answer based on the test results. Interestingly, OpenAI reported that they trained their o1 model to use external tools, particularly for writing and executing code in a secure environment. However, they didn’t share technical details on how this is achieved.

The paper we review today may bridge that gap, as it demonstrates how LLMs can integrate Python into their reasoning process. Let’s now dive into its details.

Hint-Infer – LLMs Inference Using Tools

We start by exploring Hint-infer, which is the name of the inference process of a LLM using external tools. At the middle of the Hint-infer illustration, we have a LLM. The one used in this paper is QwQ-32B, a large reasoning language model. It’s worth noting that this is the 2024 version of the model, not to be confused with the newer version released just days before this paper.

Tackling Math and Coding Problems

The problems tackled in the paper are math and code problems. Given such a problem, the model generates a CoT reasoning. However, at certain points during the reasoning process, hints are injected to enable the model to leverage Python as part of the reasoning process.

Strategic Hint Injection

These hints are strategically injected after tokens that indicate that the model may be questioning its own reasoning or considering alternative approaches, such as ‘alternatively’ or ‘wait’. Additionally, hints are also injected when the model is about to stop its reasoning process, encouraging it to extend its thinking.

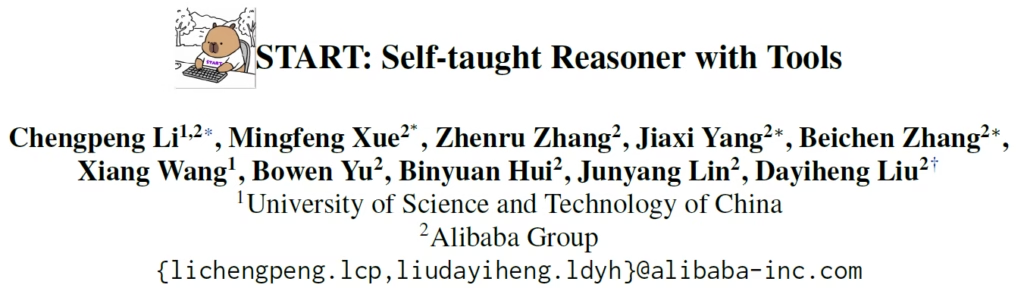

The hints are taken from a collection crafted by the researchers, called the Hint-Library. We can see a few examples for how these hints look like in the below figure from the paper. On the left, there’s a debug hint, used for coding tasks. The debug hint encourages the model to write code that include inputs from test cases provided as part of the problem, and to output the results for these test inputs. Then, the model is also encouraged to assess the correctness of the code by comparing the outputs with the expected outputs, and refine the solution if needed.

On the right, we see examples of hints for math problems, that encourage the model to adopt various problem-solving strategies, such as reflection, logical verification, and exploring alternative methods.

Now, given that the model generates python code as part of its reasoning, a code interpreter is used to execute the code. The outputs from that code are then fed back into the reasoning process.

Python Execution and Iterative Improvement

This process can repeat multiple times as part of the reasoning process, allowing the model to iteratively improve its reasoning, until finally providing the final answer.

To become an expert in using python as part of its reasoning process, the base model undergoes a two-phase training which we will review soon. By the end of the training process, the trained model is called START. Before diving into the training process, to understand the inference process better, let’s take a look at another example from the paper.

Python Execution During Inference – Example

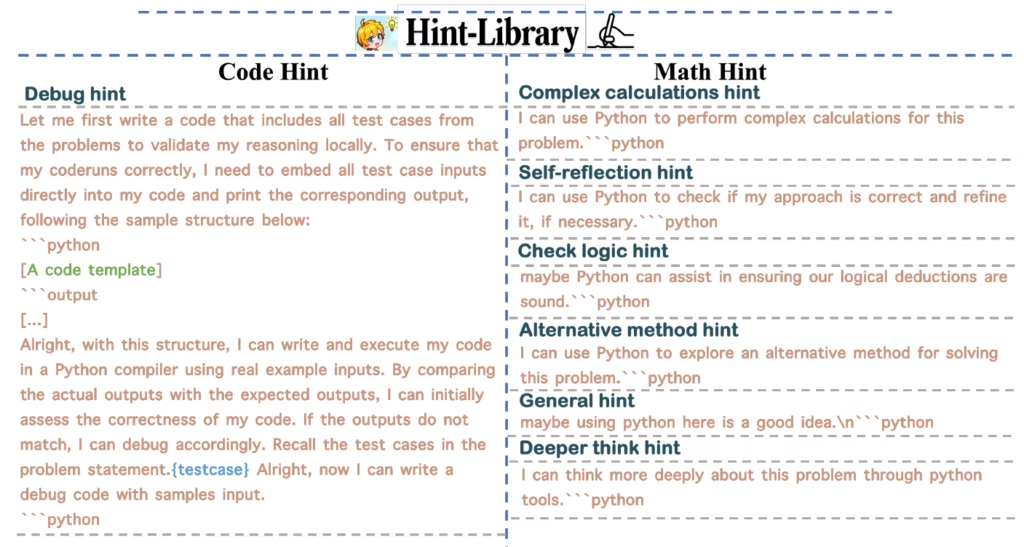

In this example, we see a challenging coding problem, and a comparison between QwQ without external tools, and START.

On the left, we see the response of QwQ, which generates a long chain-of-thought reasoning. While it attempts different approaches, it remains constrained by its internal reasoning process and ultimately produces incorrect code.

On the right, we see the response of the START model, where it retains the cognitive framework of its base model, but integrates multiple code executions. The details of the example problem are not critical to understand, but note that there are two examples attached to the problem, which the model is using to asses the solution correctness, reaching wrong results in the first two attempts, and get it right in the third one, which helps it to output a correct solution at the end.

Training the START Model: Two Phases

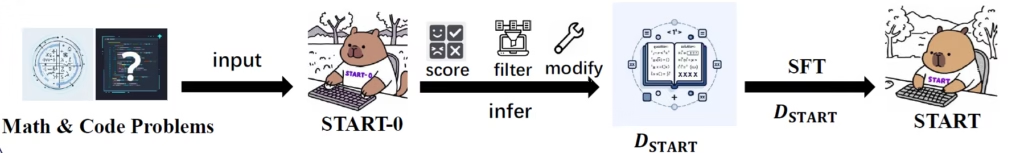

Phase 1: Hint Rejection Sampling Fine-Tuning (Hint-RFT)

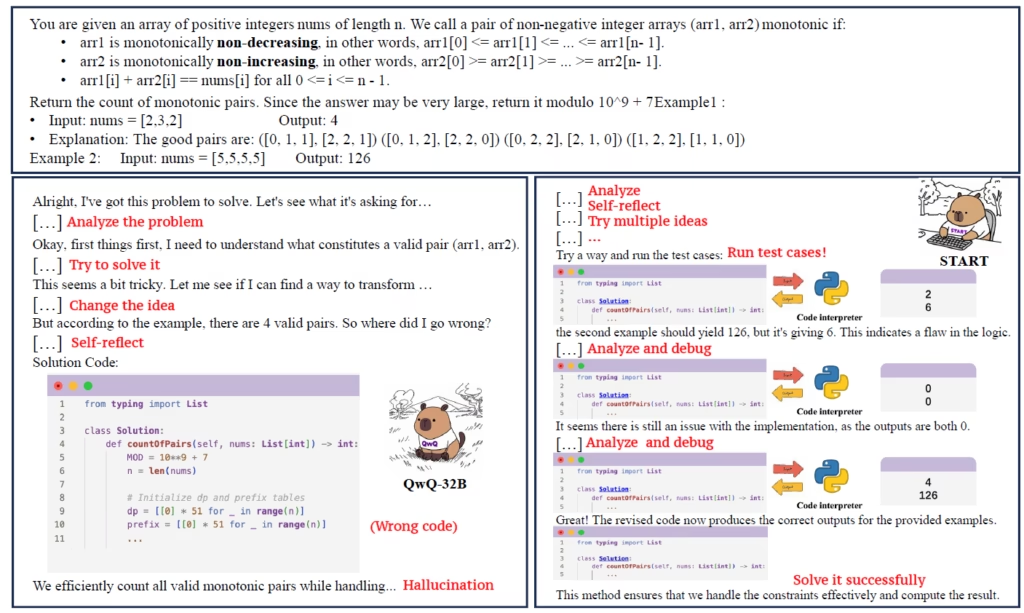

We can understand this phase using the above illustration from the paper. The upper part illustrates the process to create a seed dataset for training.

The dataset curation is done using a method called rejection sampling. Rejection sampling is a process where multiple responses are generated for the same problem, and only those meeting specific criteria are selected.

The process starts with a large collection of math and coding problems from various sources. For each sample, the Hint-infer process which we’ve described earlier is used to create multiple responses.

These responses are then evaluated using several conditions. Notably, we only keep samples where the Hint-infer process was able to solve the problem correctly, but standard inference without tools does not. Additionally, to filter out low-quality responses, responses with repetitive patterns are removed. The remaining valid responses are scored, though the paper doesn’t elaborate on the scoring rules. A single response, along with the original question, is added to the curated seed dataset.

By the end of this step, the researchers produced a seed dataset of 10,000 math samples and 2,000 code samples.

The lower part of the illustration describes that the base model is trained on the seed dataset using supervised fine-tuning. The resulting model from this phase is referred to as START-0.

Phase 2: Rejection Sampling Fine-Tuning (RFT)

The second training phase is called RFT. As the name implies, this phase also incorporates rejection sampling fine-tuning, where a curated dataset is created, followed by fine-tuning the model on that dataset. The process is illustrated in the above figure from the paper.

In this phase, the researchers reprocess the entire collection of samples, and not only the seed dataset generated earlier. Each sample is handled using the START-0 model to produce multiple responses. These responses are evaluated using various rules similar to the first phase, and some are also manually modified to remove unreasonable content.

For each sample added to the curated dataset, only one response is retained. Finally, we end with a dataset that contains 40,000 math problems and 10,000 code problems.

START-0 is then fine-tuned on that dataset, resulting in the final START model.

Results: How Does START Perform?

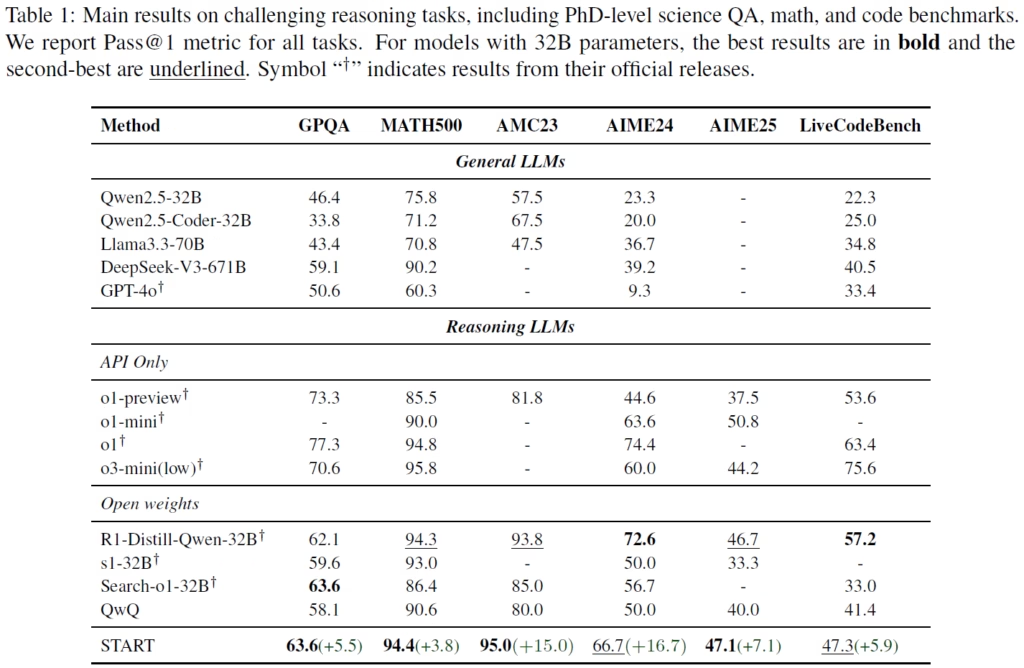

The main results presented in the paper are summarized in the above table. The table shows the pass@1 score, which evaluates how often a model generates a correct answer on its first attempt. The benchmarks include the challenging PhD-level GPQA, as well as math and coding benchmarks.

At the bottom of the table, we can see the results of the START model, along with its improvement over the base model. Impressively, the START model consistently improves upon the base model and achieves impressive results compared to other strong models.

Notably, across all benchmarks, it outperforms other baselines of a similar size or ranks second when it doesn’t. This includes the distilled R1 model and the recent Stanford s1 model. In most cases, it even outperforms o1-preview and o1-mini. And this is while using the older version of QwQ.

It will be fascinating to see how this approach performs when applied to the newer version of the QwQ model or larger-sized models.

References & Links

- Paper

- Join our newsletter to receive concise 1-minute read summaries for the papers we review – Newsletter

All credit for the research goes to the researchers who wrote the paper we covered in this post.