In this post we breakdown a recent paper from Microsoft titled Reinforcement Pre-Training (RPT), that introduces a simple yet powerful idea to significantly increase reinforcement learning training scale.

Introduction

Reinforcement learning (RL) has played a crucial role in shaping the highly capable large language models (LLMs) that we rely on today. From reinforcement learning from human feedback (RLHF), which is used to align models with human preferences, to reinforcement learning with verifiable rewards (RLVR) on code and math datasets that enhance long-form reasoning, it’s clear that RL is a powerful tool in the AI landscape.

Yet, despite its success, reinforcement learning has not yet been widely integrated into the pre-training stage of large language models.

Microsoft’s Reinforcement Pre-Training (RPT) introduces a novel mechanism, called next-token reasoning, to apply reinforcement learning directly on the pre-training data.

To understand this paper, let’s start with a quick recap of how LLMs are typically trained and how RPT comes in.

LLM Training Recap

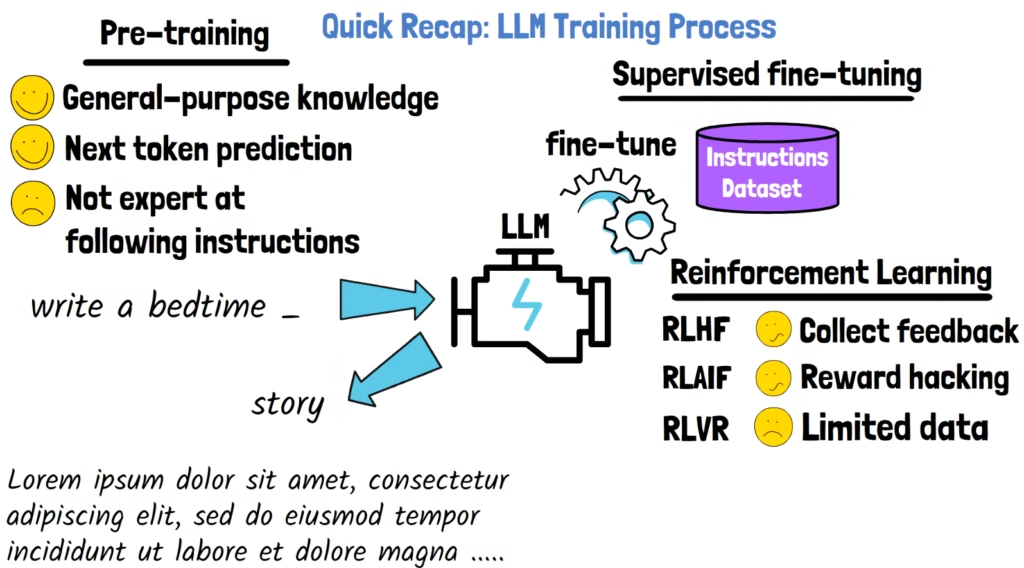

There are three main stages in the training process of LLMs.

- Pre-Training: In this stage, the model is trained on massive amounts of text and code to learn general-purpose knowledge. This step helps the model to become proficient at predicting the next token in a sequence. For example, given an input like “write a bedtime _,” the model would be able to complete it with a reasonable continuation, such as “story”. However, after the pre-training stage, the model still struggles to follow instructions. To address this, we have the second stage.

- Supervised Fine-Tuning: In this stage, the model is fine-tuned on an instruction dataset. This step teaches the model how to respond more helpfully, but it is not the focus of this paper.

- Reinforcement Learning: In practice, LLMs continue to be improved in a third stage, which is where reinforcement learning typically comes in. Below are few common methods.

Reinforcement Learning Methods For LLMs

- Reinforcement Learning from Human Feedback (RLHF) – In this method, the model learns from responses scored by humans. However, collecting high-quality human feedback at large scale, especially for complex tasks, is expensive and time-consuming.

- Reinforcement Learning from AI Feedback (RLAIF) – In this method, the model provides the feedback. For reinforcement learning from AI feedback to work well, a highly capable model is needed so the feedback it provides will be of high-quality.

- Reinforcement Learning with Verifiable Rewards (RLVR) -One concern with RL is reward hacking, when the model discovers a loophole or unintended way to maximize the reward, which does not align with the desired goal. This concern is mitigated quite well using RLVR. In this setup, the reward is determined using predefined rules. For example, given a math question, the reward for the model’s answer is determined based on whether the answer is correct. Starting with DeepSeek-R1, RLVR has become popular for developing reasoning capabilities in LLMs. However, this kind of training relies on domain-specific datasets where verifiable rewards are available.

Reinforcement Pre-Training (RPT) Motivation

After the LLM training recap, we know that RL is typically applied in post-training of LLMs. Another important observation is that the size of the pre-training data, which consists of huge text corpus, is orders of magnitude larger than the size of data used for RL, which either relies on feedback data, or domain-specific datasets.

What if we could somehow use all that pre-training data for reinforcement learning?

That’s exactly what’s being proposed by Reinforcement Pre-Training (RPT). The key idea is to reframe the next-token prediction task as a new RL task, called next-token reasoning.

It is important to note that RPT does not replace the original pre-training. Instead, it introduces an additional stage to the training process, that aligns the pre-training more closely with RL. This should be a better starting point for subsequent post-training reinforcement learning.

RPT’s Key Ingredient: Next-Token Reasoning

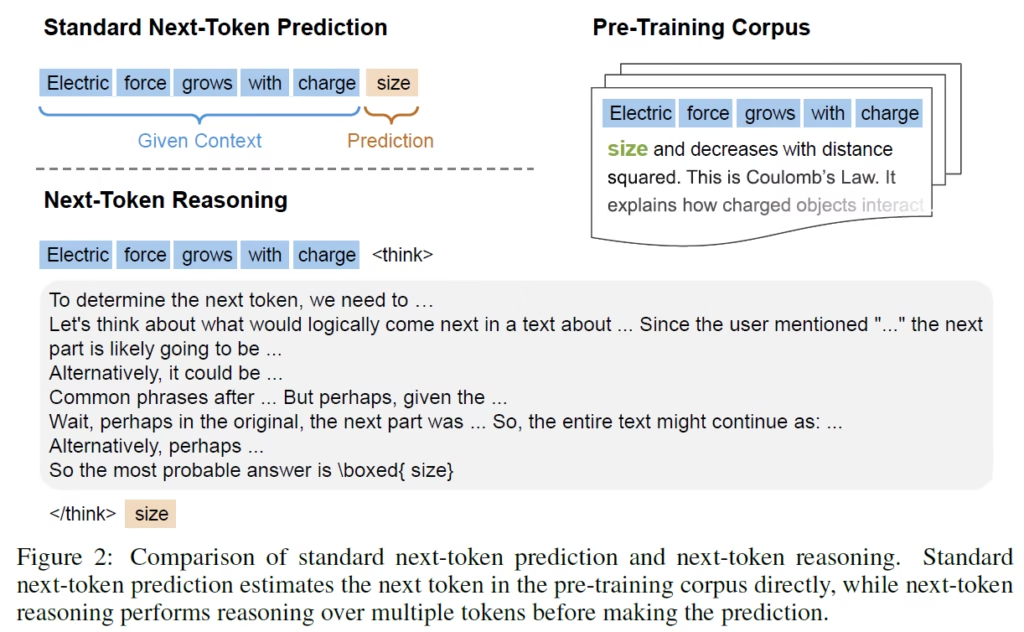

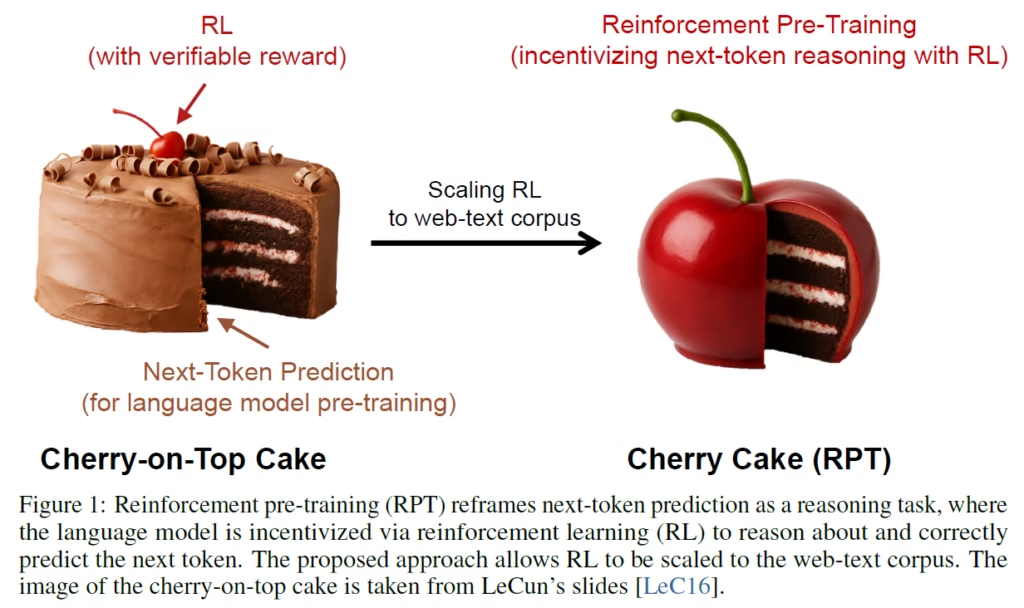

To understand what the authors mean by next-token reasoning, let’s look at the above figure from the paper.

- In the top right, we see a snippet of text from the pre-training corpus.

- On the top left, we see the standard next-token prediction setup that is used in traditional pre-training, where given some context, the model simply predicts the next token.

- On the bottom we see how next-token reasoning works. Instead of directly predicting the next token, the model is asked to first generate a chain-of-thought reasoning sequence, and then return its final prediction for the next token. This approach transforms each token prediction into a reasoning task.

RPT Training With RL

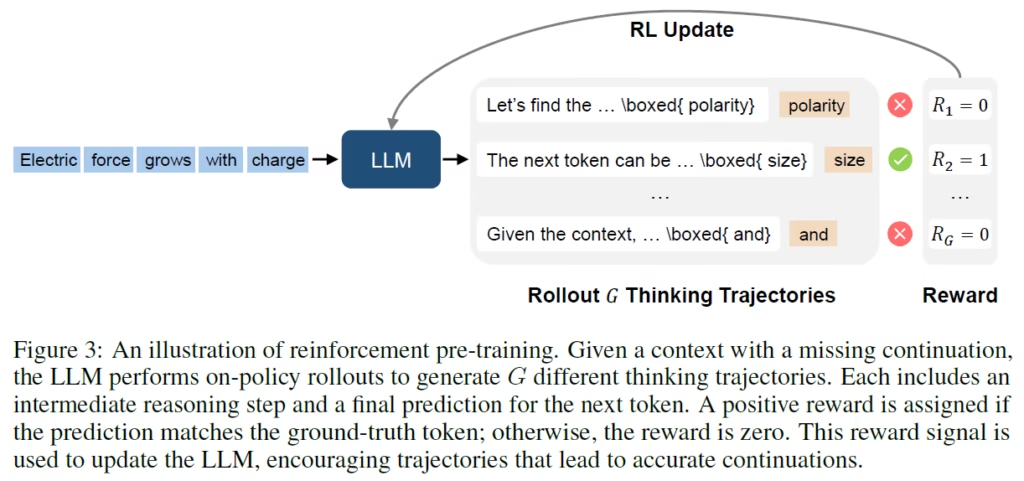

We can learn how reinforcement learning is applied with next-token reasoning using the above figure. The researchers apply a RL algorithm called Group Relative Policy Optimization (GRPO), the same method used to train DeepSeek-R1. We covered GRPO in a dedicated post here.

Given a context, we sample multiple outputs from the LLM. Specifically, for next-token reasoning, this gives us a group of outputs with different reasoning traces and different predictions for the next token.

To train the model, we use the actual next token from the pre-training data as the ground-truth label. This allows us to calculate a verifiable reward for each sampled output. There can be more than a single correct response, and the reward is calculated for each response based on the correctness of the predicted next token and relatively to the other responses in that group. The model is then updated to prefer the correct responses.

Scaling Up RL Data

One major benefit we get with Reinforcement Pre-Training (RPT) is the increase in scale of RL data. To illustrate that, let’s take a look at the above figure from the paper.

Without RPT, we can think of the pre-training dataset as a large cake, with a much smaller RL dataset as just a cherry on top. But, with RPT, using next-token reasoning, we now have a cherry cake. Meaning that the whole pre-training corpus becomes usable for RL.

Results

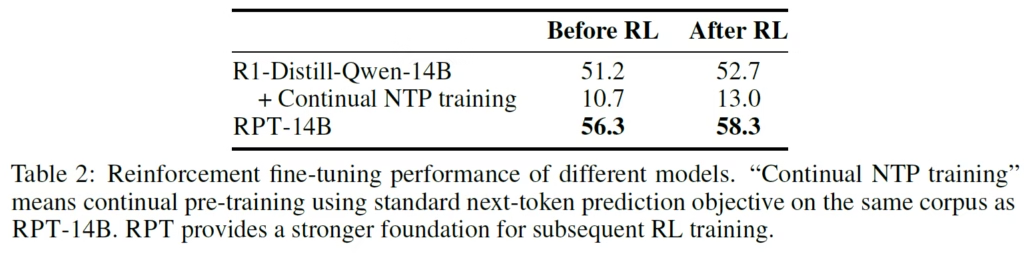

The researchers evaluate reinforcement pre-training using the OmniMATH benchmark, with R1-Distill-Qwen-14B as the base model.

OmniMATH is a benchmark designed to test large language models on Olympiad-level mathematical reasoning. It consists of competition-grade problems from various math sub-domains.

Next-token Prediction Accuracy

So, does reinforcement pre-training actually improve the model’s ability to predict the next-token?

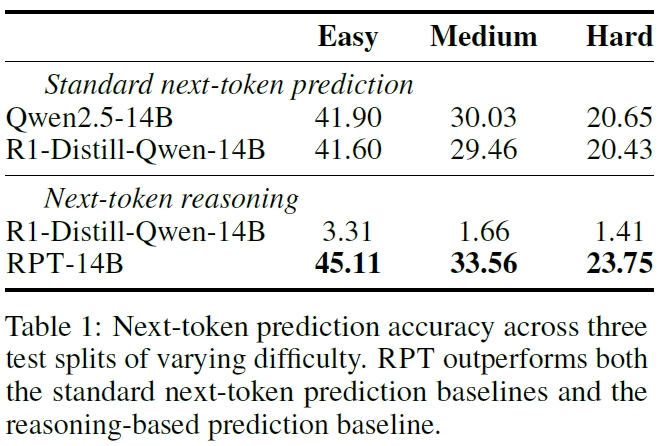

In the above table, we see the next-token prediction accuracy across three difficulty levels: easy, medium and hard. These levels are determined using another LLM, based on the entropy of its predictions. Higher entropy means the next token is harder to predict.

R1-Distill-Qwen-14B is the base model for the Reinforcement Pre-Training model, called RPT, shown at the bottom of the table. At the top, we see Qwen-2.5-14B, the base model for R1-Distill-Qwen-14B.

Across all difficulty levels, RPT outperforms the base models, showing a better next-token prediction accuracy using next-token reasoning.

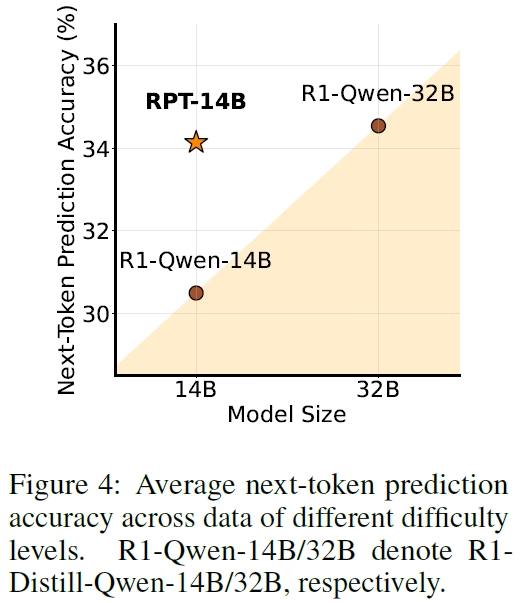

An impressive result is shown in the below figure from the paper. On the x-axis, we see the models’ size, and on the y-axis we see the next-token prediction accuracy. We see that RPT with 14 billion parameters achieves comparable performance to R1-Qwen-32B, a model more than twice its size.

RPT Scaling Trend

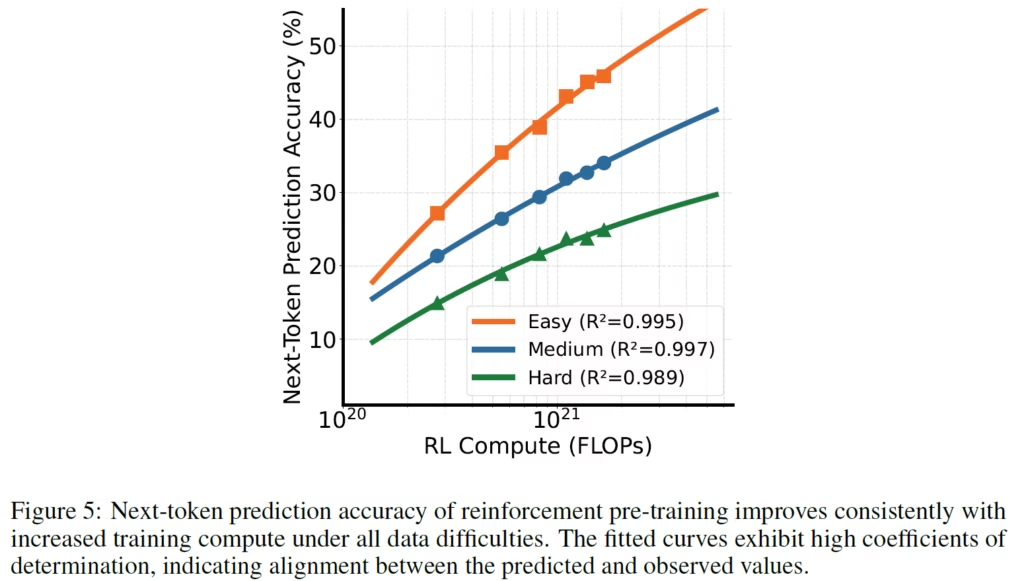

The above chart shows how Reinforcement Pre-Training scales with compute. The x-axis represents the training compute, and the y-axis shows the next-token prediction accuracy. The curves correspond to the different difficulty levels. We see a healthy scaling trend, whereas we allocate more compute, the accuracy is improved, across all difficulty levels.

Foundation For Subsequent RL

Another interesting question is whether reinforcement pre-training provides a stronger foundation for subsequent reinforcement learning.

In the above table, we see what happens when we continue to train both the base model, at the top row, and the RPT model, at the bottom row, on another RLVR dataset. Both models improve with this subsequent reinforcement learning. The improvement size is slightly larger for RPT, which also outperforms the base model by a large margin.

In the middle row, we see that when the base model is trained using next-token prediction objective on the same data, it drastically loses its reasoning capabilities.

References & Links

- Paper Page

- Join our newsletter to receive concise 1-minute read summaries for the papers we review – Newsletter

All credit for the research goes to the researchers who wrote the paper we covered in this post.