The race toward AGI is driven mainly by large language models (LLMs), and most of them are built on the Transformer architecture. But what if the road to AGI doesn’t go through Transformers at all?

In this post, we’ll explain a new model architecture called Hierarchical Reasoning Model (HRM), introduced in a recent paper of the same name. With just 27 million parameters, a fraction of today’s top reasoning models, and trained on only 1,000 examples, it managed to outperform leading LLMs on some of the hardest reasoning benchmarks.

Let’s dive in.

The Limits of Transformer-Based Reasoning

Current Transformer-based LLMs rely on chain-of-thought for reasoning.

- Given an input prompt, the model generates tokens that represent its reasoning process.

- These tokens are fed back into the model, repeating until a final answer is produced.

But there are two key limitations:

- Reasoning in human language: This forces the model to reason through words, even though human reasoning isn’t always expressed in language.

- Efficiency cost: Generating long chain-of-thought traces means many forward passes with a growing context window, making the process slow and expensive.

Enter Hierarchical Reasoning Models

Instead, HRMs take a different approach: latent reasoning.

- Given a prompt, the model performs its entire reasoning process internally.

- It outputs only the final answer, without the reasoning traces.

This might sound like a standard recurrent neural network (RNN), but HRM goes much further.

Brain-Inspired Dual Modules

Inspired by the human brain, HRM introduces two recurrent modules working together:

- H-Module (High-Level Module) – handles abstract reasoning and planning.

- L-Module (Low-Level Module) – manages fast, detailed computations.

These two modules are coupled and interact throughout the reasoning process. We’ll break down exactly how it works, but first, let’s look at the results.

HRM vs Top LLMs

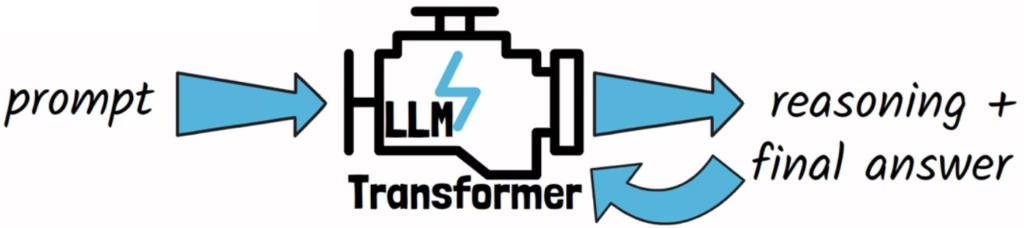

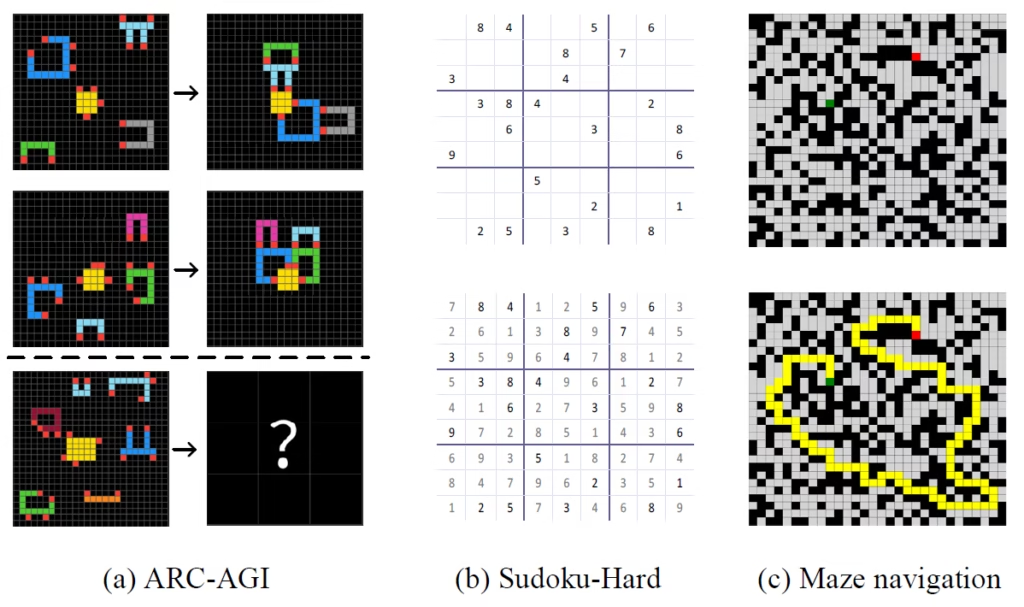

In the figure above, we see examples from the benchmarks used to evaluate the Hierarchical Reasoning Model.

- ARC-AGI: an IQ-test–like puzzle where the model needs to figure out rules from a few examples.

- Sudoku-Extreme: a dataset the researchers extracted from existing Sudoku datasets and made it significantly harder.

- Maze solving: where the model must find the optimal path between two points.

Trained on only 1,000 training examples and with just 27 million parameters, the Hierarchical Reasoning Model outperforms top reasoning models like DeepSeek-R1, Claude 3.7, and o3-mini-high across these benchmarks.

With that potential in mind, we’re now ready to break down how this model works.

The Hierarchical Reasoning Model (HRM) Architecture

Input and Embedding

First, the input goes through a trainable embedding layer, which turns it into a representation the model can work with.

Two Coupled Recurrent Modules

The Hierarchical Reasoning Model then uses two coupled recurrent modules, working together at different time scales:

- High-level module (the planner): Handles abstract reasoning and sets the overall direction.

- Low-level module (the doer): Runs fast, detailed computations to follow the high-level plan and work out the specifics.

While this analogy may not be 100% accurate it helps to understand the idea of the model.

To avoid confusion in the architecture figure above, note that there is a single set of weights for the high-level module and a single set of weights for the low-level modules. They are just drawn multiple times since they are running multiple times in a single forward pass.

HRM Forward Pass Flow

The low-level module starts first. As input, it takes the input embeddings, and the initial hidden states of both the high-level module and the low-level module. It then updates its hidden state.

Then, it runs T recurrent steps, on each one it consumes its hidden state from the previous step, together with the original input embeddings and the hidden state of the high-level module, which is still the initial one since the high-level module did not run yet.

Once done, its hidden state is sent up to the high-level module. The high-level module processes it along with its own previous hidden state, updates its plan, and sends a new high-level hidden state back down.

The low-level module then runs another T steps, now with the new hidden state of the high-level module, and sends the result back up. In the architecture drawing we have 2 cycles, but this nested loop can continue for N cycles until the model converges.

Finally, the last high-level hidden state is fed into a trainable output layer that produces the final tokens.

To simplify a bit, the low-level module takes several quick steps to reach a partial solution. That result is sent up to the high-level module, which then updates its plan. The low-level module resets, takes another run, and the cycle repeats, until the model converges on a final answer.

Overcoming the Limits of Standard RNNs

Avoiding Early Convergence

Recurrent networks often suffer from early convergence, meaning that they finish after only a few steps.

This interaction between the two modules in HRM helps to overcome this and achieve significant computational depth. When the low-level module starts to converge, the update from the high-level model acts like a reset for its convergence.

This allows HRM to reach greater computational depth comparing to standard recurrent networks.

Training with One-Step Gradient Approximation

Normally, recurrent models are trained using Backpropagation Through Time, or BPTT. In BPTT, the loss is backpropagated through every single step, which requires huge memory and often becomes unstable when the reasoning chain is long.

HRM avoids this problem by using a one-step gradient approximation. Instead of backpropagating through all T steps of the low-level module and all N cycles of the high-level loop, it only updates parameters based on the current step’s computation, colored with blue in the architecture drawing above.

- Benefit 1: Memory stays constant regardless of reasoning depth.

- Benefit 2: Training stability improves, avoiding exploding or vanishing gradients.

Adaptive Halting: Thinking as Long as Needed

Another key ingredient is adaptive halting.

After N cycles are completed, the last hidden state of the high-level module is not just passed to the output layer. Instead, it first passes via a halting head, that decides whether the model should stop, or reason for another N cycles. This way, the model can dynamically adjust its thinking time depending on task complexity.

- Easy problems → stop early, save compute.

- Harder problems → keep reasoning until solved.

This halting mechanism is trained with reinforcement learning, rewarding the model for stopping at the right time and producing a correct answer.

Why Depth Matters for Reasoning

Depth Scaling in Transformers

On the left, the researchers compare Transformers trained by scaling depth (more layers) versus width (more neurons per layer). Based on the above figure, clearly depth helps, but only up to a point, as performance eventually saturates.

Deeper Reasoning with HRM

On the right, when comparing Transformer, Recurrent Transformer, and HRM, the Hierarchical Reasoning Model achieves near-perfect accuracy on challenging Sudoku tasks. Its hierarchical structure allows it to benefit from depth in ways Transformers can’t.

References & Links

- Paper

- GitHub

- Join our newsletter to receive concise 1-minute read summaries for the papers we review – Newsletter

All credit for the research goes to the researchers who wrote the paper we covered in this post.