In this post, we break down the paper “Emergent Hierarchical Reasoning in LLMs Through Reinforcement Learning” and explain how reinforcement learning develops reasoning in large language models, and where the aha moments come from. Finally, we also review a new RL algorithm called HICRA that leverages these insights.

The Rise of Large Reasoning Models

Near the end of 2024, OpenAI released a series of models called o1, which demonstrated reasoning capabilities we hadn’t really seen before. These models marked the rise of what are now called large reasoning models, which are LLMs that spend significant thinking time to solve complex problems, thinking through problems step by step using long chains of thought. This long reasoning utilizes computing resources at inference time to increase the model’s capabilities, also known as test-time compute reasoning.

DeepSeek-R1: Open-Source Reasoning

In early 2025, DeepSeek shocked the AI community with the release of DeepSeek-R1, a reasoning model that rivals OpenAI’s models, but was released fully open-source, free for anyone to use and study. From the DeepSeek-R1 paper, a crucial learning was that reinforcement learning plays a critical role in developing these reasoning abilities in large language models. Specifically, DeepSeek used an RL algorithm called GRPO (Group Relative Policy Optimization), which became extremely popular since.

The Mystery of Emergent Reasoning

Despite these breakthroughs, a big question remains: what is actually happening during reinforcement learning that enables reasoning to emerge in LLMs?

Researchers observed surprising moments during training, where the model starts to reflect on its reasoning or reconsider its solution. Another such phenomenon is length-scaling, where reasoning performance improves with longer and more detailed outputs. These were described as “aha moments”, without a clear understanding of why they appear.

This Paper: Emergent Hierarchical Reasoning in LLMs

In this post, we dive into the paper “Emergent Hierarchical Reasoning In LLMs Through Reinforcement Learning”, which aims to shed clarity on why reinforcement learning works for large language models and why these aha moments appear.

Building on that, the paper also proposes an improvement to GRPO called HICRA (Hierarchy-Aware Credit Assignment), which we’ll review later in the post.

What Reasoning Means in Large Language Models

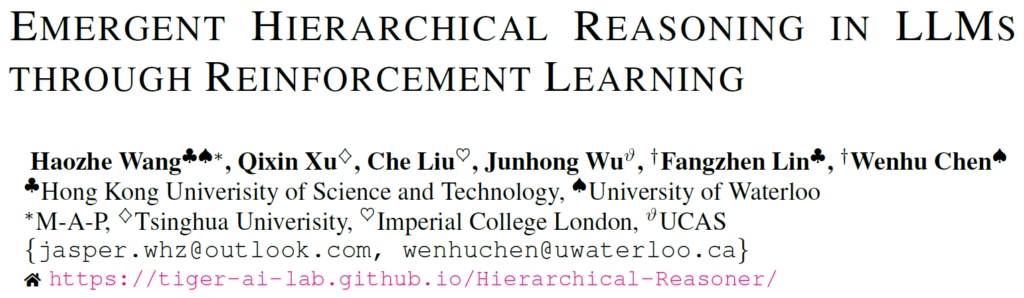

Before diving into reinforcement learning, it’s important to clarify what we mean by reasoning in large language models (LLMs). The paper illustrates reasoning as a hierarchical process with two levels: high-level strategic planning and low-level procedural execution. At the root of the reasoning tree, the model identifies the problem and generates high-level planning steps, represented as yellow nodes in the figure. These steps include strategic decisions such as “First I need to understand” or “I notice that”, guiding the model on what to do next.

Once a high-level plan is formed, the model transitions to low-level execution (blue nodes), carrying out concrete actions like mathematical calculations or applying previously learned skills. Importantly, this process is non-linear: the model can return to high-level planning to self-reflect, reevaluate its approach, and consider alternatives before continuing execution. After iterating through high-level planning and low-level execution, the model ultimately reaches a final answer.

How Reinforcement Learning Unlocks Hierarchical Reasoning in LLMs

With the picture of hierarchical reasoning in mind, let’s look at what actually happens during reinforcement learning.

LLMs Have Reasoning Priors from Pretraining

Large language models are not trained from scratch using reinforcement learning. Instead, RL is applied on top of base models that have already been extensively pretrained on large corpora of human-written text.

As a result, LLMs already contain a strong prior for this kind of hierarchical reasoning, simply because it appears throughout the pretraining data.

The central hypothesis of the paper is that RL unlocks reasoning by discovering, or rediscovering, this latent hierarchical structure inside the large language model.

Emergent Two-Phase Learning During RL

The paper observes two distinct phases during RL training:

- Phase One – Mastering Low-Level Skills: The model becomes proficient at foundational procedural tasks, ensuring reliable low-level execution.

- Phase Two – Exploring High-Level Planning: Once low-level skills are consolidated, the model shifts focus to strategic planning, exploring new approaches to solving problems.

These phases are emergent, not explicitly programmed, highlighting how hierarchical reasoning naturally arises during training.

Explaining the “Aha Moments”

These phases also shed light on the famous “aha moments” observed during RL training. Such moments occur when the learning bottleneck transitions from low-level execution to high-level planning, when the model discovers new strategies. Understanding this shift is a key insight into how reinforcement learning unlocks reasoning in LLMs.

Distinguishing Planning and Execution in LLM Outputs

The next question is how do we actually verify that this is what’s happening?

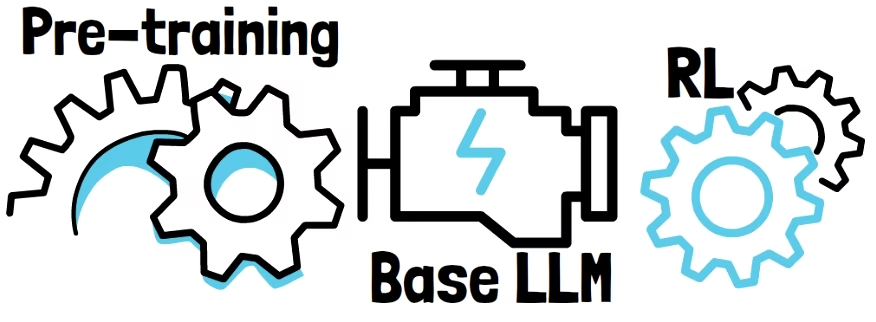

But to analyze reinforcement learning through this hierarchical lens, we first need a way to separate planning from execution in the model’s outputs. Concretely, the researchers distinguish between high-level planning tokens and low-level execution tokens.

Identifying Planning and Execution Tokens

In the above example from the paper, planning tokens are highlighted, while the remaining tokens are the low-level procedural execution tokens. One immediate observation is that most tokens are low-level tokens. We’ll come back to this while analyzing the results.

Another key observation is that planning tokens tend to appear in short sequences that represent strategic decisions, such as deciding to analyze the problem differently or to verify an intermediate result.

How To Distinguish Between Tokens In Training

Importantly, these strategic phrases are fairly generic. They’re not specific to a particular problem, but rather they represent reasoning strategies types. So, if we look across many successful reasoning traces, we would expect these strategic phrases to repeat frequently.

Building on this insight, the researchers build a set of strategic grams, which are short strategic planning phrases, identified based on their high frequency in successful reasoning traces. Then, determining whether an output token is a low-level token or a strategic planning token is straightforward, as it is determined by whether the token is part of a known strategic phrase.

Now that we can distinguish between execution and planning tokens, let’s see what we can learn from it.

Emergent Hierarchical Reasoning During RL

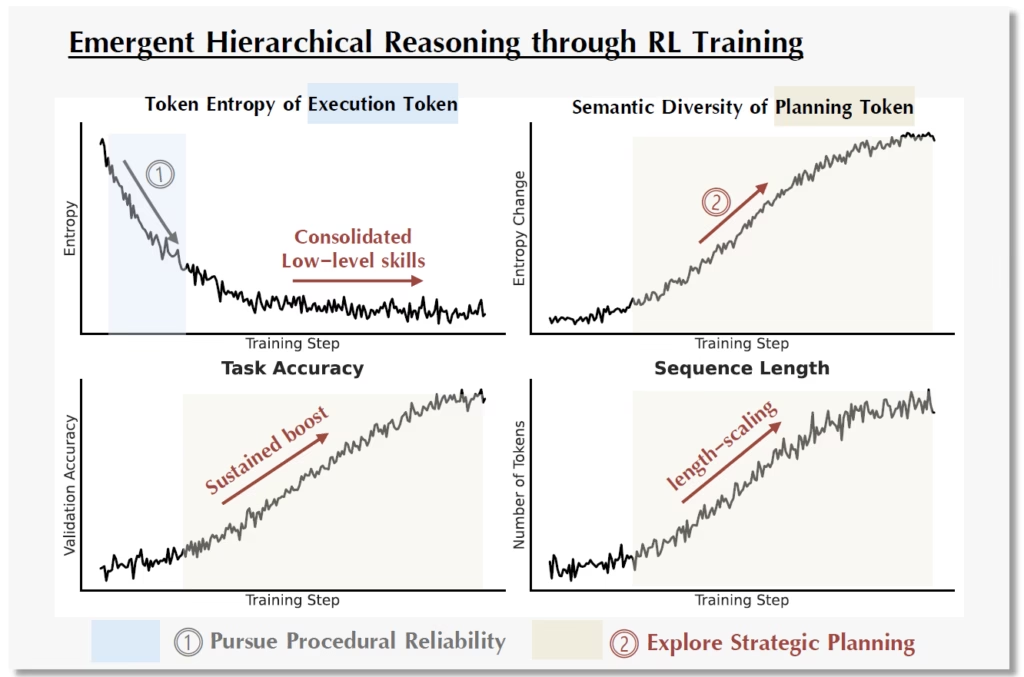

In the above figure from the paper, we see evidence for emergent hierarchical reasoning during reinforcement learning. Across all plots, the x-axis represents the training progress.

Phase One – Mastering Low-Level Execution

In the top-left plot, the y-axis shows the entropy computed only over low-level execution tokens. The token entropy is a measure of uncertainty in predicting the next token.

Early in training, we see a sharp drop in execution-token entropy, followed by consolidation. This indicates that the model starts with mastering low-level procedural execution.

However, if we look at the bottom-left plot, showing the model’s accuracy, we see that phase one does not yet produce a significant performance improvement. In other words, mastering execution is necessary, but not sufficient.

Phase Two – High-Level Planning Strategies Exploration

The top-right plot focuses on high-level planning tokens. For strategic planning, instead of token entropy, the authors introduce a new metric that measures semantic diversity, which is based on the frequency in which the different strategic phrases appear in the model’s outputs. We see a sharp increase in strategic diversity in this plot.

This indicates that the model is not converging on a single optimal strategy but is instead actively expanding its repertoire of strategic plans. This observation is important. Mastery in reasoning here is achieved by developing a rich and varied strategic playbook.

Why Semantic Diversity Matters

Importantly, if we only looked at standard token entropy, aggregated across all tokens, we would be misled into thinking that exploration has stopped due to consolidated low values. This is because token entropy is dominated by low-level execution tokens, which make up the majority of the output.

The semantic diversity metric however, completely contradicts that and shows that high-level planning is still actively evolving.

Performance Is Driven By Strategic Planning

Looking at the bottom plots, we see that the increase in strategic diversity closely correlates with both the performance gains in accuracy on the bottom-left plot, and with an increase in output length, shown in the bottom-right plot.

Improving Reinforcement Learning for Reasoning in LLMs

With these new insights, we can ask a natural follow-up question: Can we design better reinforcement learning algorithms specifically for reasoning models?

Algorithms like GRPO may suffer from a core inefficiency. They optimize the model agnostically across all tokens, without distinguishing between low-level execution tokens and high-level strategic planning tokens.

Building on this observation, the paper introduces a new reinforcement learning algorithm called HICRA, short for Hierarchy-Aware Credit Assignment.

HICRA builds directly on top of GRPO, but explicitly addresses the inefficiency of treating all tokens the same.

GRPO (Group Relative Policy Optimization) – Recap

We have a dedicated post on GRPO’s paper, but let’s do a brief recap so we can understand the idea behind HICRA.

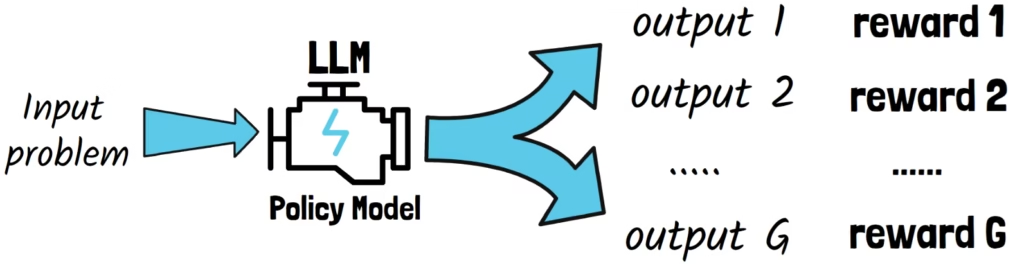

In GRPO, we start with a pre-trained large language model which we want to further optimize using reinforcement learning. In RL, we refer to the LLM we train as the policy model.

Given an input prompt, instead of generating a single response, GRPO samples multiple responses. We denote the number of outputs with capital G. Each response is then scored by a reward model or using pre-defined rules. The rewards provide a measure for the quality of the responses. We’ll want to update the model toward the best response.

However, these raw rewards are not used directly to update the model. Instead, GRPO computes a quantity called the advantage.

Advantage In GRPO – Opportunity For HICRA

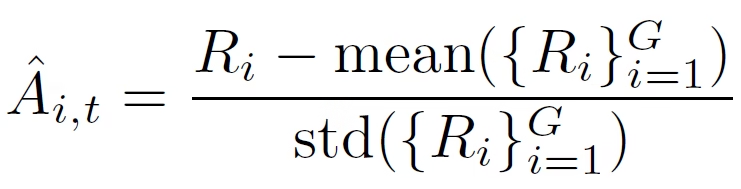

The advantage tells us whether a given response is better or worse than the average response for that prompt. The key observation for HICRA is that while rewards are defined at the response level, the advantage is applied at the token level. Now, it is not critical for understanding HICRA, but the following formula shows how the advantage is calculated in GRPO.

To calculate the advantage, we use an estimation for the average response value using the rewards of the sampled responses. This is why it’s called Group Relative Policy Optimization, since the quality of a response is measured relative to the group of sampled responses.

Importantly, we can see that in standard GRPO, every token in a response receives the same advantage value.

Understanding HICRA: Hierarchy-Aware Credit Assignment

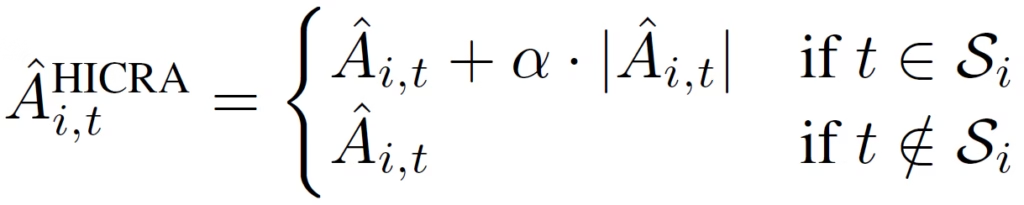

Let’s now understand what HICRA changes in the GRPO algorithm. HICRA leverages the hierarchy structure to do introduce a new innovation. If a token is part of a high-level strategic phrase, HICRA amplifies its advantage. It does so by introducing the following advantage formula:

If a token is not part of a strategic phrase, then it simply uses the previous formula. But if the token is part of a strategic phrase, it adds an extra term, proportional to the absolute value of the advantage. This scaling is controlled by a hyperparameter alpha between zero and one.

This new component amplifies the update for strategic tokens. The effect is that HICRA focuses the model’s learning signal on strategic planning decisions, while still preserving the stability of low-level execution learning.

HICRA Results

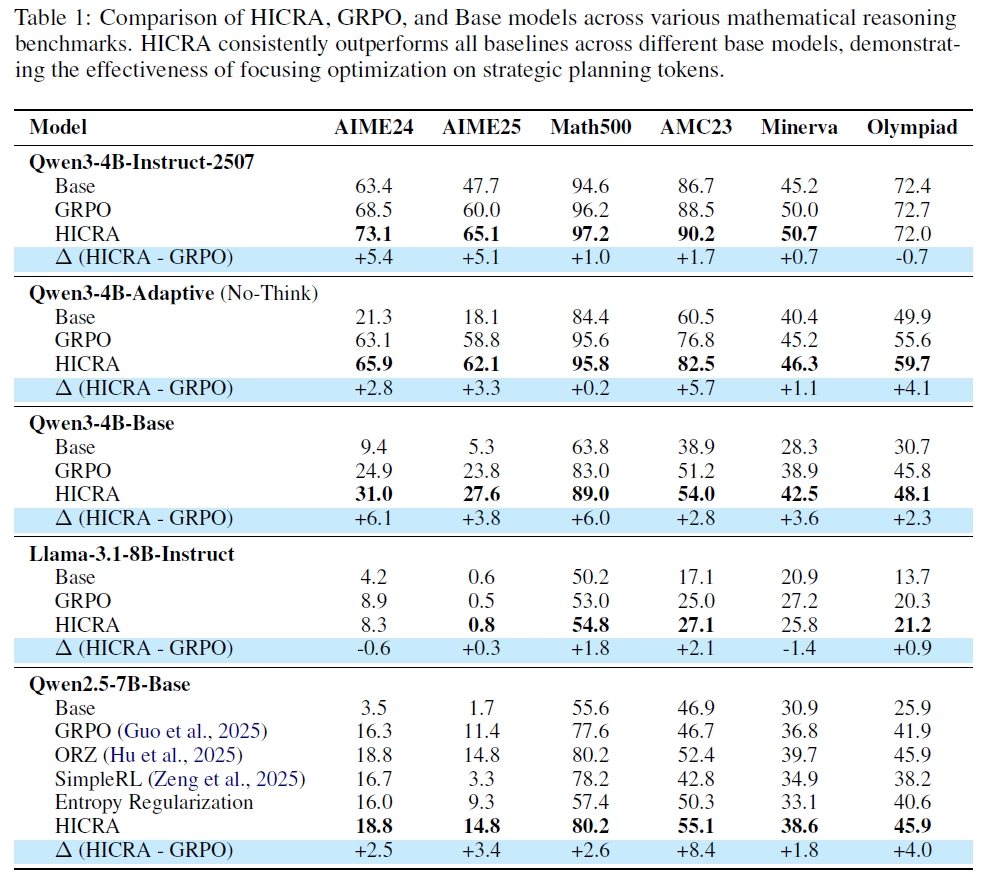

To evaluate the impact of HICRA, the researchers compare it directly against GRPO across multiple base models and a range of reasoning benchmarks.

Comparing HICRA and GRPO on Mathematical Reasoning

In the first table from the paper, we see results on several mathematical reasoning benchmarks. We can see that HICRA consistently outperforms GRPO where the improvement is highlighted in blue. These gains are observed across different models and benchmarks.

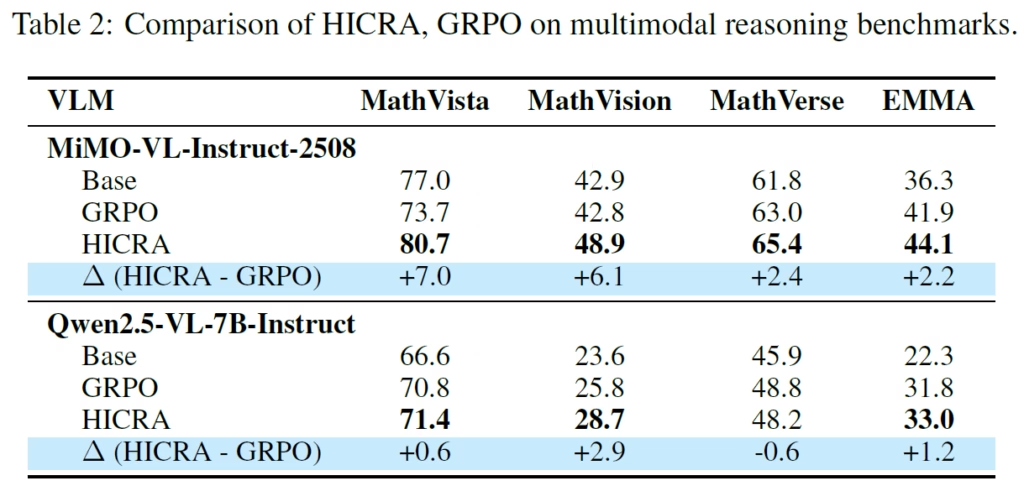

Results on Multimodal Reasoning Benchmarks

The paper reports a similar pattern on multimodal reasoning benchmarks, shown in the next table. Once again, HICRA delivers consistent and meaningful improvements over GRPO.

These experiments provide strong empirical support for the claim that focusing credit assignment on the emergent strategic bottleneck allows HICRA to develop advanced reasoning abilities more efficiently than token-agnostic approaches like GRPO.

References & Links

- Paper Page

- Code

- Join our newsletter to receive concise 1-minute read summaries for the papers we review – Newsletter

All credit for the research goes to the researchers who wrote the paper we covered in this post.