This post breaks down GDPO, NVIDIA’s solution to a key limitation of GRPO in reinforcement learning for large language models.

Introduction

Reinforcement Learning and Reasoning in Large Language Models

Reinforcement learning (RL) has become a core design element in the training process of large language models (LLMs). In particular, RL is used to shape reasoning capabilities, where models learn to spend significant thinking time to solve complex problems step by step using long chains of thought.

This wave was sparked in early 2025 with the release of DeepSeek-R1, which demonstrated that RL plays a critical role in developing these reasoning abilities in LLMs.

DeepSeek relied on an RL algorithm called GRPO, short for Group Relative Policy Optimization, which quickly became extremely popular.

GRPO Limitation for Modern LLMs

While GRPO has been very successful, it is primarily designed to work with a single reward signal, such as whether a model’s response is correct.

In practice, however, large language models should do more than simply generate a correct response. Users expect LLMs to have behaviors that align with diverse human preferences across a variety of scenarios, for example answering in a specific format of following structured outputs. For this reason, it has become crucial to incorporate multiple rewards at the same time, such as correctness, format compliance, safety constraints, response length, and more.

GRPO does not explicitly tell us how to handle this multi-reward setting.

GDPO: Extending GRPO to Multi-Reward RL

This is exactly the gap addressed by a recent NVIDIA paper titled GDPO: Group reward-Decoupled Normalization Policy Optimization for Multi-reward RL Optimization, which shows how to adapt GRPO to work reliably with multiple reward signals.

We have a dedicated post where we cover GRPO in depth. In this post, we’ll focus on what GDPO changes.

We’ll soon see what goes wrong when standard GRPO is applied to multiple rewards. But first, let’s do a quick recap on GRPO so we have the right context.

GRPO (Group Relative Policy Optimization) – Recap

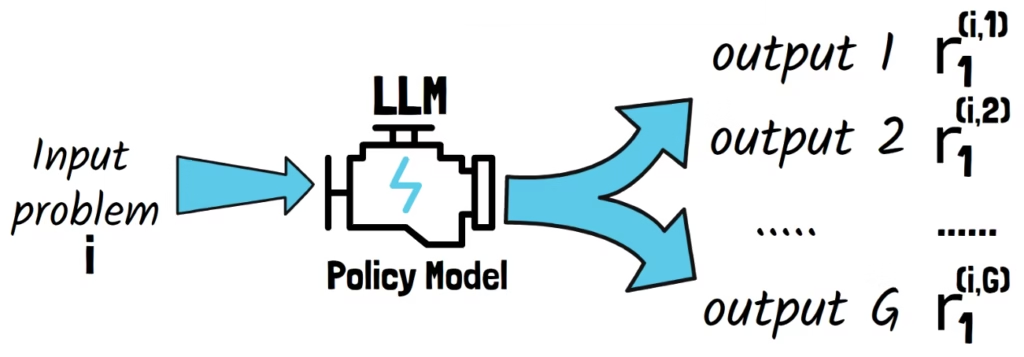

In GRPO, we start with a pre-trained large language model which we want to further optimize using reinforcement learning. In RL, we refer to the LLM we train as the policy model.

Given an input prompt, instead of generating a single response, GRPO samples multiple responses. We denote the number of outputs with capital G.

Each response is then scored, either by a reward model or using pre-defined rules. These rewards provide a measure for the quality of the responses.

Explaining The Notations

The notations in the illustration above could be simplified, but we use notation that will be helpful when we discuss GDPO in just a moment.

- G represents the total number of outputs.

- Lowercase i represents the input prompt

- The number next to i (right item on the tuple) represents the sampled output.

- The subscript of 1 for the reward means that this is the first reward signal, the only one we have at the moment.

GRPO Model Updates

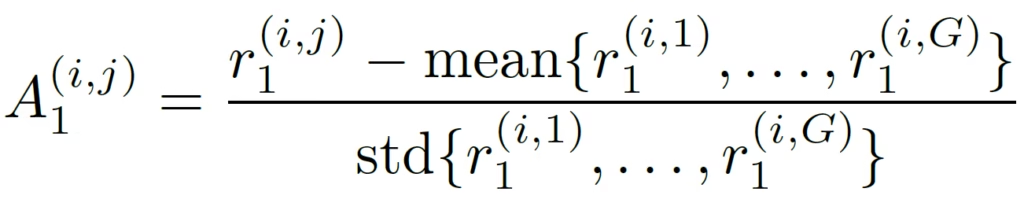

Given these multiple scored outputs for the same input, we want to update the model toward the best responses.

However, the raw rewards are not used directly to update the model. Instead, GRPO computes a quantity called the advantage, which tells us whether a given response is better or worse than the average response for that prompt. The following formula shows how the advantage is computed in GRPO. Lowercase j represents the sampled output, or rollout.

GRPO uses an estimation for the average response value using the rewards of the sampled responses. This is why it’s called Group Relative Policy Optimization, since the quality of a response is measured relative to the group of sampled responses.

This replaces the need for a different value model to estimate that.

GRPO With Multiple Rewards

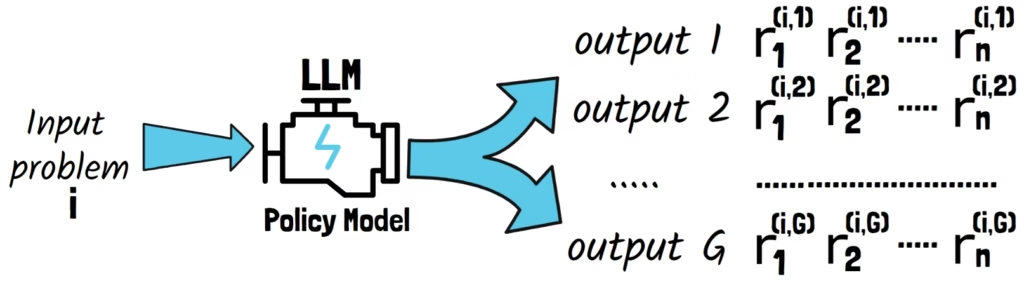

So far, this setup assumes a single reward signal. Now, what happens when we introduce multiple reward signals?

Instead of assigning a single reward to each response, each output is now scored with multiple rewards. We denote the number of reward signals with lowercase n.

A naïve way to extend GRPO to multiple rewards is to sum all of the rewards for each response. This summed reward is then treated as a single reward signal in the same advantage formula, as we can see below.

Why Naïvely Extending GRPO to Multiple Rewards Fails

Simple Example Setting

To illustrate the issue, let’s consider a simple setting.

- First, we only sample two responses for each sample, meaning capital G is 2.

- Second, we only have two different rewards for each response, meaning lowercase n is set to 2.

- Finally, we assume the rewards are binary, so each reward value is either 0 or 1.

- As a result, the total reward for each response can be 0, 1, or 2, since it is the sum of two binary rewards.

GRPO With Multiple Rewards Example

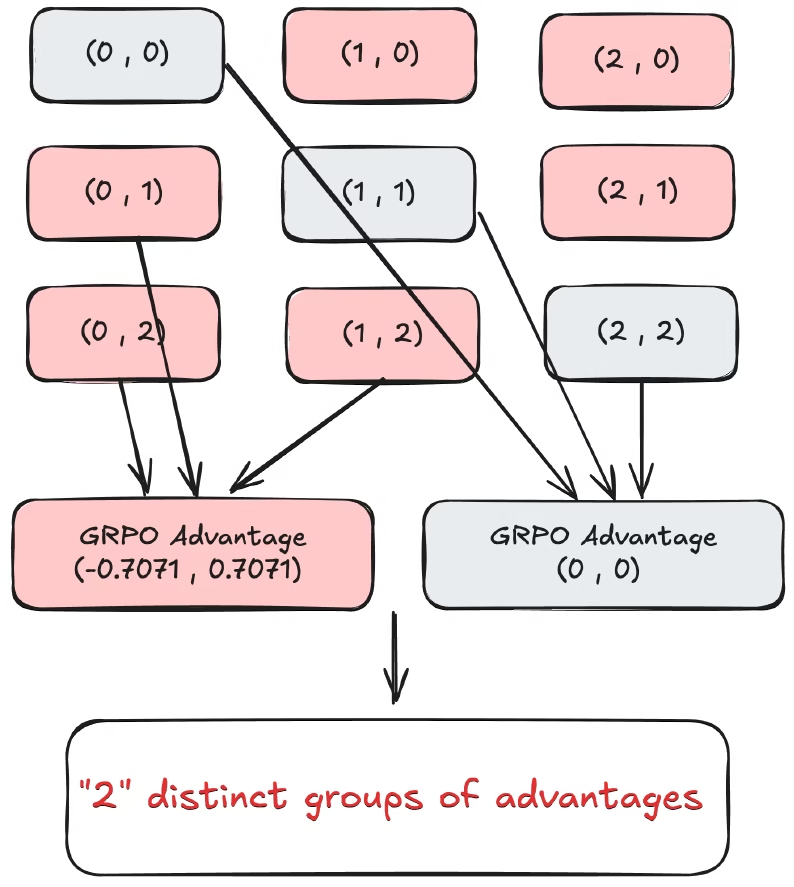

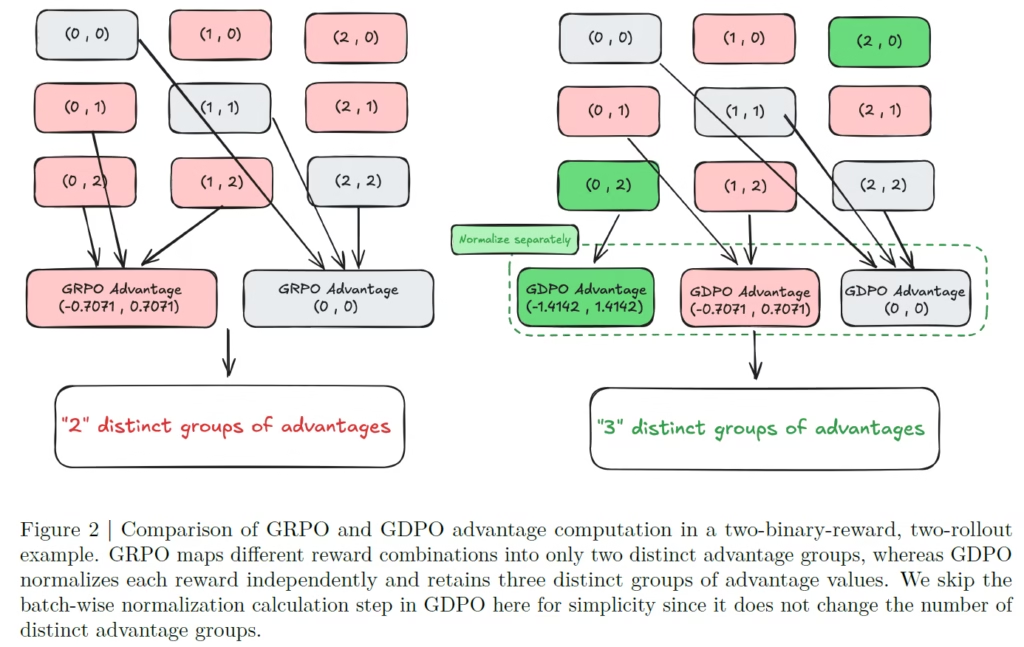

The above figure from the paper shows all possible reward combinations for this simple setting. Each tuple represents one prompt, with two responses. The left value is the total reward for the first response, and the right value is the total reward for the second response.

If we compute the GRPO advantage for each of these tuples, we get only two distinct advantage signals, shown in the middle.

When the total rewards are equal, the advantage is zero, since the advantage is calculated relatively to the group, and if all rewards in the group are equal, then none is better than the others.

More interestingly, all cases when the total rewards are not equal produce an advantage signal which is identical in its magnitude.

Note that the ordering does not matter. Since the responses are sampled randomly, swapping two responses simply flips the sign, but from a learning perspective, this still corresponds to the same magnitude signal.

Reward Collapse and Loss of Learning Signal

Now let’s consider the two cases shown in the upper-right of the figure, the (1,0) and (2,0) tuples. In both cases, the second response got a total reward of zero, meaning neither reward is satisfied. However, in one case the first response receives a total reward of one, while in the other it receives a total reward of two.

Despite this difference, GRPO assigns the same advantage in both situations. Intuitively, a response that satisfies two reward signals compared to none should produce a stronger learning signal than one that satisfies only a single reward.

This collapse of distinct reward combinations into identical advantage values hides important information from the training process and leads to inaccurate model updates.

Let’s now see how GDPO fixes this issue.

How GDPO Fixes Reward Collapse

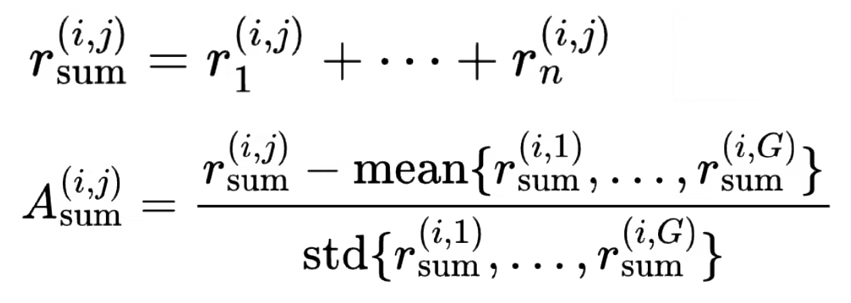

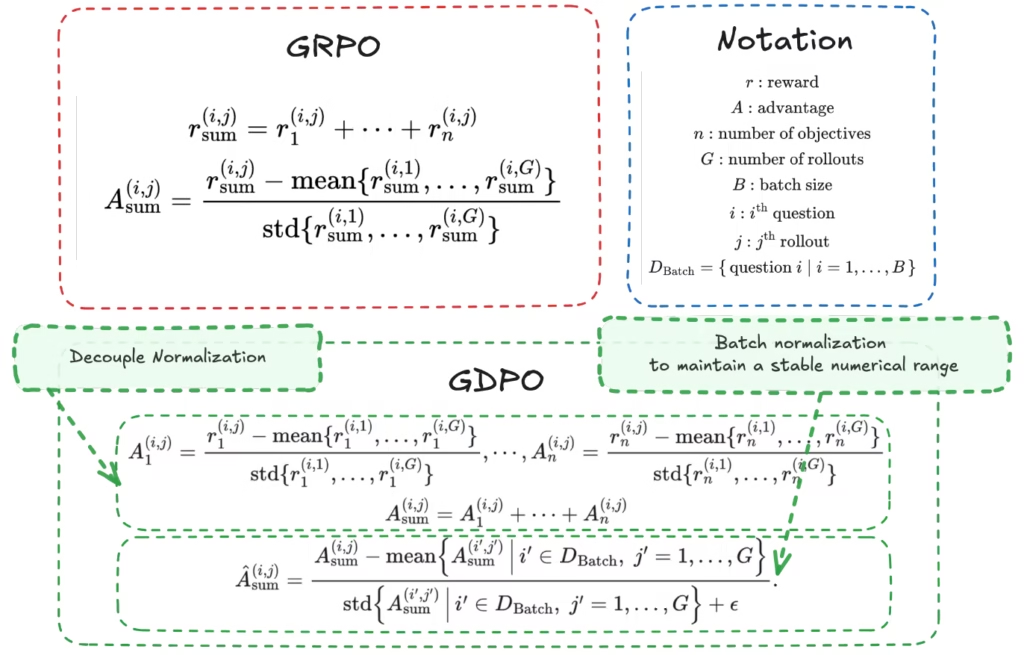

In the above figure from the paper, we see the advantage formula for both GRPO and GDPO in the multi-reward setting.

As we’ve seen, the naïve approach simply sums all reward signals and treats the result as a single reward. At the bottom of the figure, we see what GDPO changes.

Reward-Decoupled Normalization

Instead of directly summing the rewards, GDPO introduces an additional preliminary step. First, each reward type is normalized separately within the group of sampled responses. Correctness rewards are normalized relative to other correctness rewards, format rewards relative to other format rewards, and so on.

This is equivalent to computing the original GRPO advantage independently for each reward signal, as if it was the only reward. This is the meaning of reward-decoupled in the GDPO name.

These per-reward normalized advantages are then summed together to form a single learning signal.

Batch-Level Normalization for Training Stability

However, this summed signal can grow in magnitude as we increase the number of reward types, which may destabilize training. To ensure training stability, GDPO applies an additional batch-level normalization step.

This is another difference comparing to GRPO. GRPO normalizes advantages only across responses sampled for the same prompt. GDPO normalization includes both responses sampled for the same prompt, and also responses from other prompts in the same training batch.

GDPO Preserves More Information Than GRPO

Fixing The Simple Setting Example

If we go back to our earlier example, where GRPO produced only two distinct advantage value combinations, we now see that GDPO resolves this issue by producing three distinct advantage combinations.

Specifically, the two reward combinations we’ve discussed earlier, (1,0) and (2,0), now receive different advantage values, restoring information that was previously lost. Of course, this is a very simple setting, so we just see an increase of one advantage group. In practice, we see below that this effect scales significantly in more realistic scenarios.

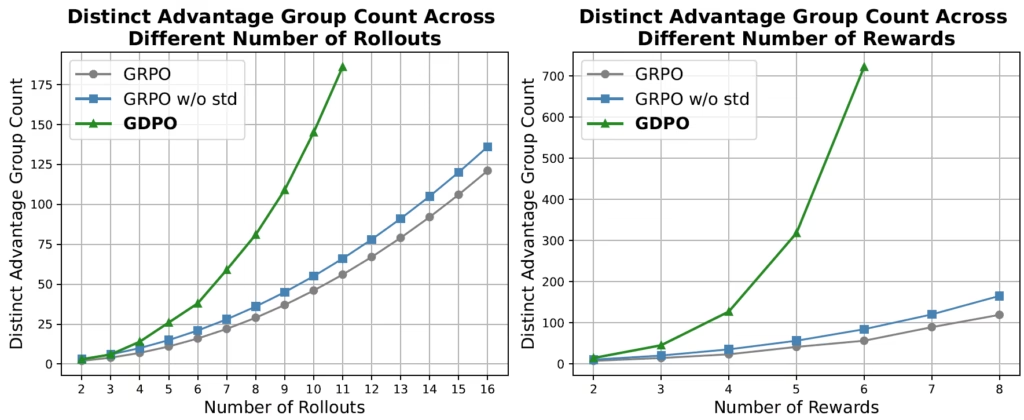

GDPO vs GRPO Information Preservation At Scale

In both plots, the y-axis shows the number of distinct advantage values. The left plot shows the number of sampled responses on the x-axis, while the right plot shows the number of reward signals on the x-axis.

In both cases, the green curve shows that GDPO produces significantly more advantage combinations comparing to GRPO, indicating that GDPO preserves far more information in the learning signal.

GDPO vs GRPO Performance Comparison

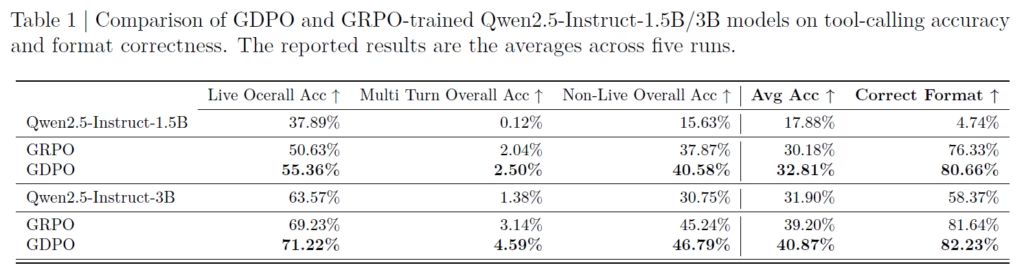

Finally, the above table shows a performance comparison between GDPO and GRPO on tool-calling accuracy and format correctness.

NVIDIA fine-tunes the 1.5B billion parameters and 3 billion parameters versions of Qwen2.5-Instruct, and in both cases GDPO achieves clear improvements in both tool-calling accuracy and format compliance.

References & Links

- Paper Page

- GitHub Page

- Join our newsletter to receive concise 1-minute read summaries for the papers we review – Newsletter

All credit for the research goes to the researchers who wrote the paper we covered in this post.