Nowadays, AI plays a significant role in our daily lives. Yet, most AI systems are still crafted by humans, who design both the system architecture and the learning process. But what if instead, AI could design and continuously improve itself? Such automation has the potential to significantly accelerate the AI development.

In this post, we dive into a recent paper from Sakana AI and the University of British Columbia that pushes the idea of self-improving AI a step forward, titled Darwin Gödel Machine: Open-Ended Evolution of Self-Improving Agents.

Introduction – From Gödel Machine to Darwin Gödel Machine

The concept of a Gödel Machine was first proposed by Jürgen Schmidhuber in 2003. Inspired by the work of Kurt Gödel, it describes a theoretical AI system that can rewrite its own code in a provably beneficial way. The Gödel Machine continuously searches for improvements and only modifies itself when it can mathematically prove that the new version is better. While this concept is fascinating, its reliance on formal proofs makes it difficult to implement in practice.

Sakana AI’s Darwin Gödel Machine (DGM) takes a more empirical approach. The empirical approach is inspired by biological evolution, hence the addition of Darwin to the name. Instead of relying on strict mathematical proofs, the DGM uses open-ended evolution to refine AI systems. We’ll explore what open-ended evolution means shortly.

Before we dive into the details, it’s important to note that the researchers envision frameworks like this having the power to transform the training process of large language models (LLMs) and even improve their architecture. However, such a process would require substantial computation resources. Therefore, the scope of this paper is focused on developing self-improving AI agents that use frozen LLMs/. Specifically, the paper explores the self-evolution of coding agents.

How the Darwin Gödel Machine Works

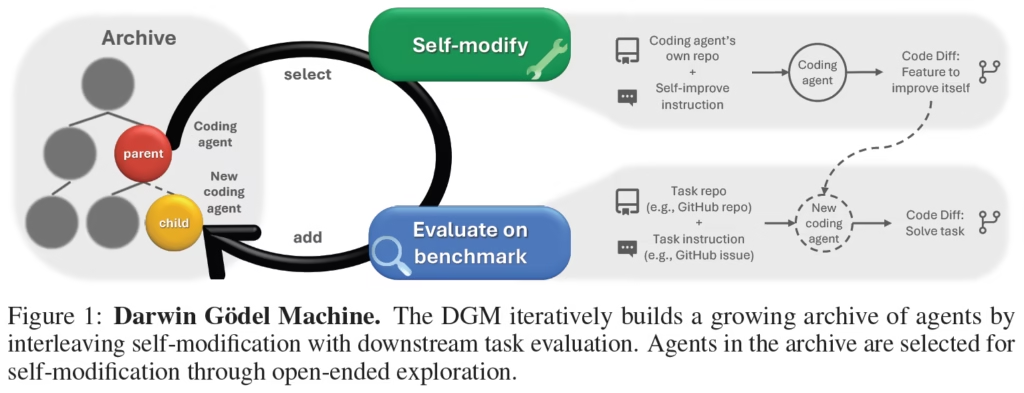

To understand how the Darwin Gödel Machine works, let’s examine the following figure from the paper.

Archive of Agents

On the left side, we see a tree structure, which represents an archive of the evolutionary history of coding agents. The process starts with a single root node, which is the original agent. Each branch in the tree represents a self-modification event, where an agent rewrites part of its own code to create a new version.

Agent Selection for Evolution

During each evolution step, the system selects an existing agent from the archive. Importantly, the chosen agent is not necessarily the best one. The system does not simply refine a single version until no further improvements can be made.

Instead, every agent in the archive has a non-zero probability of being selected, where better performing agents have a higher probability. To ensure diversity, the probability is also influenced by the number of children an agent has already produced, preventing excessive evolution from a single node.

This mechanism is a crucial part of the open-ended evolution process, helping to explore the solution space more broadly and avoid getting stuck at a local optimum.

Occasionally, a single evolution step might underperform compared to other agents, but it could introduce a crucial skill which will take after a few more evolution steps to mature.

Self-Modification

Once an agent is selected, it is instructed to modify itself (top-right of the image above). It gets its own code repository as input, along with a self-improvement instruction, to generate a new version of its own code.

An important clarification is that the agent isn’t just improving its coding skills, but also its self-improvement abilities. In other words, the part of the agent responsible for modifying itself is also evolving during each step of the process. This meant to ensure that over time, the agent becomes better not only at solving tasks but also at rewriting its own architecture.

Evaluating The New Agent

Finally, the newly created agent enters the evaluation phase. First, the agent must compile successfully and demonstrate self-improvement skills on a small set of dedicated tasks. If it fails this initial test, the new agent is discarded. Otherwise, it is further evaluated against a benchmark and being added to the archive with its benchmark result.

Darwin Gödel Machines Results

Evaluation Setup

The researchers used two benchmarks for evaluation during the evolution process:

- SWE-bench Verified – a set of real-world software engineering tasks that have been validated as solvable by humans.

- Polyglot – while SWE-bench Verified includes only python tasks, Polyglot consists of challenging coding exercises across multiple programming languages.

DGM Comparison With Baselines

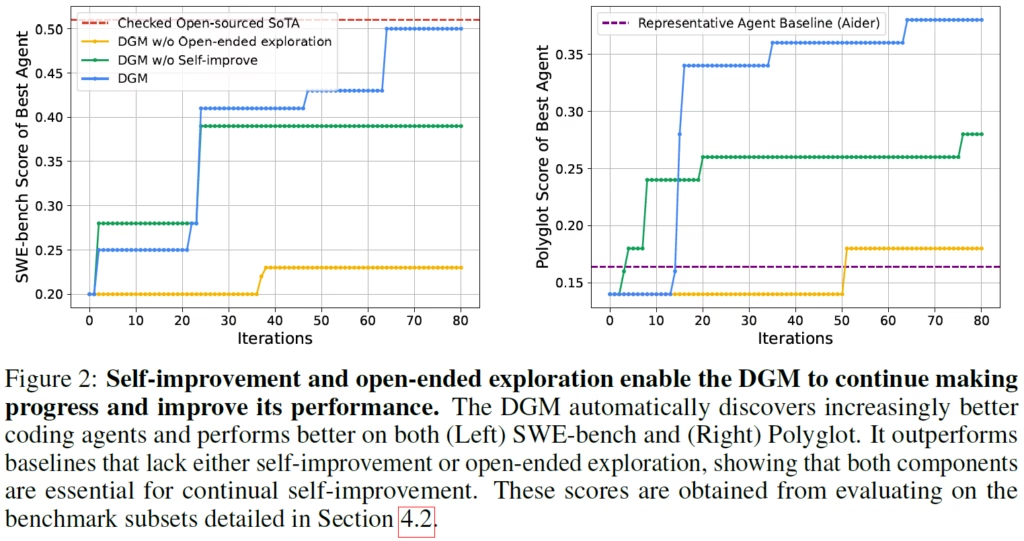

In the above figure, we can see the performance of Darwin Godel Machine comparing to baselines as the evolution process progresses. The x-axis represents the number of iterations passed in the evolution process, and the y-axis shows the performance score of the models.

- The blue line indicates the performance of the Darwin Gödel Machine (DGM) across iterations.

- The red line shows the performance of the top open-source model, which was validated by the SWE-bench team. After 80 iterations, the DGM reaches a comparable performance level.

- The yellow line represents a baseline version of the DGM, that does not employ open-ended exploration. In this version, the initial agent is refined until it stops improving or until it loses its self-improvement capability. The significant performance gap shows the value of maintaining an archive of agents and leveraging open-ended evolution.

- The green line shows the impact of evolving the self-improvement capability in addition to the coding skills. In this version, the agent only improves its coding skills while the self-improvement module remains fixed, resulting in a noticeable performance gap.

On the right, a similar trend between the baselines is observed for the Polyglot benchmark. The purple line represents an open-source baseline for the Polyglot benchmark, called Aider. Notably, while starting with an initial agent below this level, we see that the Darwin Gödel Machine significantly outperforms this baseline at the end.

Full Benchmark Vs Benchmark Subset Results

It is worth mentioning that the numbers we see in the charts reflect the agents’ score on the samples used for evaluation and not on the full benchmarks. Only the initial agent and the best agent were evaluated on the full benchmarks.

On the full benchmarks, the Polyglot agent was improved from 14.2% to 30.7%, and for SWE-bench the paper mentions that the results are comparable to those obtained on the benchmark subset, which is an improvement from 20% to 50% as we see on the chart.

DGM Evolution Example

Another interesting figure shows how the tree of agents looks like for a Darwin Gödel Machine using SWE-bench for evaluation.

- The node colors represent the score of each agent on the benchmark.

- The tree starts at the top with the initial agent.

- We see that nodes can have multiple children.

- When a child does not meet the requirements, it is not evolved further.

- The top-performing agent is marked with a star.

On the right, the blue line represents the best score in each iteration. The black line represents the evolutionary path from the root node to the best agent. For example, at the fourth iteration, the agent underperformed compared to another branch, since it is below the blue line.

However, in a later iteration, it developed a crucial skill of editing files via string replacement rather than replacing entire files, ultimately leading to the emergence of top agent in the tree.

In each node on that path, we see a new capability the agent developed. Another example is automatically summarize the context when reaching the context limit.

References & Links

- Paper

- Code

- Join our newsletter to receive concise 1-minute read summaries for the papers we review – Newsletter

All credit for the research goes to the researchers who wrote the paper we covered in this post.