In this post, we go back in time to a fundamental paper by DeepSeek, titled DeepSeekMath: Pushing the Limits of Mathematical Reasoning in Open Language Models, which introduced GRPO (Group Relative Policy Optimization), the reinforcement learning (RL) algorithm used to train DeepSeek-R1.

Introduction

Near the end of 2024, OpenAI released a series of models called o1, which demonstrated remarkable reasoning capabilities previously unseen. These releases initiated the development of large reasoning models, which are large language models (LLMs) that are tailored to devote significant thinking time to solve complex problems, using long chain-of-thought reasoning. This long reasoning effectively utilizes computing resources at inference time to increase the model’s capabilities, also known as test-time compute reasoning.

In early 2025,the AI community was stunned, when DeepSeek released a reasoning model that rivals OpenAI reasoning models, called DeepSeek-R1. But not only that, DeepSeek-R1 was released as open-source, free for anyone to use, along with a research paper that described how to train such a model. We already covered DeepSeek-R1 paper in the past, and in this post we devote a dedicated review of DeepSeekMath, the paper that introduced the core component behind it – GRPO (Group Relative Policy Optimization), a novel reinforcement learning algorithm.

For a complete picture , we cover the full paper, describing the development process of a domain-specific model that excels in mathematical reasoning. If you’re only interested in learning about GRPO, you may skip ahead past the first few sections.

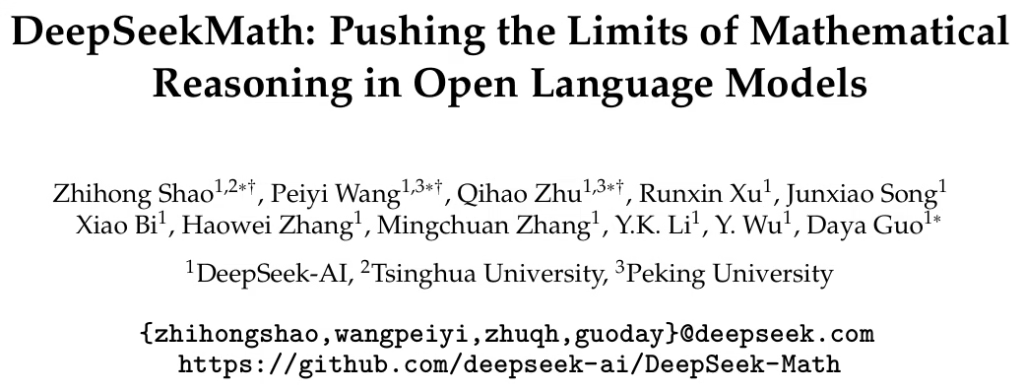

DeepSeekMath Training Pipeline in High-Level

To have an idea on what we’re going to talk about, we start with a high-level description of the DeepSeekMath training pipeline, and afterwards we’ll dive into each component on the pipeline.

DeepSeekMath starts with a pre-trained LLM for code, DeepSeek-Coder-Base-v1.5 7B. The researchers also tried starting with a general LLM , and discovered that starting with a code LLM achieves better results.

The model undergoes three stage training process that resembles how LLMs are trained:

- Math pre-training – a pre-training stage on a large corpus of math data.

- Instruction tuning – a supervised fine-tuning stage on math instructions.

- Improve from feedback – training the model using GRPO reinforcement learning.

We’ll now expand on each of the above stages.

DeepSeekMath Training Stage 1 – Math Pre-Training

The role of the first stage is to create the DeepSeekMath model is a pre-training stage. In this stage, the pretrained model, DeepSeek-Coder-Base-v1.5 7B is continue training on a large math corpus of 120B tokens. The paper does not explicitly state the training objective for this stage, but it is likely trained by predicting the next token in a sequence, as usually done in the pre-training stage of large language models.

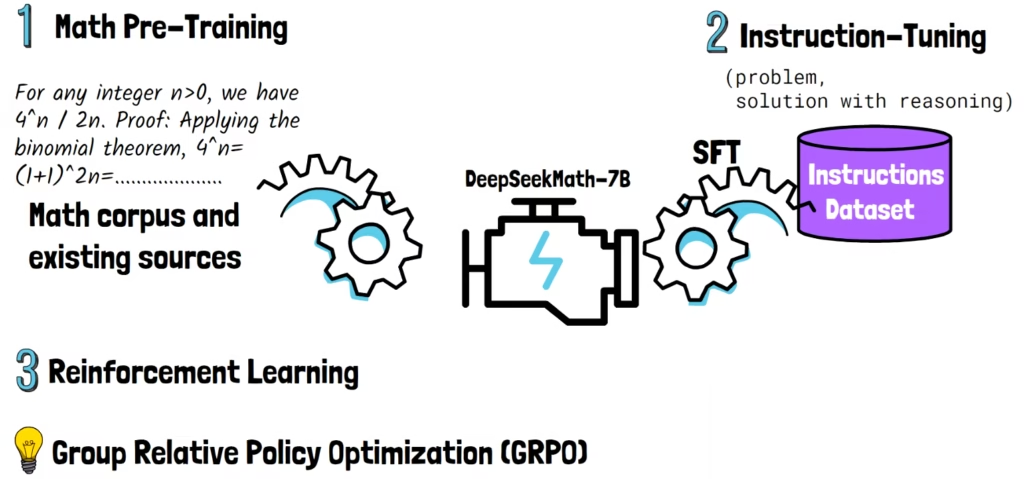

Constructing The Dataset

We can learn about how DeepSeek curated the math corpus using the above figure from the paper. We start with an existing small high-quality math dataset called OpenWebMath (on the left, of the image above), and run the following iterative pipeline:

- Math Classifier Training – Train a model to classify whether an input text is math or not. This is achieved by taking positive examples from the seed dataset, and random web pages as negative examples.

- Collect Math Web Pages – Using that classifier, find math web pages from Common Crawl, a dataset with huge collection of web pages. Before processing the web pages from Common Crawl, the researchers first deduplicate pages based on their URLs and their similarities to other web pages, resulting in 40B web pages. To filter out low-quality mathematical content, only results where the classifier confidence is high are kept. The selected web pages are added to the math corpus (right on the above image).

After this process, the math corpus is not diverse and large enough, mainly due to the limited diversity in the seed dataset, resulting in a not strong enough classifier. To address this, the following two steps are added to the curation process:

- Extract Math Websites – from the current math corpus, we find domains where over 10% of their web pages have been classified as math and collected into the corpus. For example we can think of mathoverflow.net as example, which is a question and answer website for math.

- Extend Seed Dataset – Within extracted math websites, the researchers manually annotate the URLs which are associated with mathematical content. For example, mathoverflow.net/questions, which contains the math questions and answers. Then, web pages under such URLs which were not yet collected are added to the seed corpus, so we can train a smarter classifier in the next iteration.

The researchers ran four iterations of the this process, ending with 35.5M mathematical web pages which comprises 120B tokens. The researchers stopped after 4 iterations since the last iteration contributed just additional 2% of samples comparing to the previous one.

Training DeepSeekMath-Base 7B

In this section we introduce DeepSeekMath-Base 7B, the first version of the model. This model is initialized from DeepSeek-Coder-Base-v1.5 7B, and trained for 500B tokens. This includes the math corpus curated in this paper, and data from various other existing sources of math, coding, and natural text.

In the below table, we can see that the base model outperforms other baselines, including a 540 billion parameters math model.

DeepSeekMath Training Stage 2 – Supervised Fine-Tuning

In this stage, the researchers construct a mathematical instruction-tuning dataset covering English and Chinese problems from different mathematical fields and of varying complexity levels. The researchers pair the problems with solutions that include a reasoning process.

The base model is then fine-tunned on this dataset, to create the second version of the model, called DeepSeekMath-Instruct 7B.

DeepSeekMath Training Stage 3 – GRPO Reinforcement Learning

To further improve the model, the researchers continue training the model using a novel reinforcement learning (RL) algorithm called Group Relative Policy Optimization, or GRPO in short.

GRPO is built on PPO (Proximal Policy Optimization), a widely used RL algorithm from 2017. To be in a better position to understand the innovation behind GRPO, let’s start with a high-level overview of PPO, and afterwards continue to GRPO.

Proximal Policy Optimization (PPO) Overview

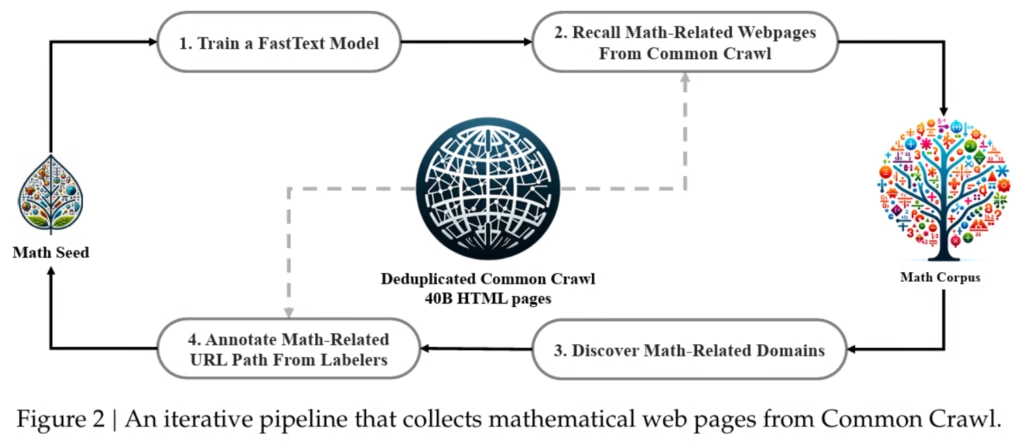

The Policy Model

Let’s say that we have a pre-trained LLM that we want to train with reinforcement learning using PPO. In RL, we refer to the LLM as the policy model. Given an input prompt, we feed it into the LLM to get a response. Then, we want to update the model based on the quality of the response. To do that, few things happen with that response.

The Reward Model

First, we pass the prompt and the response via a reward model, to get a reward for the response. The reward model is another dedicated model trained for the purpose of being a reward model. The reward provides a measure for the quality of the response. However, this is not a strong enough signal to train the model. The signal we’re actually looking for is called an advantage.

The Value Model And The Advantage

The advantage tells us whether a response is better or worse than the average for a given prompt. To calculate the advantage, we need an estimation for the quality of the average response. This value is noted as v, short for value, since it represents the value of the current state. We get this value from another dedicated model called the value model.

The Reference Model And The KL Penalty

With both the value and the reward, we can calculate the quality of the response comparing to the average. However, there is another component that is included in the advantage. During the reinforcement learning stage, we don’t want to drift away too much from the pre-trained large language model that we train. The original pre-trained model is referred to as the reference model.

Using the reference model, we add a KL penalty. The role of the penalty is to be high if the probability of the response is significantly different between the new model and the reference model, punishing the model if it tries to drift away too much. With these three components, we calculate the advantage that is used to update the model.

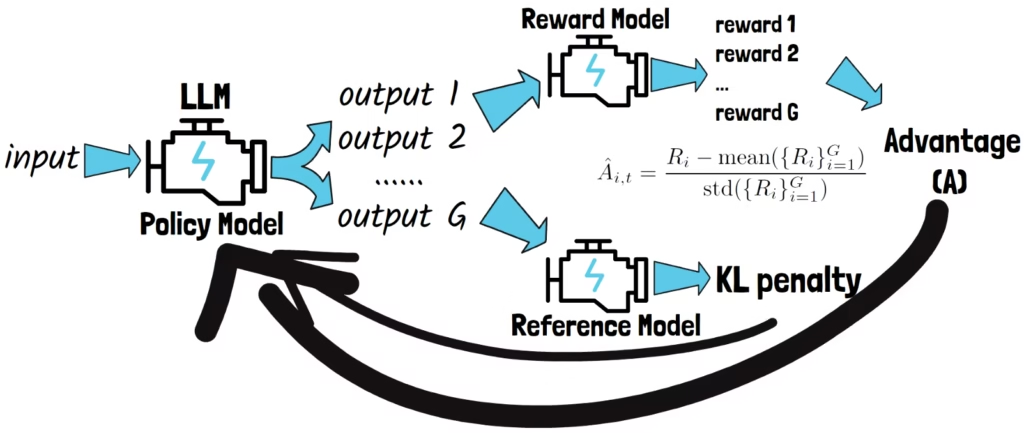

Group Relative Policy Optimization (GRPO) Overview

Let’s now understand what GRPO changes in PPO with a high-level overview of GRPO.

Removing The Value Model

As before, we have a LLM which we want to train with reinforcement learning, which we refer to as the policy model. Before, we had three additional models, the reward model, the value model, and the reference model. GRPO completely removes the value model.

The value model is usually of comparable size to the LLM that we train, which also needs to be trained. This dependency can add a significant memory and compute overhead. Remember that the role of the value model was to estimate the value of an average response, as part of the advantage calculation, so let’s see how GRPO accomplishes that in a different way.

Calculating A Relative Advantage

Instead of a single response, GRPO samples multiple outputs from the policy model, we note the number of outputs with capital G. Then, the reward model generates a reward for each of the sampled responses. It is worth mentioning that in DeepSeek-R1, instead of a reward model, the reward was calculated based on rules, such as whether a math answer is correct.

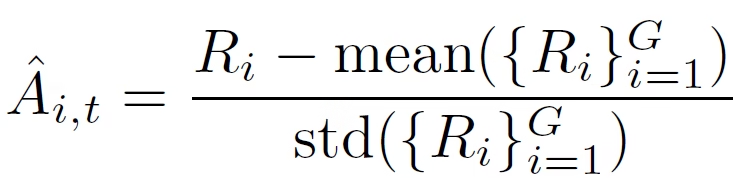

The advantage is then calculated for each response using the following formula.

Notice we normalize the rewards by subtracting the average reward and dividing by the standard deviation of the rewards. So, instead of a value model determining what is the average value, we estimate that using the responses that we sampled. This is the meaning of “group relative” in GRPO’s name, since the advantage is calculated relatively to the group of sampled responses.

The KL Penalty

Unlike PPO, there is no KL penalty as part of the advantage formula. However, GRPO still uses a KL penalty, just not inside the advantage. This will be clearer in the next section where we will look at the optimization objectives.

Both the advantage and the KL penalty contribute to the model update as part of training.

GRPO Optimization Objective

For a deeper understanding, let’s now take a look at optimization objectives of PPO and GRPO. Again, don’t worry about the math as we’ll focus on the essence and describe it in plain words.

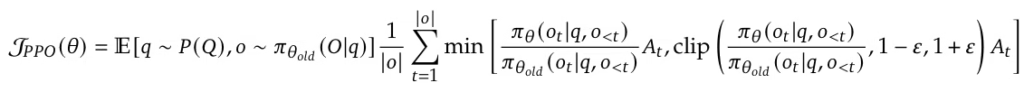

PPO optimization objective:

GRPO optimization objective:

Let’s start by discussing a few similarities between the two objectives.

The Policy Ratio

First, we see an identical ratio that appears in both objectives. This ratio is called the policy ratio. In our case, the policy is the LLM that we train. Given a question q, both the numerator and the denominator represent the probability of a certain response by the LLM, noted with a lowercase o. However, the numerator represents the new probability, after the LLM has been updated in a certain training step, while the denominator represents the old probability before the LLM has been updated.

The denominator is just a number determined by the current state of the model, while the numerator are the parameters that we optimize in each training step. The ratio between them reflects the change in the LLM confidence for a specific response. In other words, how probable is the same response, before and after a training step. If the ratio is greater than 1, the new policy is more confident about the response. If the ratio is less than 1, the new policy is less confident about the response.

Guiding The Model To Prefer Good Responses

As an intuition, we want to update the model to prefer good responses, so if the response is great, we’ll want that the policy ratio will be greater than 1, and if the response is poor, we’ll want that the policy ratio will be lower than 1.

At the core of these objectives, we multiply the policy ratio by the advantage. This guides the updates to the model to increase the probability of responses with positive advantage.

Clipping – Avoid Too Large Updates

Now, why do we have the policy ratio and the advantage twice inside each objective? We see we take the minimal value between the multiplication of the policy ratio and the advantage, and another component created with a clip function. The clip function limits the size of the update using a hyperparameter called epsilon.

Clipping is a reinforcement learning technique that helps to stabilize the training process by preventing large updates to the model. We want each training step to make small, incremental improvements rather than drastic changes that could destabilize training, and clipping helps with that.

Think that each training step is not exposed to the full training data, but rather just to a small batch of training samples. We don’t want any single small batch to have a dramatic impact. Gradual improvements over time lead to a more stable training.

Ok until now we described shared components between GRPO and PPO in the objectives. Let’s now take a look at some differences.

Using Multiple Outputs To Update The Model

As mentioned earlier, the main difference is that in GRPO we sample multiple outputs for each question. We see that we calculate the objective for each of the sampled responses, and average by the number of sampled responses, noted with a capital ‘G’. We also saw that the multiple responses are used to calculate the advantage, where their average reward is used to calculate the relative value for each response.

Token-Level Training Signal

We saw that the advantage is dependent only on the full response, and not on intermediate partial responses. However, we can notice that there is another sum in the objective, that goes over each token in the response.

The reason for that is that even though there is no ground truth label for each token, and only a reward for the entire response, the training signal still needs to be propagated at the token level to guide the model’s learning step by step, since the model generates one token at a time.

The policy ratio for example, is calculated based on the probabilities to generate the next token, for each of the tokens in the response.

Outcome Supervision vs. Process Supervision

DeepSeekMath presents two different mechanisms to use GRPO, that are different in the way we calculate the advantage. The one we’ve discussed so far, which is also the method used in DeepSeek-R1, is called outcome supervision. This is because we only reward the final outcome.

Another mechanism, which we do not dive into its formulas is process supervision, where we reward each reasoning step and not only the final outcome. In this case the advantage is also dependent on where we are in the reasoning process.

KL Penalty in GRPO and PPO

We mentioned before the KL penalty component. We see it exists in the GRPO objective but not in PPO’s objective. The reason is that in PPO it is part of the advantage itself which is not shown in the formula here, while in GRPO it is extracted out of the advantage.

DeepSeekMath Performance

The reinforcement learning is the final phase in the training process, creating the final DeepSeekMath model called DeepSeekMath-7B. In the above chart, we can see the performance of open-source models over time on the MATH benchmark, including math specific and much larger models. Notably, we see DeepSeekMath’s jump in performance, reaching the level of closed source models at the time.

References & Links

- Paper

- DeepSeek-R1 Paper Review

- Join our newsletter to receive concise 1-minute read summaries for the papers we review – Newsletter

All credit for the research goes to the researchers who wrote the paper we covered in this post.