In this post we break down a Meta’s paper: SWE-RL: Advancing LLM Reasoning via Reinforcement Learning on Open Software Evolution. This paper applies DeepSeek-R1 approach to real-world software engineering.

Introduction

The release of DeepSeek-R1 recently made waves in the AI world. Specifically, it showed how powerful reinforcement learning (RL) can be in boosting the reasoning abilities of large language models (LLMs). But, when it comes to coding tasks, DeepSeek-R1 mainly focuses on competitive programming, where every problem is self-contained, and you can easily run the code to check if the solution works. That makes it easy to measure success by simply executing the code.

In the real world, though, like fixing a bug in a complex backend service, it’s not always that simple. Running the code might need a dedicated environment, and even if you can execute it, figuring out if the solution is actually correct can be much trickier. This is why models like DeepSeek-R1 still struggle when it comes to real-world software engineering.

In this post, we explain a new paper from Meta, a natural follow-up to DeepSeek-R1, which proposes a way to scale RL for real-world software engineering by training models on how open-source software evolves over time.

Building The Dataset: GitHub PRs Curation

Gathering Unstructured Raw Data

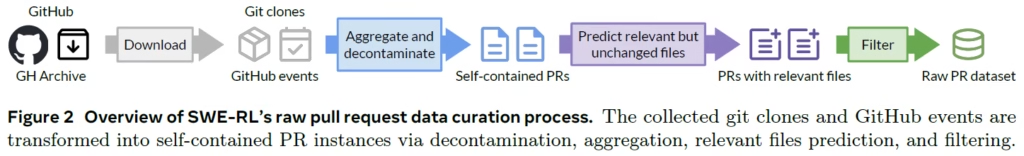

To train the model, the researchers curated a dataset of pull requests (PRs) from GitHub. They leveraged GH Archive, a project that tracks all public activity on GitHub. So rather than just code, it also provides issues, comments, pull requests, and more. To obtain the source code, the researchers cloned the repositories, this way capturing the commit history rather than a code snapshot. This approach was applied to 4.6 million repositories.

An important note is that all repositories used by the SWE-bench benchmark are excluded, since this is the benchmark used to analyze the model performance.

Pull Request Data Aggregation

The gathered data so far is not structured for training. In this step, the purpose is to aggregate all relevant information for each PR, to have self-contained PR data for training. All PRs that were not eventually merged are filtered out. For each PR that is kept, we aggregate all of its relevant data. Specifically, the description of the issue associated with the PR, comments, and the content of the edited files before the change. We take the final merged changes to serve as a reference solution.

Relevant Files Prediction

In this step, we add files that are related to the PR but are not changed as part of it. The researchers discovered that feeding the model only with the files that are edited is causing the model to develop a bias where it will generate edits to all of its input files. This is undesirable of course since in practice some files are relevant to the code change but do not require a change themselves. The researchers use Llama-3.1-70B-Instruct to predict which files are related, given the pull request description and the paths of the edited files.

Filtering Out Low-Quality PRs

Not all GitHub pull requests are of high-quality. Some for example are generated by bots, and others include just a version bump. Therefore, the researchers employed various filtering rules to remain with a dataset of approximately 11 million high-quality pull requests.

SWE-RL Training Process

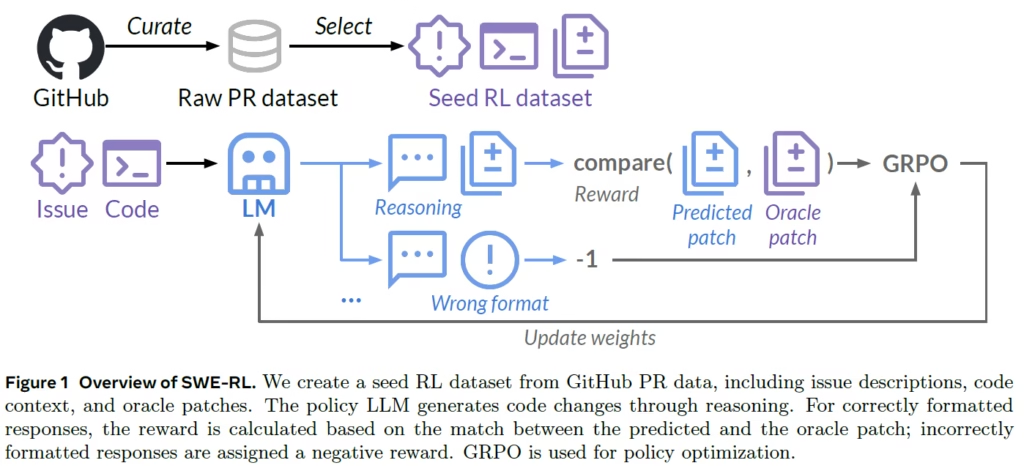

The above figure from the paper can help us understand the training process. Let’s analyze it.

Seed RL Dataset

First, we already covered the data curation step, where the researchers built a large pull request dataset. From this dataset, we select a subset of high-quality samples to create what they call the seed dataset for reinforcement learning. Each selected sample should have at lease one linked GitHub issue, where the issue should describe a bug-fixing request, and the code changes should involve programming files.

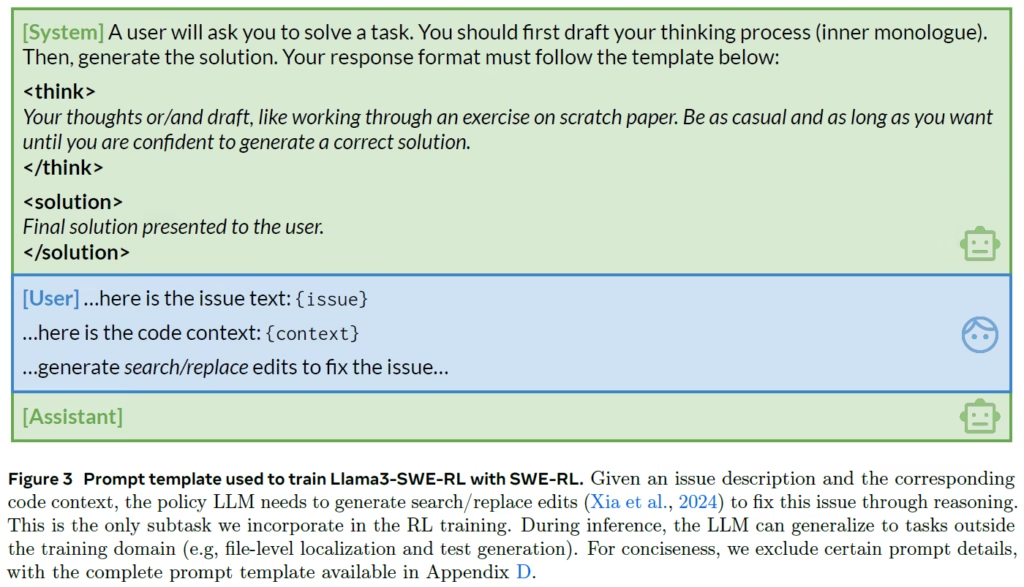

LLM Prompt Format

Each sample from the seed RL dataset is fed into the LLM after being converted into a consistent input prompt format. In the above figure, we can see the prompt template. It starts with a system instruction that tells the model to output its reasoning process wrapped within <think> tags, followed by its proposed solution, wrapped within <solution> tags. This part is used for all samples, and for each sample the prompt body includes the GitHub issue description, and the relevant code context, extracted during data curation.

Sampling Multiple Outputs

Going back to the training process, given an input prompt, we sample multiple outputs from the model. In the training process figure above, one output represents a valid solution, and another represents an invalid format solution. In practice, more than two solutions are sampled. Next, we calculate a reward for each of the outputs.

Calculating a Reward For Each Output

Traditionally, a reward model is used to calculate the reward. But ,in this case, we use rule-based reinforcement learning, similarly to DeepSeek-R1 approach. The rule is different than DeepSeek-R1 though.

- For outputs with illegal format, the reward is -1.

- For valid format outputs, the reward is determined using a similarity score between the predicted patch, the code changes that the model generated, to the oracle patch, which is the final real merged changes for the pull request. This provides a value between 0 to 1.

Some limitation for this reward calculation is that it may prevent the model from exploring alternative solution approaches than the one used in the original pull request.

Update The Model Weights With GRPO

The reinforcement learning algorithm used is GRPO, short for Group Relative Policy Optimization, same as with DeepSeek-R1. Given a group of outputs, this algorithm steers the model towards the response with the highest reward. The model trained with this approach is called Llama3-SWE-RL.

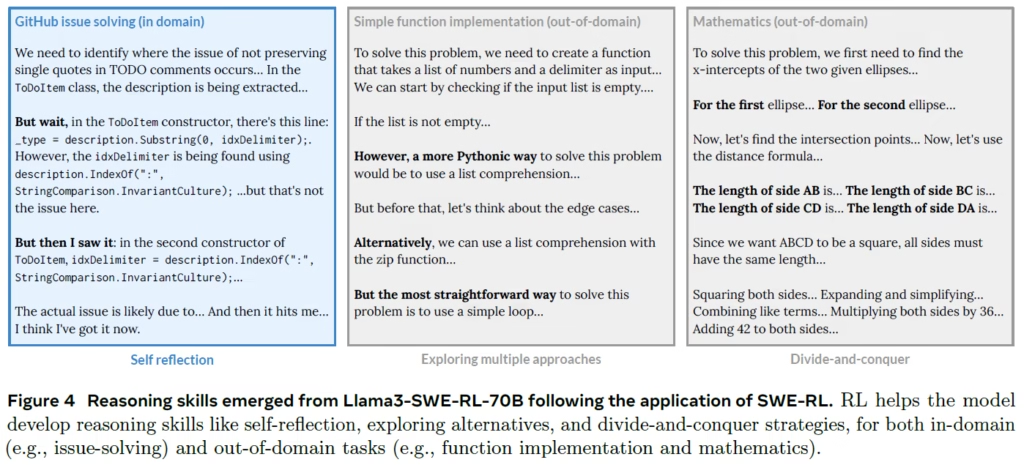

LLama3-SWE-RL Aha Moments

Applying this RL training process confirms the aha moment discovered by DeepSeek-R1, but this time in the context of real-world software engineering tasks. We can see an example of this on the left of the above figure. Given a problem, the model learns to allocate more thinking time to reflect on its initial assumptions during the reasoning process. This isn’t something the researchers explicitly programmed. It’s an emergent behavior that arises naturally through reinforcement learning.

But that’s not all. Surprisingly, the researchers identified additional aha moments where the model developed general reasoning abilities that transfer to out-of-domain tasks, even though these tasks were not included in the reinforcement learning dataset.

- One example is a simple function implementation task, where the model shows a behavior of exploring alternatives for the solution.

- Another example is a math question, where the model demonstrates a divide-and-conquer strategy, breaking the problem into smaller pieces and solving each part step by step to compute the final result.

Results

Software Engineering Performance Comparison

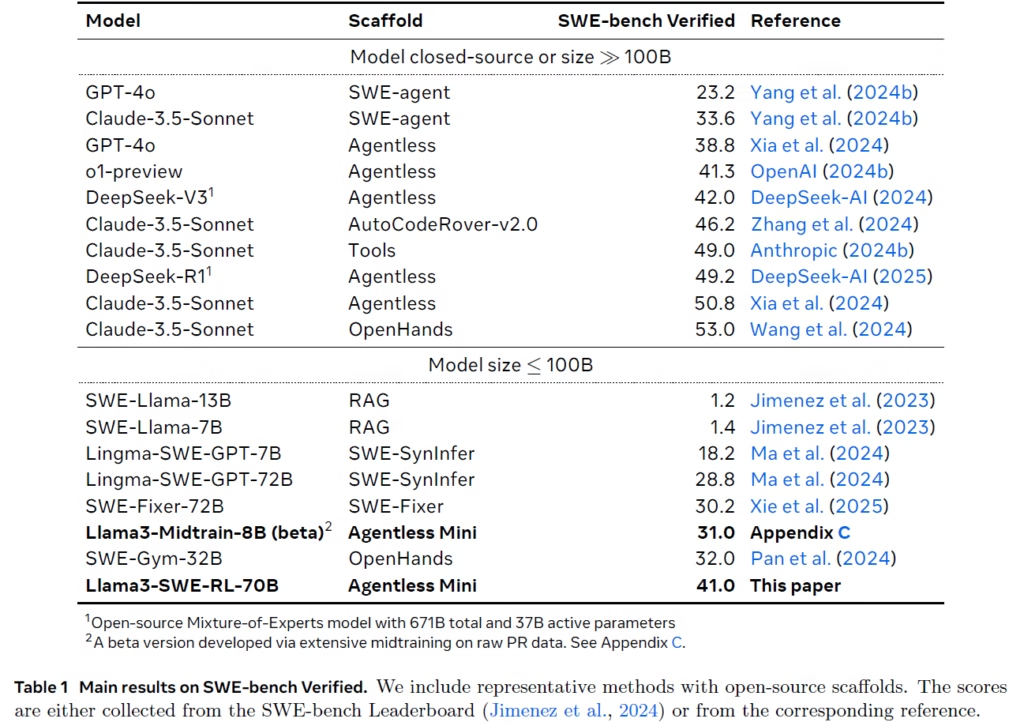

The above table presents the pass@1 results on SWE-bench Verified, a human-verified collection of high-quality GitHub issues. This benchmark is specifically designed to evaluate how well models can fix real-world software issues. The table is divided into two sections:

- The upper section lists closed-source models or models with more than 100 billion parameters.

- The lower section focuses on open-source models with fewer than 100 billion parameters.

Llama3-SWE-RL-70B achieves a pass@1 score of 41%, meaning it solves 41% of the issues correctly on the first try. This sets a new state-of-the-art for models under 100 billion parameters. This result is not as good as the results of some of the closed source models or the larger open-source models. However, this model is open-source and of a much more friendly size.

SFT vs RL

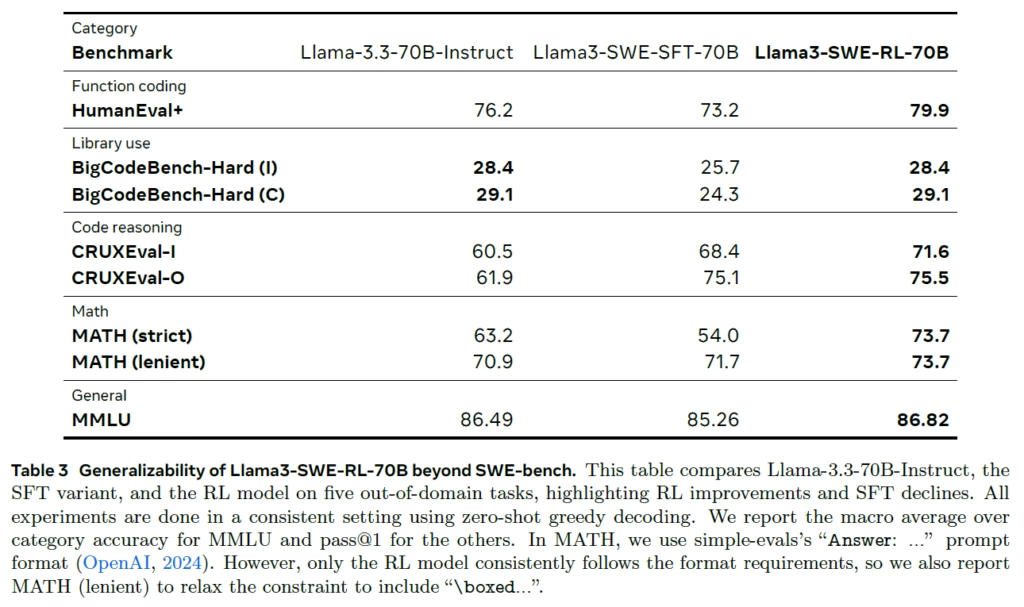

In the above table, we can see the performance on multiple out-of-domain tasks for the base model, on the left, a model that was trained on the PR data using SFT, in the middle, and the RL model on the right.

The results show that the RL model consistently outperforms both the base model and the SFT model, even on tasks that were never part of the RL training data. This reinforces the idea that RL unlocks general reasoning skills, rather than just memorizing the training examples.

References & Links

- Paper

- Code

- Join our newsletter to receive concise 1-minute read summaries for the papers we review – Newsletter

All credit for the research goes to the researchers who wrote the paper we covered in this post.