In this post we break down the Large Language Diffusion Models paper, introducing the first diffusion based LLM that rival a strong LLM at large scale.

Introduction

Large Language Models (LLMs) have become extremely powerful over the recent years, paving the way toward artificial general intelligence. These models are fundamentally autoregressive, meaning they predict the next token given a sequence of tokens. We can think of this as if they are writing a response one word at a time, where each new word is based on the words that came before it. This approach has proven remarkably powerful, bringing us to where we are today.

However, this approach also has its challenges. For instance, the sequential token-by-token generation comes with high computational costs. Additionally, the inherent left-to-right modeling limits the model’s effectiveness in reversal reasoning. One example we will touch on later is reversal poem completion, where given a sentence from a poem, the model should predict the previous sentence in the poem. Regardless, it is interesting to ask whether autoregressive modeling is the only possible way.

The Large Language Diffusion Models paper challenges this notion. Just as LLMs are the cornerstone of natural language processing, diffusion models are the kings of computer vision, serving as the backbone behind the top text-to-image generation models. In this post, we’ll see how the researchers applied diffusion models to language modeling.

What Are Diffusion Models?

Let’s start with a quick recap of diffusion models in computer vision, which will help us understand the high-level idea of the paper.

Diffusion models take a prompt as input, such as “A cat is sitting on a laptop”. The model learns to gradually remove noise from an image to generate a clear image. The model starts with a random noise image, as shown on the left, and in each step, it removes some of the noise. The noise removal is conditioned on the input prompt, so we’ll end with an image that matches the prompt. The three dots imply that we skip steps in this example. Finally, we get a clear image of a cat, which we take as the final output of the diffusion model for the provided prompt.

During training, to learn how to remove noise, we gradually add noise to a clear image, which is known as the diffusion process. Various advancements have been made in this area, but that is not the focus of this post.

Large Language Diffusion Models Intuition

The model presented in the paper is called LLaDA, short for Large Language Diffusion with mAsking. We start with a sequence of tokens on the left, where black color represents a masked token. The orange non-masked tokens represent the prompt, and the black masked tokens represent the response. Note that a special token represents a mask token, unlike a noise that is added to an image as we’ve seen before.

We gradually remove masks from the tokens, with bright color representing non-masked tokens. Finally, we remove all the masks and end with a response to the input prompt. In this example, the clear response token sequence represents the response: “Once upon a time, in a small village, there lived a wise old owl”.

LLaDA Training & Inference Overview

Let’s dive deeper into a more detailed overview of large language diffusion models to understand better. We’ll use the following figure from the paper, which illustrates the two training stages of the model, pretraining and supervised fine-tuning, as well as the inference process.

LLaDA Training Phase 1 – Pretraining

We start with the pretraining stage, illustrated on the left of the above figure.

At the top, we have a sample sequence from the training set. We randomly sample the masking ratio t (a value between 0 and 1). Then, we randomly decide for each token independently whether it should be masked or not, with probability t. This results in a partially masked tokens sequence. This sequence is fed into the main component of the model called mask predictor, which is a transformer model that is trained to restore the masked tokens, using a cross entropy loss computed on the masked tokens. The pretraining dataset size is 2.3 trillion tokens.

LLaDA Training Phase 2 – Supervised Fine-Tuning

The second training stage is supervised fine-tuning, illustrated in the middle of the figure above. The purpose of this phase is to enhance LLaDA’s ability to follow instructions.

At the top, we have a sample with a prompt and a response. We want to train the model to output the response given the prompt. Similarly to pretraining, we randomly mask some of the tokens in the sample, but this time we only mask tokens from the response, leaving the prompt unmasked. We then feed the prompt and the partially masked response to the mask predictor to recover the masked tokens from the response. This process is very similar to pretraining, except we only mask the response part of the samples.

The masking ratio, which determines how many tokens are masked during training, is random for each sample. This means that during training, the model sees a variety of almost non-masked samples and heavily masked samples.

The researchers trained LLaDA on 4.5 million samples during this stage. Since the samples are not of the same length in this stage, samples are padded with a special end-of-sequence token. This way, the model is trained on an artificial fixed-length input and is capable of predicting the end-of-sequence tokens to end the generation process.

Inference: How LLaDA Generates Text

Now that we have an idea of how LLaDA is trained, let’s move on to review the inference process, illustrated on the right of the above figure.

Given a prompt, we create a sample with the prompt and a fully masked response. We gradually unmask the response using an iterative process called the reverse diffusion process. At the beginning of each iteration, we have a sequence with the clean prompt and a partially masked response. We feed the sequence into the mask predictor, which predicts all of the masked tokens. However, some of the predicted tokens are remasked, so we remain with a partially masked response until the final iteration, where we get the full response.

Remasking Strategies During Inference

The number of iterations is a hyperparameter of the model, determining a tradeoff between compute and quality, as more iterations enhance the output quality. In each iteration, the number of tokens being remasked is based on the total number of iterations. But how do we decide which tokens to remask in each iteration? This could have been random, but the researchers use two more effective methods.

- Low-confidence remasking – In this method, tokens with the lowest confidence in their prediction are the ones being remasked. For each token, the mask predictor chooses the token with the highest probability from the vocabulary. That highest probability represents the confidence of the token prediction, indicating how certain the model is that this is the correct token compared to other options.

- Semi-autoregressive remasking – The length of the responses can vary between prompts. For some prompts that require a short response, a large portion of the response will be the end-of-sequence token. To avoid generating many high-confidence end-of-sequence tokens, the generation length is divided into blocks, and the blocks are processed from left to right. Within each block, we apply the reverse diffusion process to perform sampling.

LLaDA Results

Benchmark Results

In the above figure, we see a comparison between the 8 billion parameters LLaDA base model and LLaMA 3 and LLaMA 2 of similar sizes on multiple tasks. LLaDA, in red, clearly outperforms LLaMA 2, in blue, and is comparable and in some cases better than LLaMA 3, in purple.

The results that we see here are for the base models. For the instruction-tuned models which are not shown in this chart, the comparison leans more toward LLaMA 3. However, the instruction tuned LLaMA 3 was trained with both supervised fine-tuning and reinforcement learning after the pretraining stage, while the instruction tuned LLaDA model was only trained with supervised fine-tuning after the pretraining stage.

LLaDA Scaling Trends

Another interesting figure from the paper shows the scalability of LLaDA on language tasks. The researchers trained LLaDA and autoregressive baseline models of similar sizes, with different allocations for training compute, shown on the x-axis. Each chart represents a different task where the performance is shown on the y-axis. LLaDA demonstrates impressive scalability trends, being competitive with the autoregressive baselines. In the math dataset GSM8K, we see significantly stronger scalability for LLaDA. For the reasoning dataset PIQA, LLaDA lags behind the autoregressive models. However, as the number of FLOPs increases, it closes the gap.

Breaks The “Reversal Curse”

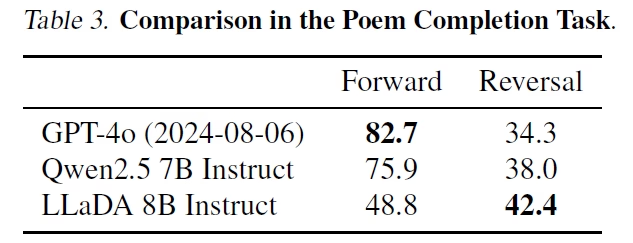

In the above table we see performance comparison on the poem completion task, where given a sentence from a poem, the model is asked to generate the next or the previous sentence. Looking at GPT-4o, it performs much better on the forward task comparing to the reversal task. This is inherent from the autoregressive training. LLaDA is able to improve upon this, demonstrating a more consistent performance across forward and reversal tasks and outperforming GPT-4o and Qwen 2.5 on the reversal task. It will be interesting to see how large language diffusion models perform when trained on a larger scale.

Conclusion: A New Era for Language Models?

LLaDA introduces a paradigm shift in language modeling by applying diffusion models to text generation. With its bidirectional reasoning and scalability, it challenges traditional AR-based LLMs. While still in early stages, its potential impact makes it an exciting development in AI research.

References & Links

- Paper

- GitHub Page

- Join our newsletter to receive concise 1-minute read summaries for the papers we review – Newsletter

All credit for the research goes to the researchers who wrote the paper we covered in this post.